Generating Automatic Curricula via Self-Supervised Active Domain Randomization

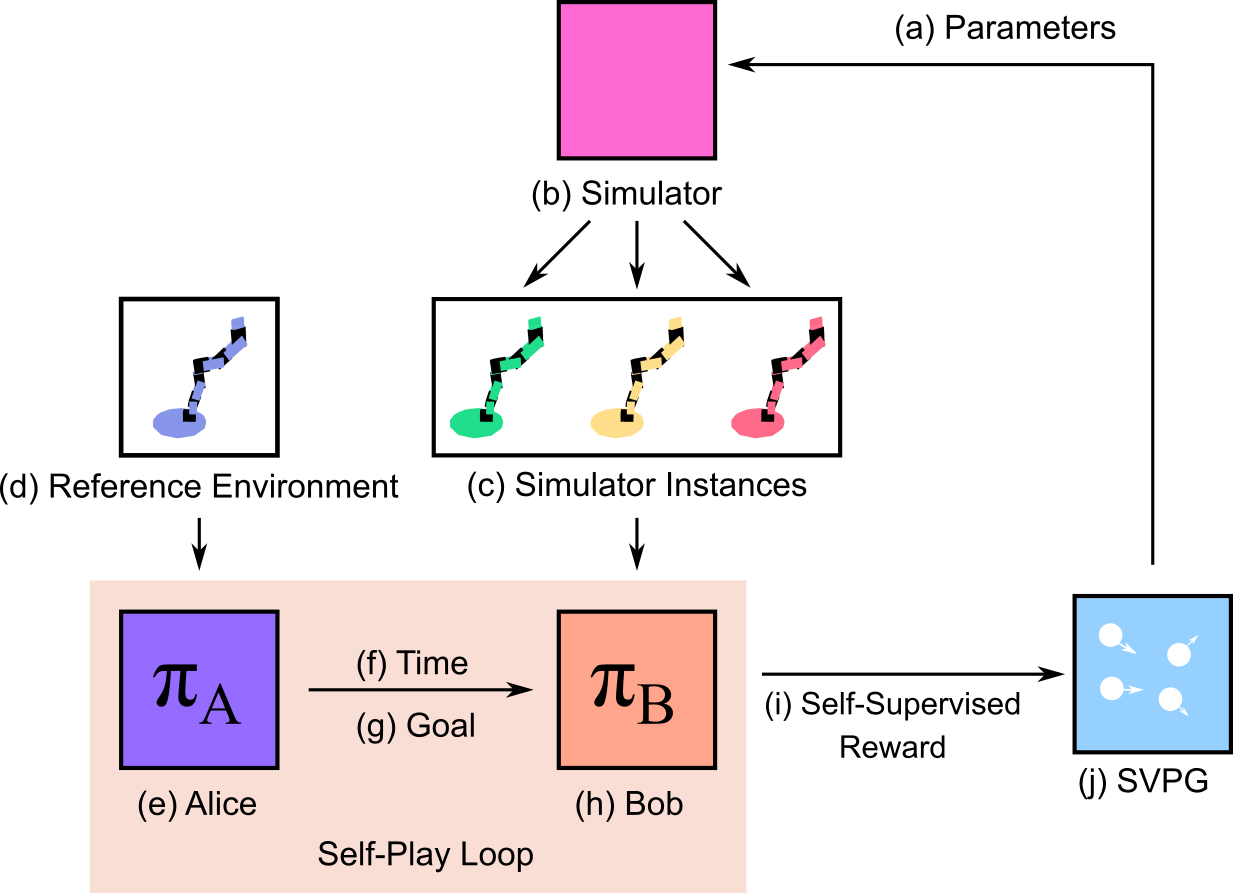

Goal-directed Reinforcement Learning (RL) traditionally considers an agent interacting with an environment, which gives the agent a real-valued reward proportional to its completion of some goal. Goal-directed RL has seen large gains in sample efficiency because proposing goals makes it easy to reuse existing experience or generate new experience. One approach, self-play, allows an agent to "play" against itself by alternately setting and accomplishing goals. This creates a learned curriculum through which the agent can learn to accomplish progressively more difficult goals. However, self-play has so far been limited to goal curriculum learning, that is, learning progressively harder goals within a single environment. Recent work on robotic agents has shown that varying the environment during training, for example with domain randomization, leads to more robust transfer. Motivated by this, we extend the self-play framework to jointly learn a goal curriculum and an environment curriculum, yielding an approach that learns the most fruitful domain randomization strategy through self-play. Our method, Self-Supervised Active Domain Randomization (SS-ADR), generates a coupled goal-task curriculum in which agents learn through progressively more difficult tasks and environment variations. By encouraging the agent to try tasks that are just outside its current capabilities, SS-ADR builds a domain randomization curriculum that enables state-of-the-art results on various sim2real transfer tasks. Our results show that a curriculum that co-evolves environment difficulty together with the difficulty of the goals set in each environment provides practical benefits in the goal-directed tasks tested.
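The core idea, a proposer that sets both a goal and an environment variation and is rewarded when the task is feasible but just beyond the learner, can be illustrated with a toy sketch. This is not the SS-ADR algorithm: the binary reward rule, the scalar "skill" thresholds standing in for RL policies, the multiplicative difficulty model, and all names (`Task`, `self_play_rewards`, `run_curriculum`, `env_param`) are hypothetical assumptions chosen only to show how goal and environment difficulty can co-evolve.

```python
from dataclasses import dataclass


@dataclass
class Task:
    goal_distance: float  # hypothetical goal difficulty
    env_param: float      # hypothetical randomized environment parameter

    @property
    def difficulty(self) -> float:
        # Toy assumption: overall difficulty is the product of goal and environment hardness.
        return self.goal_distance * self.env_param


def self_play_rewards(alice_reached: bool, bob_reached: bool) -> tuple[float, float]:
    """Illustrative binary self-play rewards (an assumption, not the paper's formula).

    Alice (the proposer) is rewarded only if she reached the goal herself but
    Bob (the learner) failed: the task is feasible yet beyond Bob's ability.
    Bob is rewarded for reaching the goal.
    """
    bob_reward = 1.0 if bob_reached else 0.0
    alice_reward = 1.0 if (alice_reached and not bob_reached) else 0.0
    return alice_reward, bob_reward


def run_curriculum(steps: int = 50, alice_skill: float = 10.0, step: float = 0.1) -> tuple[float, list]:
    """Toy loop where scalar skills replace the learned policies (hypothetical)."""
    bob_skill = 1.0
    goal, env = 1.0, 1.0
    history = []
    for _ in range(steps):
        task = Task(goal, env)
        alice_reached = task.difficulty <= alice_skill
        bob_reached = task.difficulty <= bob_skill
        r_alice, r_bob = self_play_rewards(alice_reached, bob_reached)
        if r_alice > 0:
            # Feasible but just beyond Bob: Bob improves by practising it (toy update).
            bob_skill += step
        elif bob_reached:
            # Too easy for Bob: make both the goal and the environment harder.
            goal *= 1 + step
            env *= 1 + step
        else:
            # Neither agent reached it (infeasible): back off on both axes.
            goal /= 1 + step
            env /= 1 + step
        history.append((task.difficulty, bob_skill, r_alice, r_bob))
    return bob_skill, history


final_skill, hist = run_curriculum()
print(f"final learner skill: {final_skill:.2f}")
```

In this sketch the proposer raises goal and environment difficulty together whenever the learner succeeds and backs off when a task is infeasible, so proposed tasks hover near the learner's frontier, which mirrors the "just outside its current capabilities" intuition in a deliberately simplified form.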
