Generating Anthropomorphic Phantoms Using Fully Unsupervised Deformable Image Registration with Convolutional Neural Networks

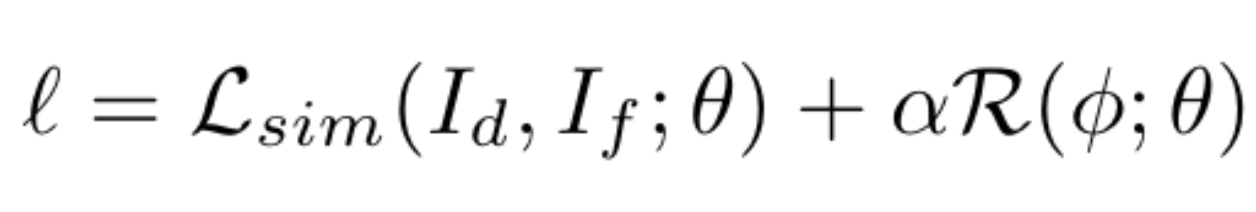

Objectives: Computerized phantoms play an essential role in various applications of medical imaging research. Although existing computerized phantoms can model anatomical variations through organ and phantom scaling, scaling alone cannot fully reproduce the anatomical variations seen in humans. A population of phantoms that models the variations in patient anatomy and, in nuclear medicine, uptake realization is nevertheless essential for comprehensive validation and training. In this work, we present a novel image registration method for creating highly anatomically detailed anthropomorphic phantoms from a single digital phantom. Methods: We propose a deep-learning-based registration algorithm that predicts deformation parameters for warping an XCAT phantom to a patient CT scan. The algorithm optimizes a novel SSIM-based objective function for a given image pair independently of the training data and is thus truly and fully unsupervised. We evaluate the proposed method on a publicly available low-dose CT dataset from TCIA. Results: The proposed model was compared with several state-of-the-art methods and outperformed them by more than 8% as measured by the SSIM and by less than 30% as measured by the MSE. Conclusion: A deep-learning-based unsupervised registration method was developed to create anthropomorphic phantoms while providing "gold-standard" anatomies that can be used as the basis for modeling organ properties. Significance: Experimental results demonstrate the effectiveness of the proposed method, and the resulting anthropomorphic phantom is highly realistic. Combined with realistic simulations of the image formation process, the generated phantoms could serve many applications of medical imaging research.
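To make the idea of an SSIM-based registration objective concrete, the following is a minimal sketch and not the objective used in this work, whose exact form the abstract does not give. It assumes a single global SSIM computed over the whole image (rather than a windowed SSIM), the conventional stabilizing constants 0.01 and 0.03, and intensities scaled to a known `data_range`. The names `global_ssim` and `ssim_loss` are hypothetical.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0):
    """Global (single-window) SSIM between two images of the same shape.

    Assumption: statistics are taken over the whole image; practical
    implementations usually average SSIM over local windows instead.
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    if x.shape != y.shape:
        raise ValueError("images must have the same shape")

    # Conventional stabilizing constants (assumed, not taken from the paper).
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2

    mu_x, mu_y = x.mean(), y.mean()
    var_x = ((x - mu_x) ** 2).mean()
    var_y = ((y - mu_y) ** 2).mean()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()

    luminance = (2.0 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    structure = (2.0 * cov_xy + c2) / (var_x + var_y + c2)
    return luminance * structure


def ssim_loss(warped, fixed, data_range=1.0):
    """Dissimilarity to minimize: 0 when the warped phantom matches the scan."""
    return 1.0 - global_ssim(warped, fixed, data_range)
```

In a registration setting, `warped` would be the phantom after applying the predicted deformation and `fixed` the patient CT slice or volume; minimizing `ssim_loss` over the deformation parameters for that single image pair requires no ground-truth deformations, which is the sense in which such an objective is unsupervised.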
