Gravity Optimizer: a Kinematic Approach on Optimization in Deep Learning

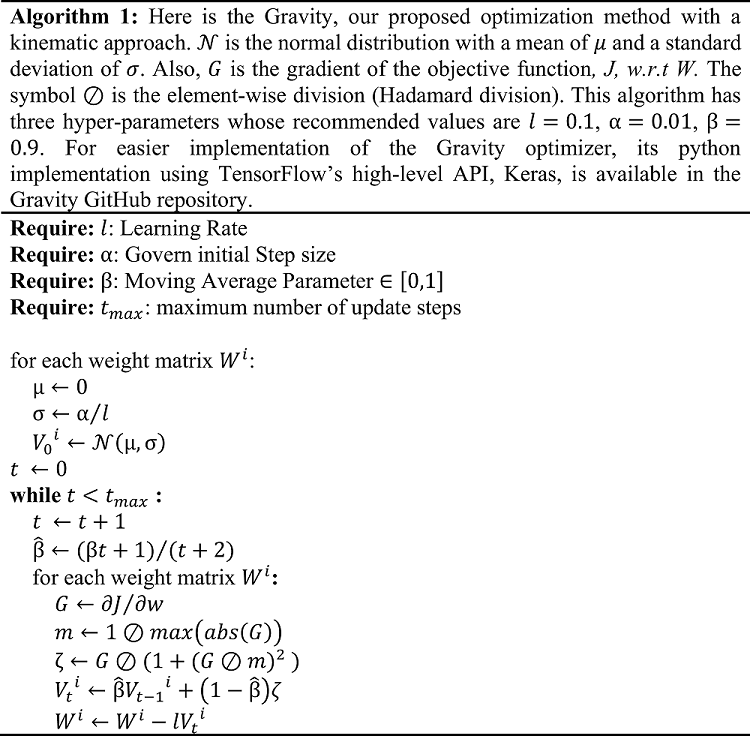

We introduce Gravity, another algorithm for gradient-based optimization. In this paper, we explain how our novel idea changes parameters to reduce a deep learning model's loss. Gravity has three intuitive hyper-parameters, for which we propose the best values. We also propose an alternative to the moving average. To compare the performance of the Gravity optimizer with two common optimizers, Adam and RMSProp, two VGGNet models were trained on five standard datasets with a batch size of 128 for 100 epochs. The Gravity hyper-parameters did not need to be tuned for different models. As explained further in the paper, no overfitting-prevention technique was used, so that the direct impact of the optimizer itself on loss reduction could be investigated. The results show that the Gravity optimizer performs more stably than Adam and RMSProp and achieves higher validation accuracy on datasets with more output classes, such as CIFAR-100 (Fine).

CIFAR-10

CIFAR-100

MNIST

Fashion-MNIST
