"I Won the Election!": An Empirical Analysis of Soft Moderation Interventions on Twitter

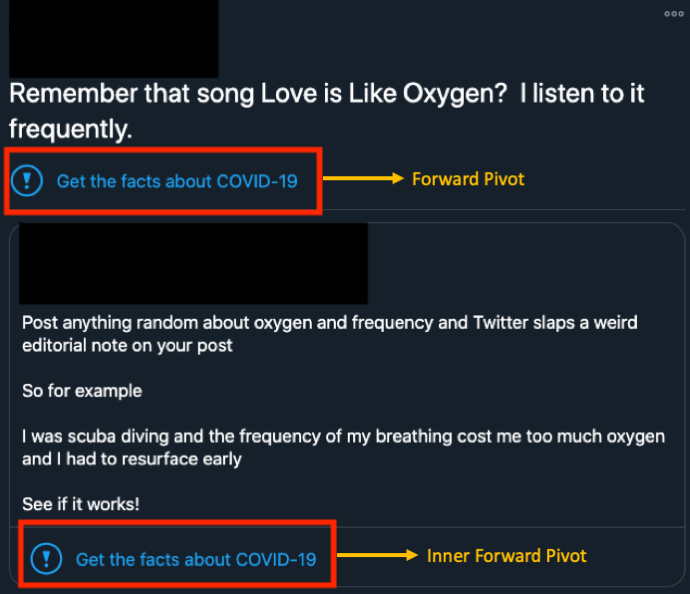

Over the past few years, there has been heated debate and serious public concern regarding online content moderation, censorship, and the principle of free speech on the Web. To ease these concerns, social media platforms like Twitter and Facebook refined their content moderation systems to support soft moderation interventions. Soft moderation interventions are warning labels attached to potentially questionable or harmful content that inform other users about the content and its nature while the content remains accessible, thereby alleviating concerns related to censorship and free speech. In this work, we perform one of the first empirical studies of soft moderation interventions on Twitter. Using a mixed-methods approach, we study the users who share tweets with warning labels on Twitter and their political leaning, the engagement that these tweets receive, and how users interact with tweets that have warning labels. Among other things, we find that 72% of the tweets with warning labels are shared by Republicans, while only 11% are shared by Democrats. By analyzing content engagement, we find that tweets with warning labels received more engagement than tweets without warning labels. We also qualitatively analyze how users interact with content that has warning labels, finding that the most popular interactions are further debunking false claims, mocking the author or content of the disputed tweet, and further reinforcing or resharing false claims. Finally, we describe concrete examples of inconsistencies, such as warning labels that are incorrectly added and warning labels that are missing from tweets that share questionable and potentially harmful information.
