Image Generation from Scene Graphs

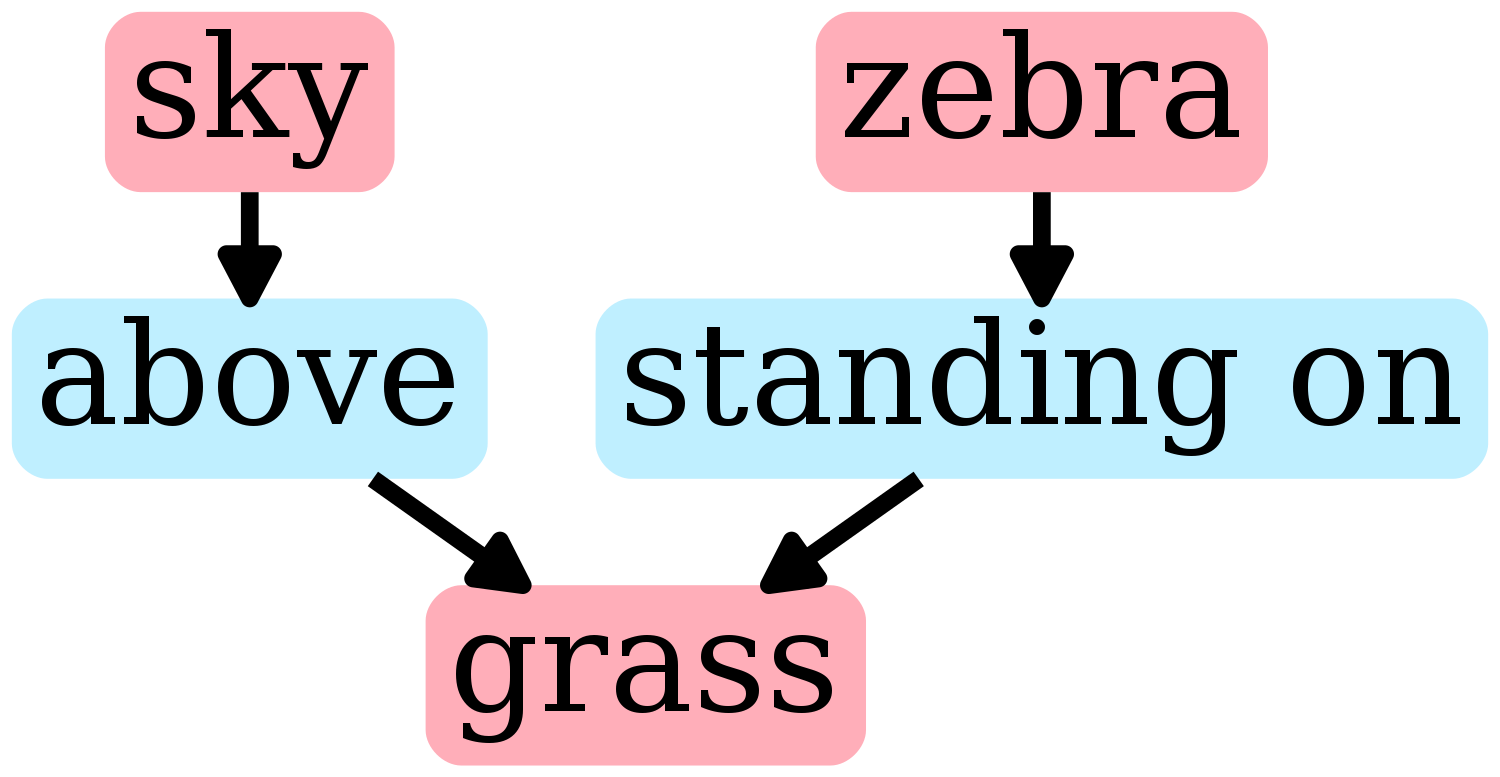

To truly understand the visual world, our models should be able not only to recognize images but also to generate them. To this end, there has been exciting recent progress on generating images from natural language descriptions. These methods give stunning results on limited domains such as descriptions of birds or flowers, but struggle to faithfully reproduce complex sentences with many objects and relationships. To overcome this limitation, we propose a method for generating images from scene graphs, enabling explicit reasoning about objects and their relationships. Our model uses graph convolution to process input graphs, computes a scene layout by predicting bounding boxes and segmentation masks for objects, and converts the layout to an image with a cascaded refinement network. The network is trained adversarially against a pair of discriminators to ensure realistic outputs. We validate our approach on Visual Genome and COCO-Stuff, where qualitative results, ablations, and user studies demonstrate our method's ability to generate complex images with multiple objects.
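As a rough illustration of the input described above, a scene graph can be held as a list of objects plus (subject, predicate, object) triples. The sketch below is not the paper's model: the `SceneGraph` class and the `propagate` function are hypothetical names, and the learned graph convolution is replaced by plain neighbor averaging that ignores predicates, only to show how information can flow along the graph's edges.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SceneGraph:
    """Objects are nodes; (subject, predicate, object) triples are directed edges."""
    objects: list[str]
    triples: list[tuple[int, str, int]] = field(default_factory=list)

    def add_relationship(self, subj: int, predicate: str, obj: int) -> None:
        # Indices refer to positions in self.objects.
        n = len(self.objects)
        if not (0 <= subj < n and 0 <= obj < n):
            raise IndexError("relationship refers to an unknown object")
        self.triples.append((subj, predicate, obj))


def propagate(graph: SceneGraph, vectors: list[list[float]]) -> list[list[float]]:
    """One round of neighbor averaging.

    Assumption: this stands in for a learned graph convolution layer;
    predicates are ignored and no parameters are trained.
    """
    neighbors = [[i] for i in range(len(graph.objects))]  # each node sees itself
    for subj, _, obj in graph.triples:
        neighbors[subj].append(obj)
        neighbors[obj].append(subj)
    dim = len(vectors[0])
    return [
        [sum(vectors[j][d] for j in nbrs) / len(nbrs) for d in range(dim)]
        for nbrs in neighbors
    ]


# Toy graph: "sheep standing on grass", "sky above grass".
graph = SceneGraph(objects=["sheep", "grass", "sky"])
graph.add_relationship(0, "standing on", 1)
graph.add_relationship(2, "above", 1)

# One-hot vectors make it easy to see which objects influenced each node.
one_hot = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
updated = propagate(graph, one_hot)
```

After one round, the vector for "grass" mixes all three objects because it takes part in both relationships, while "sheep" and "sky" each mix only with "grass". In the method described above, per-object vectors of this kind would then feed the box and mask predictors that form the scene layout.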

CVPR 2018

MS COCO

Visual Genome

COCO-Stuff
