Interaction Relational Network for Mutual Action Recognition

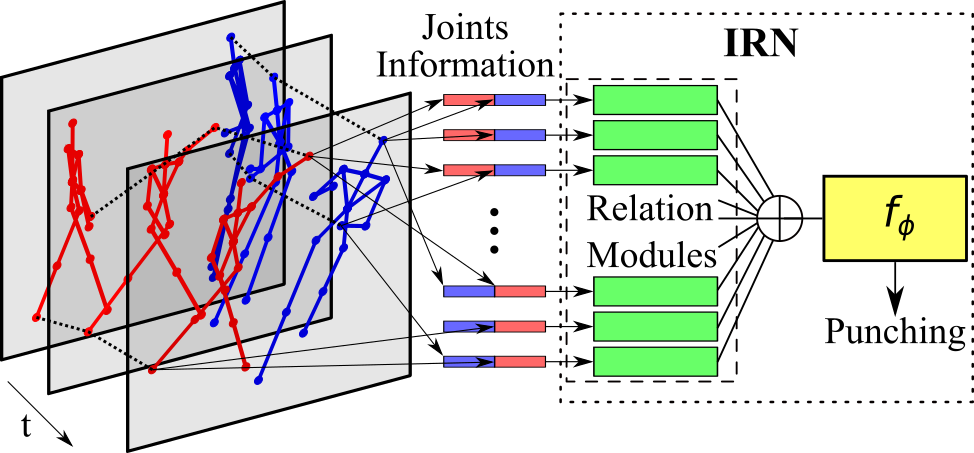

Person-person mutual action recognition (also referred to as interaction recognition) is an important research branch of human activity analysis. Current solutions in the field, mainly dominated by CNNs, GCNs and LSTMs, often rely on complicated architectures and mechanisms that embed the relationships between the two persons into the architecture itself, to ensure the interaction patterns can be properly learned. Our main contribution is a simpler yet very powerful architecture, named Interaction Relational Network (IRN), which uses minimal prior knowledge about the structure of the human body. We drive the network to identify by itself how to relate the body parts of the interacting individuals. To better represent the interaction, we define two different relationships, each leading to its own specialized architecture and model. These relationship models are then fused into a single architecture, which leverages both streams of information to further enhance the relational reasoning capability. Furthermore, we define structured pair-wise operations that extract meaningful extra information, namely distance and motion, from each pair of joints. Finally, by coupling it with an LSTM, our IRN becomes capable of sequential relational reasoning. These extensions to our network can also be valuable for other problems that require sophisticated relational reasoning. Our solution achieves state-of-the-art performance on the traditional interaction recognition datasets SBU and UT, and also on the mutual actions from the large-scale dataset NTU RGB+D. Furthermore, it obtains competitive performance on the interactions subset of the NTU RGB+D 120 dataset.
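
To make the pair-wise idea concrete, the following is a minimal NumPy sketch of inter-person relational reasoning over joint pairs, not the authors' implementation. It assumes each person is given as an array of shape (frames, joints, coordinates). The function names `pair_descriptors` and `relational_scores`, the weight matrices `Wg` and `Wf`, and the exact definitions of the distance and motion features are illustrative assumptions; the abstract does not specify them.

```python
import numpy as np

def pair_descriptors(p1, p2):
    """Build one descriptor per (joint of person 1, joint of person 2) pair.

    p1, p2: arrays of shape (T, J, D) = frames, joints, coordinates.
    Returns an array of shape (J * J, 2*T*D + 3*T - 2).
    """
    T, J, D = p1.shape
    # Pair every joint i of person 1 with every joint j of person 2.
    a = np.broadcast_to(p1[:, :, None, :], (T, J, J, D))
    b = np.broadcast_to(p2[:, None, :, :], (T, J, J, D))
    # Assumed "distance" feature: Euclidean distance per frame.
    dist = np.linalg.norm(a - b, axis=-1)                    # (T, J, J)
    # Assumed "motion" feature: frame-to-frame displacement magnitude.
    speed1 = np.linalg.norm(np.diff(p1, axis=0), axis=-1)    # (T-1, J)
    speed2 = np.linalg.norm(np.diff(p2, axis=0), axis=-1)    # (T-1, J)
    m1 = np.broadcast_to(speed1[:, :, None], (T - 1, J, J))
    m2 = np.broadcast_to(speed2[:, None, :], (T - 1, J, J))
    feats = np.concatenate([
        a.transpose(1, 2, 0, 3).reshape(J, J, T * D),  # trajectory of joint i
        b.transpose(1, 2, 0, 3).reshape(J, J, T * D),  # trajectory of joint j
        dist.transpose(1, 2, 0),
        m1.transpose(1, 2, 0),
        m2.transpose(1, 2, 0),
    ], axis=-1)
    return feats.reshape(J * J, -1)

def relational_scores(pairs, Wg, Wf):
    """Relation-network style aggregation: f(sum over pairs of g(pair))."""
    h = np.maximum(pairs @ Wg, 0.0)   # g: shared linear layer + ReLU per pair
    pooled = h.sum(axis=0)            # order-invariant pooling over all pairs
    return pooled @ Wf                # f: linear map to class scores
```

Because the pooled representation is a sum over pairs, the output does not depend on the order in which joint pairs are listed, so no skeleton graph has to be hard-coded in this sketch.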

NTU RGB+D

NTU RGB+D 120

SBU

UT-Interaction