Interpretable Mixture of Experts

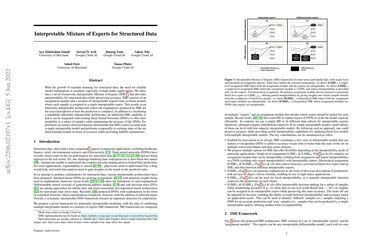

Reliable model explanations are needed in many machine learning applications, particularly for tabular and time-series data, whose use cases often involve high-stakes decision making. Towards this goal, we introduce a novel interpretable modeling framework, Interpretable Mixture of Experts (IME), that yields high accuracy, comparable to "black-box" Deep Neural Networks (DNNs) in many cases, along with useful interpretability capabilities. IME consists of an assignment module and a mixture of experts, with each sample being assigned to a single expert for prediction. We introduce multiple options for IME, depending on whether the assignment module and the experts are interpretable. When the experts are chosen to be interpretable, such as linear models, IME yields an inherently interpretable architecture whose explanations are exact descriptions of how the prediction is computed. In addition to constituting a standalone inherently interpretable architecture, IME has the promise of being integrated with existing DNNs to offer interpretability for a subset of samples while maintaining the accuracy of the DNNs. Through extensive experiments on 15 tabular and time-series datasets, IME is demonstrated to be more accurate than single interpretable models and to perform comparably to existing state-of-the-art DNNs. On most datasets, IME even outperforms DNNs while providing faithful explanations. Lastly, IME's explanations are compared to commonly used post-hoc explanation methods through a user study: participants are better able to predict the model's behavior when given IME explanations, and they find IME's explanations more faithful and trustworthy.
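To make the architecture described above concrete, the following is a minimal sketch of inference with hard assignment to linear experts. It is not the authors' implementation: the linear assignment module, the function name `ime_predict`, and all parameter names and values are assumptions chosen for illustration, and training is omitted entirely.

```python
import numpy as np

def ime_predict(X, gate_W, gate_b, expert_W, expert_b):
    """Assign each sample to exactly one linear expert and predict with it.

    Assumed shapes (hypothetical, for illustration only):
      X: (n, d) inputs, gate_W: (d, K), gate_b: (K,),
      expert_W: (K, d), expert_b: (K,)
    """
    # Assumed assignment module: a linear scorer followed by argmax,
    # so every sample is routed to a single expert.
    logits = X @ gate_W + gate_b
    assigned = np.argmax(logits, axis=1)

    # Each linear expert's explanation is exact: the prediction is the sum
    # of per-feature contributions w_j * x_j plus the expert's bias.
    contributions = expert_W[assigned] * X
    preds = contributions.sum(axis=1) + expert_b[assigned]
    return preds, assigned, contributions

# Toy usage with hand-picked (hypothetical) parameters.
X = np.array([[1.0, 2.0], [-1.0, 3.0]])
gate_W = np.array([[1.0, -1.0], [0.0, 0.0]])
gate_b = np.zeros(2)
expert_W = np.array([[2.0, 0.5], [-1.0, 1.0]])
expert_b = np.array([1.0, -2.0])

preds, assigned, contributions = ime_predict(X, gate_W, gate_b, expert_W, expert_b)
print(assigned)       # index of the expert used for each sample
print(contributions)  # per-feature contributions that sum (with bias) to preds
print(preds)
```

Because each prediction comes from a single linear expert, the returned `contributions` fully account for the output, which is the sense in which the explanation is an exact description of the computation.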


ETT
