Homepage2Vec: Language-Agnostic Website Embedding and Classification

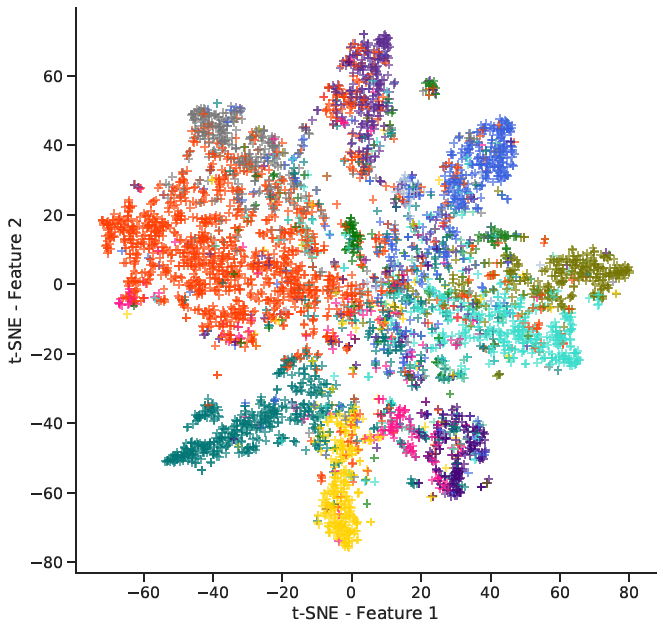

Currently, publicly available models for website classification do not offer an embedding method and have limited support for languages beyond English. We release a dataset of more than two million category-labeled websites in 92 languages collected from Curlie, the largest multilingual human-edited Web directory. The dataset contains 14 website categories aligned across languages. Alongside it, we introduce Homepage2Vec, a machine-learned pre-trained model for classifying and embedding websites based on their homepage in a language-agnostic way. Homepage2Vec, thanks to its feature set (textual content, metadata tags, and visual attributes) and recent progress in natural language representation, is language-independent by design and generates embedding-based representations. We show that Homepage2Vec correctly classifies websites with a macro-averaged F1-score of 0.90, with stable performance across low- as well as high-resource languages. Feature analysis shows that a small subset of efficiently computable features suffices to achieve high performance even with limited computational resources. We make publicly available the curated Curlie dataset aligned across languages, the pre-trained Homepage2Vec model, and libraries
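To make the reported metric concrete, the following is a minimal sketch of how a macro-averaged F1-score can be computed for a multi-label task: F1 is calculated per category and then averaged with equal weight, so rare categories count as much as frequent ones. This is not the authors' evaluation code. The function name `macro_f1` and the toy labels and predictions are assumptions made purely for illustration.

```python
from typing import List, Set


def macro_f1(y_true: List[Set[str]], y_pred: List[Set[str]], labels: List[str]) -> float:
    """Unweighted mean of per-label F1 scores (hypothetical helper, not from the paper)."""
    per_label_f1 = []
    for label in labels:
        # Count true positives, false positives, and false negatives for this label.
        tp = sum(1 for t, p in zip(y_true, y_pred) if label in t and label in p)
        fp = sum(1 for t, p in zip(y_true, y_pred) if label not in t and label in p)
        fn = sum(1 for t, p in zip(y_true, y_pred) if label in t and label not in p)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined here as 0.0 when the label never occurs.
        per_label_f1.append(2 * tp / denom if denom else 0.0)
    return sum(per_label_f1) / len(per_label_f1)


# Toy example: three illustrative categories and four websites (made-up data).
labels = ["Arts", "Business", "Science"]
y_true = [{"Arts"}, {"Business"}, {"Science", "Business"}, {"Arts"}]
y_pred = [{"Arts"}, {"Business", "Arts"}, {"Science"}, {"Arts"}]

score = macro_f1(y_true, y_pred, labels)
print(f"macro-averaged F1: {score:.3f}")
```

Because every category contributes equally to the average, a high macro-averaged score indicates that performance is not driven only by the most common categories.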

Datasets

Introduced in the Paper:

Curlie