Language Model Pre-Training with Sparse Latent Typing

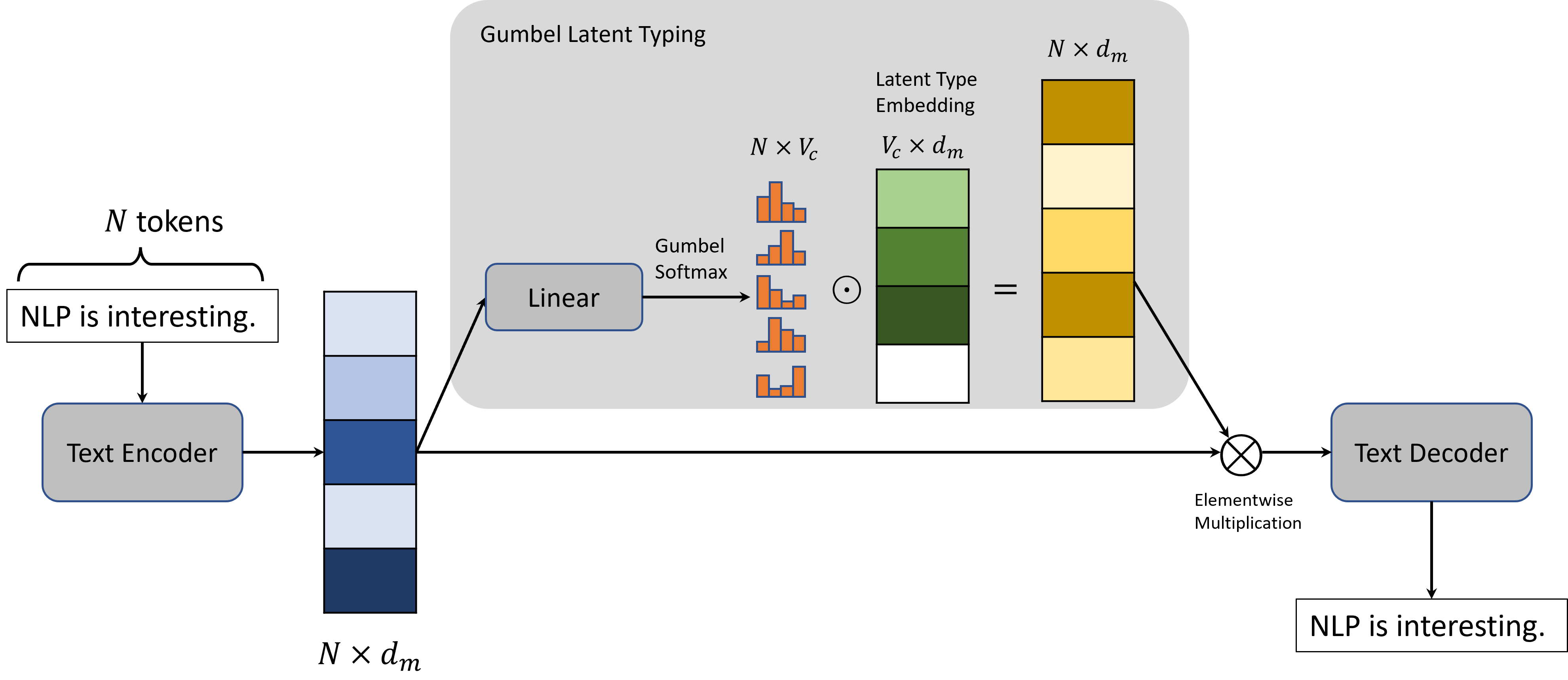

Modern large-scale Pre-trained Language Models (PLMs) have achieved tremendous success on a wide range of downstream tasks. However, most of the LM pre-training objectives only focus on text reconstruction, but have not sought to learn latent-level interpretable representations of sentences. In this paper, we manage to push the language models to obtain a deeper understanding of sentences by proposing a new pre-training objective, Sparse Latent Typing, which enables the model to sparsely extract sentence-level keywords with diverse latent types. Experimental results show that our model is able to learn interpretable latent type categories in a self-supervised manner without using any external knowledge. Besides, the language model pre-trained with such an objective also significantly improves Information Extraction related downstream tasks in both supervised and few-shot settings. Our code is publicly available at: https://github.com/renll/SparseLT.

PDF AbstractCode

Datasets

Results from the Paper

Ranked #6 on

Few-shot NER

on Few-NERD (INTRA)

(using extra training data)

Ranked #6 on

Few-shot NER

on Few-NERD (INTRA)

(using extra training data)

Few-NERD

Few-NERD