Leaner and Faster: Two-Stage Model Compression for Lightweight Text-Image Retrieval

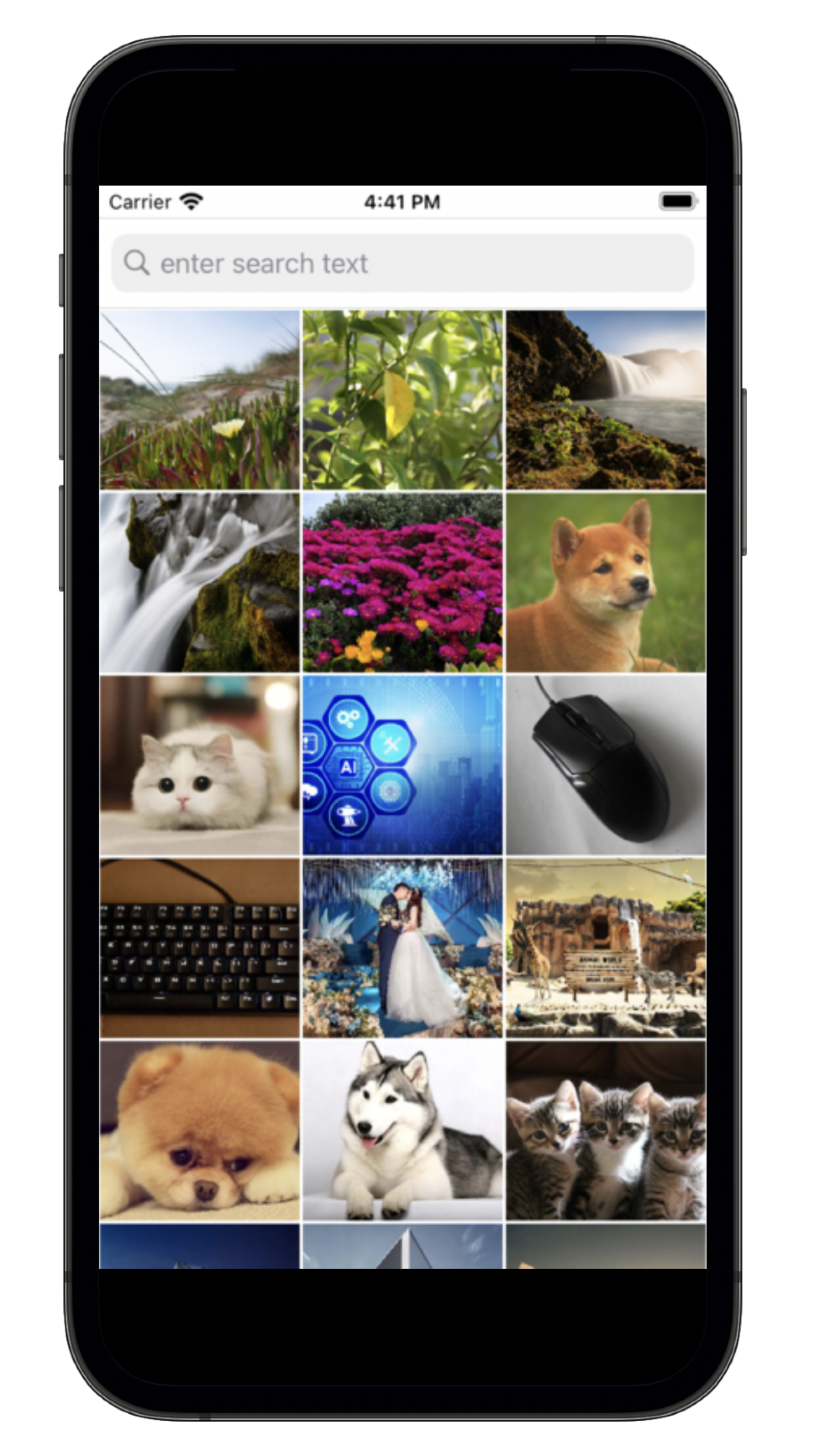

Current text-image approaches (e.g., CLIP) typically adopt a dual-encoder architecture using pre-trained vision-language representations. However, these models still impose non-trivial memory requirements and incur substantial incremental indexing time, which makes them less practical on mobile devices. In this paper, we present an effective two-stage framework to compress a large pre-trained dual-encoder for lightweight text-image retrieval. The resulting model is smaller (39% of the original), faster (1.6x/2.9x for processing image/text respectively), yet performs on par with or better than the original full model on Flickr30K and MSCOCO benchmarks. We also open-source an accompanying realistic mobile image search application.
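For readers unfamiliar with the dual-encoder setup the abstract refers to, the following is a minimal sketch of how retrieval with such a model is commonly organized: image embeddings are computed once and stored in an index, newly added images are encoded and appended (the incremental indexing step), and a text query is embedded and ranked against the index by cosine similarity. This is not the paper's implementation. The function names (`build_index`, `add_to_index`, `search`) are hypothetical, and the plain arrays passed in are stand-ins for the outputs of an image encoder and a text encoder.

```python
import numpy as np


def l2_normalize(x):
    """Scale vectors to unit length so dot products equal cosine similarity."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def build_index(image_embeddings):
    """Offline step: normalize and store one vector per image.

    `image_embeddings` stands in for the output of an image encoder.
    """
    return l2_normalize(np.asarray(image_embeddings, dtype=np.float32))


def add_to_index(index, new_image_embeddings):
    """Incremental indexing: only the newly added images are processed."""
    return np.vstack([index, build_index(new_image_embeddings)])


def search(index, text_embedding, top_k=3):
    """Rank indexed images against one text query embedding.

    `text_embedding` stands in for the output of a text encoder.
    Returns the positions of the best-matching images and their scores.
    """
    query = l2_normalize(np.asarray(text_embedding, dtype=np.float32))
    scores = index @ query
    order = np.argsort(-scores)[:top_k]
    return order, scores[order]
```

Under this layout, the cost of adding an image is one pass through the image encoder and the cost of a query is one pass through the text encoder plus a matrix-vector product, which is why the per-modality encoder speedups reported above matter on a mobile device.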

NAACL 2022

MS COCO
