Learned Queries for Efficient Local Attention

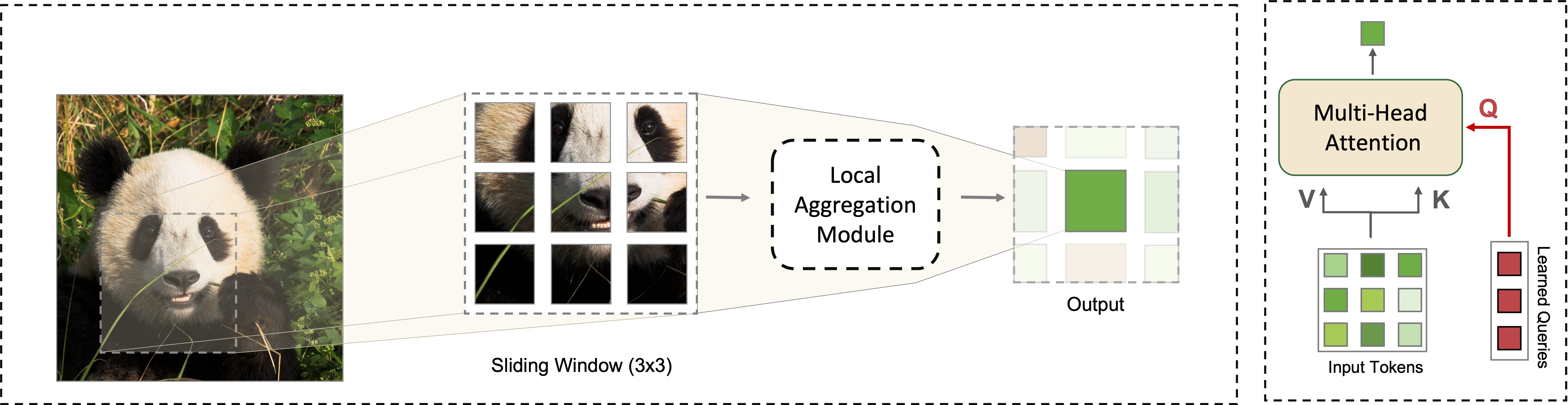

Vision Transformers (ViT) serve as powerful vision models. Unlike convolutional neural networks, which dominated vision research in previous years, vision transformers can capture long-range dependencies in the data. Nonetheless, the self-attention mechanism, an integral part of any transformer architecture, suffers from high latency and inefficient memory utilization, making it less suitable for high-resolution input images. To alleviate these shortcomings, hierarchical vision models apply self-attention locally on non-overlapping windows. This relaxation reduces the complexity to be linear in the input size; however, it limits cross-window interaction, hurting model performance. In this paper, we propose a new shift-invariant local attention layer, called query and attend (QnA), that aggregates the input locally in an overlapping manner, much like convolutions. The key idea behind QnA is to introduce learned queries, which allow a fast and efficient implementation. We verify the effectiveness of our layer by incorporating it into a hierarchical vision transformer model. We show improvements in speed and memory complexity while achieving accuracy comparable to state-of-the-art models. Finally, our layer scales especially well with window size, requiring up to 10x less memory while being up to 5x faster than existing methods. The code is publicly available at https://github.com/moabarar/qna.
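To make the "learned queries" idea concrete, here is a minimal pure-Python sketch of a QnA-style local attention layer, assuming a single head, a single learned query vector `q`, stride 1, and a square window in which positions falling outside the feature map are simply skipped. The function name `qna_local_attention` and its parameters (`w_k`, `w_v`, `q`, `window`) are hypothetical. Multiple heads, positional terms, and the exact padding policy are omitted and may differ from the authors' implementation in the linked repository.

```python
import math
import random


def qna_local_attention(x, w_k, w_v, q, window=3):
    """Hypothetical single-head QnA-style layer (sketch, not the authors' code).

    x:   H x W x C input feature map (nested lists)
    w_k: C x D key projection, w_v: C x Dv value projection
    q:   length-D learned query, shared by every window (input-independent)
    """
    H, W = len(x), len(x[0])
    r = window // 2
    scale = 1.0 / math.sqrt(len(q))

    def project(vec, w):
        # vec (length C) times matrix w (C x out_dim)
        return [sum(vec[c] * w[c][d] for c in range(len(vec))) for d in range(len(w[0]))]

    # Because q is learned rather than computed from x, each key's score q.k is
    # computed once per position and reused by every overlapping window.
    scores = [[scale * sum(qd * kd for qd, kd in zip(q, project(x[i][j], w_k)))
               for j in range(W)] for i in range(H)]
    values = [[project(x[i][j], w_v) for j in range(W)] for i in range(H)]
    dv = len(w_v[0])

    out = []
    for i in range(H):
        row = []
        for j in range(W):
            # Overlapping window centred at (i, j), stride 1; positions outside
            # the feature map are skipped (an assumed border policy).
            logits, vals = [], []
            for a in range(i - r, i + r + 1):
                for b in range(j - r, j + r + 1):
                    if 0 <= a < H and 0 <= b < W:
                        logits.append(scores[a][b])
                        vals.append(values[a][b])
            m = max(logits)  # subtract the max for a numerically stable softmax
            weights = [math.exp(s - m) for s in logits]
            z = sum(weights)
            row.append([sum(wt * v[d] for wt, v in zip(weights, vals)) / z
                        for d in range(dv)])
        out.append(row)
    return out


if __name__ == "__main__":
    random.seed(0)
    H, W, C, D = 5, 5, 4, 4
    x = [[[random.gauss(0, 1) for _ in range(C)] for _ in range(W)] for _ in range(H)]
    w_k = [[random.gauss(0, 1) for _ in range(D)] for _ in range(C)]
    w_v = [[random.gauss(0, 1) for _ in range(D)] for _ in range(C)]
    q = [random.gauss(0, 1) for _ in range(D)]
    y = qna_local_attention(x, w_k, w_v, q, window=3)
    print(len(y), len(y[0]), len(y[0][0]))  # 5 5 4
```

In standard windowed self-attention every position brings its own query, so every query-key pair inside a window has to be scored. In this sketch the query is a fixed learned parameter, so the scores form a single map that all overlapping windows share. Each output is then just a softmax-weighted sum over its window, which is why the operation behaves like a convolution with input-dependent weights.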

Venue: CVPR 2022

Results

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Image Classification | ImageNet | QnA-ViT-Base | Top-1 Accuracy | 83.7% | #365 |
| Image Classification | ImageNet | QnA-ViT-Base | Number of params | 56M | #748 |
| Image Classification | ImageNet | QnA-ViT-Base | GFLOPs | 9.7 | #294 |
| Image Classification | ImageNet | QnA-ViT-Small | Top-1 Accuracy | 83.2% | #413 |
| Image Classification | ImageNet | QnA-ViT-Small | Number of params | 25M | #587 |
| Image Classification | ImageNet | QnA-ViT-Small | GFLOPs | 4.4 | #208 |
| Image Classification | ImageNet | QnA-ViT-Tiny | Top-1 Accuracy | 81.7% | #563 |
| Image Classification | ImageNet | QnA-ViT-Tiny | Number of params | 16M | #519 |
| Image Classification | ImageNet | QnA-ViT-Tiny | GFLOPs | 2.5 | #161 |

Datasets

ImageNet

MS COCO

CelebA