Learning Certified Individually Fair Representations

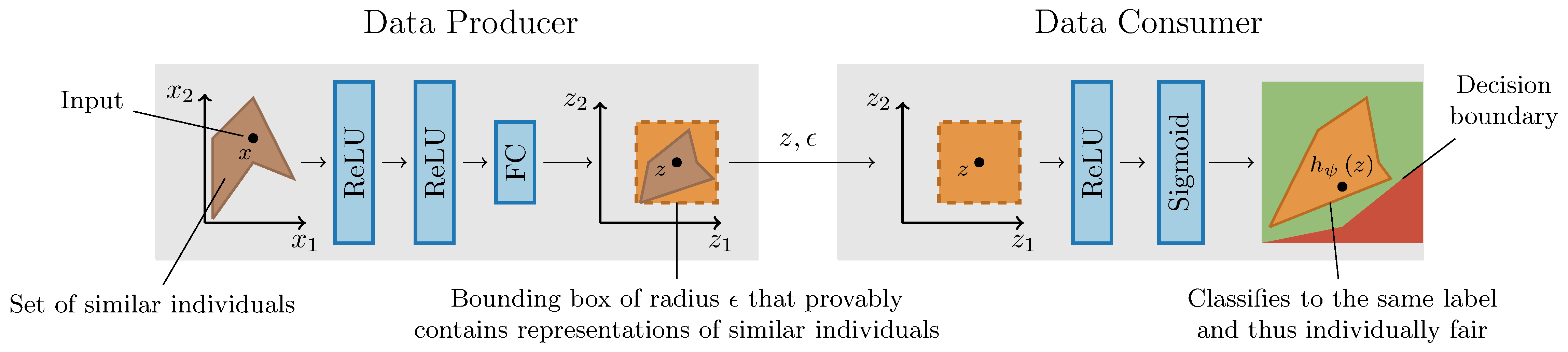

Fair representation learning provides an effective way of enforcing fairness constraints without compromising utility for downstream users. A desirable family of such fairness constraints, each requiring similar treatment for similar individuals, is known as individual fairness. In this work, we introduce the first method that enables data consumers to obtain certificates of individual fairness for existing and new data points. The key idea is to map similar individuals to close latent representations and leverage this latent proximity to certify individual fairness. That is, our method enables the data producer to learn and certify a representation where for a data point all similar individuals are at $\ell_\infty$-distance at most $\epsilon$, thus allowing data consumers to certify individual fairness by proving $\epsilon$-robustness of their classifier. Our experimental evaluation on five real-world datasets and several fairness constraints demonstrates the expressivity and scalability of our approach.

PDF Abstract NeurIPS 2020 PDF NeurIPS 2020 Abstract