Learning deep representations by mutual information estimation and maximization

In this work, we perform unsupervised learning of representations by maximizing mutual information between an input and the output of a deep neural network encoder. Importantly, we show that structure matters: incorporating knowledge about the locality of the input into the objective can greatly influence a representation's suitability for downstream tasks. We further control characteristics of the representation by matching to a prior distribution adversarially. Our method, which we call Deep InfoMax (DIM), outperforms a number of popular unsupervised learning methods and competes with fully supervised learning on several classification tasks. DIM opens new avenues for unsupervised learning of representations and is an important step towards flexible formulations of representation-learning objectives for specific end-goals.
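
To make the idea of maximizing mutual information between an input and its encoding more concrete, here is a minimal sketch of one standard lower bound on mutual information, the InfoNCE bound. The abstract does not say which estimator is used, so the choice of InfoNCE is an assumption made for illustration only. The dot-product critic, the scaled-identity "encoder", and every function and variable name below are hypothetical and are not taken from the paper.

```python
import math
import random


def infonce_lower_bound(scores):
    """InfoNCE lower bound on mutual information from an N x N score matrix.

    scores[i][j] is a critic's score for pairing input i with representation j.
    The diagonal holds the true (input, own representation) pairs; all other
    entries act as negative samples. The bound can never exceed log(N).
    """
    n = len(scores)
    total = 0.0
    for i, row in enumerate(scores):
        m = max(row)  # subtract the row maximum for a stable log-sum-exp
        log_sum = m + math.log(sum(math.exp(s - m) for s in row))
        total += row[i] - log_sum  # log-softmax of the positive pair
    return total / n + math.log(n)


def dot(u, v):
    """Hypothetical critic: a plain dot product between input and code."""
    return sum(a * b for a, b in zip(u, v))


random.seed(0)
inputs = [[random.gauss(0.0, 1.0) for _ in range(8)] for _ in range(16)]

# Hypothetical stand-in for an encoder: a scaled identity map, so each code
# carries full information about its own input.
codes = [[3.0 * a for a in x] for x in inputs]

# Mismatched pairing: each input is paired with another input's code.
mismatched = codes[1:] + codes[:1]

informative = infonce_lower_bound([[dot(x, z) for z in codes] for x in inputs])
uninformative = infonce_lower_bound([[dot(x, z) for z in mismatched] for x in inputs])

print("bound with matched pairs:   ", informative)
print("bound with mismatched pairs:", uninformative)
```

In this toy setup the bound for matched pairs should be much higher than the bound for mismatched pairs. In a training loop, the encoder and critic would be neural networks updated by gradient ascent on such a bound. That part is omitted here.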

ICLR 2019

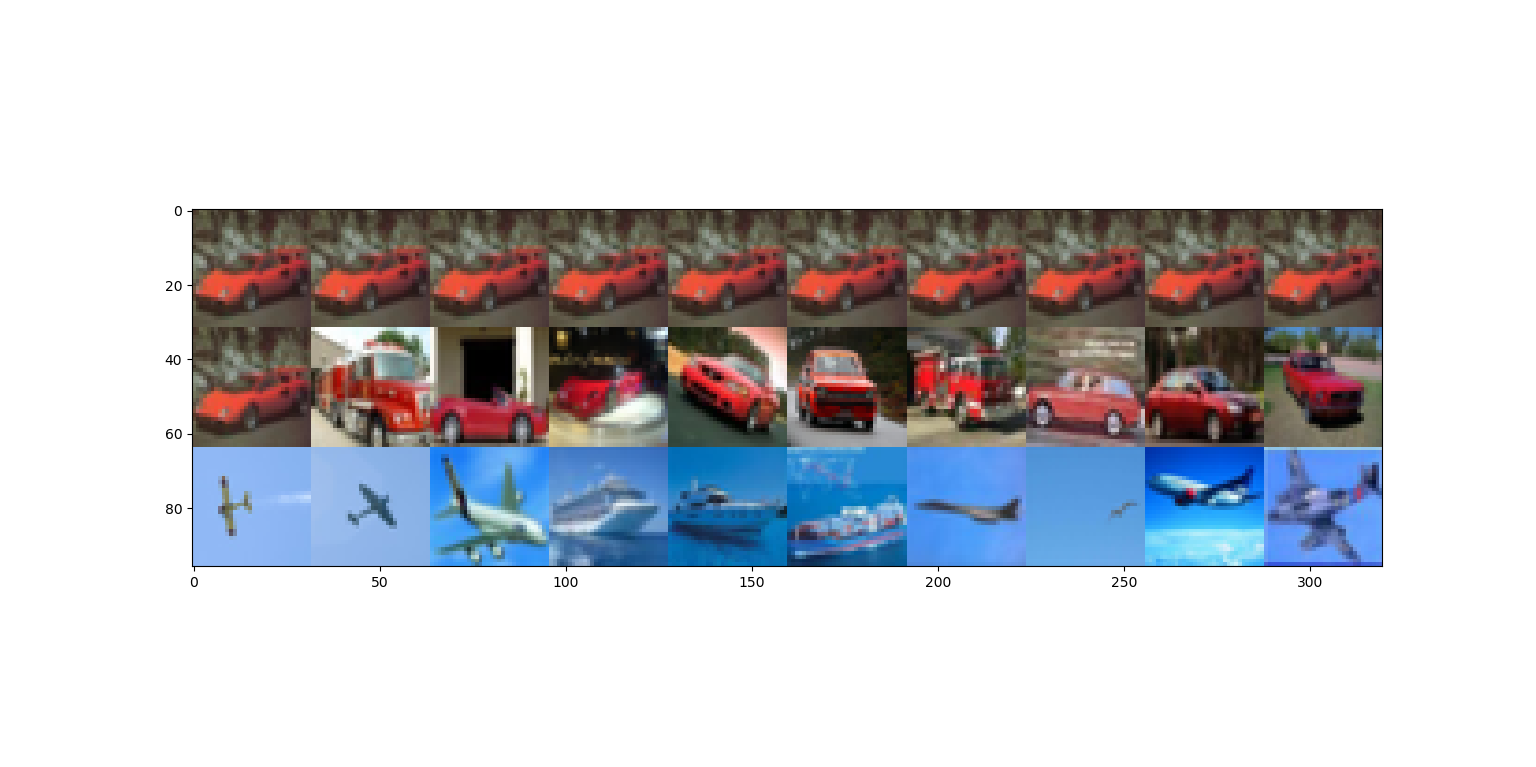

CIFAR-10

CIFAR-100

CelebA

STL-10