Learning-Rate-Free Learning by D-Adaptation

D-Adaptation is an approach to automatically setting the learning rate which asymptotically achieves the optimal rate of convergence for minimizing convex Lipschitz functions, with no back-tracking or line searches, and no additional function value or gradient evaluations per step. Our approach is the first hyper-parameter free method for this class without additional multiplicative log factors in the convergence rate. We present extensive experiments for SGD and Adam variants of our method, where the method automatically matches hand-tuned learning rates across more than a dozen diverse machine learning problems, including large-scale vision and language problems. An open-source implementation is available.

PDF Abstract

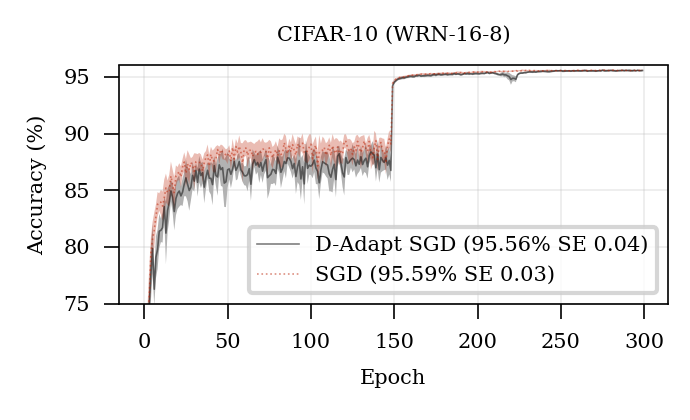

CIFAR-10

CIFAR-10

CIFAR-100

CIFAR-100

fastMRI

fastMRI