Learning Representations that Support Robust Transfer of Predictors

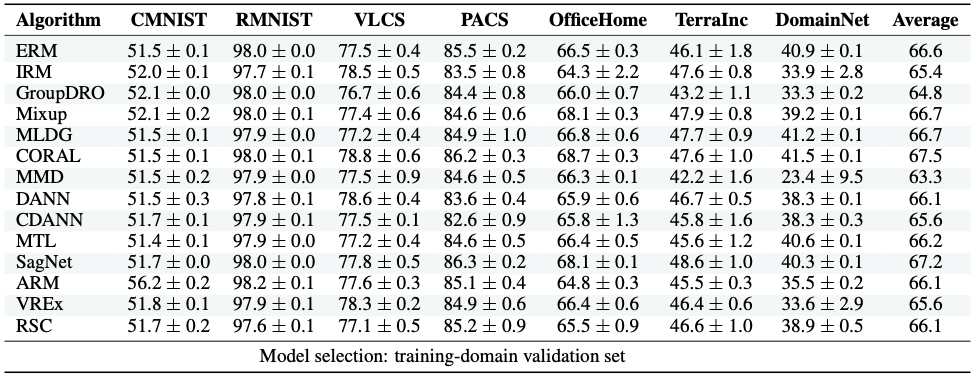

Ensuring generalization to unseen environments remains a challenge. Domain shift can substantially degrade performance unless the shifts are well exercised within the available training environments. We introduce a simple robust estimation criterion, transfer risk, that is specifically geared towards optimizing transfer to new environments. Effectively, the criterion amounts to finding a representation that minimizes the risk of applying any optimal predictor trained on one environment to another. The transfer risk essentially decomposes into two terms: a direct transfer term and a weighted gradient-matching term arising from the optimality of the per-environment predictors. Although inspired by Invariant Risk Minimization (IRM), transfer risk serves as a better out-of-distribution generalization criterion, as we show both theoretically and empirically. We further demonstrate the impact of optimizing transfer risk in two controlled settings, each representing a different pattern of environment shift, as well as on two real-world datasets. Experimentally, the approach outperforms baselines across various out-of-distribution generalization tasks. Code is available at https://github.com/Newbeeer/TRM.
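To make the "apply an optimal predictor from one environment to another" idea concrete, here is a minimal sketch, assuming a fixed one-dimensional representation `phi`, squared loss, and a closed-form least-squares predictor per environment. It evaluates only the direct transfer term (fit on each environment, evaluate on every other one); it omits the gradient-matching term and does not learn the representation. The helper names (`fit_optimal_predictor`, `risk`, `transfer_risk`) and the toy data are hypothetical illustrations, not code from the TRM repository.

```python
from typing import Callable, List, Tuple

# Hypothetical toy setup: each sample is ((x_inv, x_spur), y).
Sample = Tuple[Tuple[float, float], float]
Env = List[Sample]
Feature = Callable[[Tuple[float, float]], float]


def fit_optimal_predictor(env: Env, phi: Feature) -> float:
    """Closed-form least-squares weight w minimizing sum (w * phi(x) - y)^2 (no intercept)."""
    num = sum(phi(x) * y for x, y in env)
    den = sum(phi(x) ** 2 for x, _ in env)
    return num / den


def risk(env: Env, phi: Feature, w: float) -> float:
    """Mean squared error of the linear predictor w * phi(x) on one environment."""
    return sum((w * phi(x) - y) ** 2 for x, y in env) / len(env)


def transfer_risk(envs: List[Env], phi: Feature) -> float:
    """Average risk of each environment's optimal predictor applied to every other environment."""
    total, pairs = 0.0, 0
    for i, source in enumerate(envs):
        w = fit_optimal_predictor(source, phi)  # optimal on the source environment only
        for j, target in enumerate(envs):
            if i != j:
                total += risk(target, phi, w)  # evaluated on a different environment
                pairs += 1
    return total / pairs


# Toy environments: y = 2 * x_inv everywhere, while the spurious
# feature equals +y in env_a and -y in env_b.
env_a: Env = [((x, 2 * x), 2 * x) for x in (1, 2, 3)]
env_b: Env = [((x, -2 * x), 2 * x) for x in (1, 2, 3)]
envs = [env_a, env_b]


def phi_inv(x: Tuple[float, float]) -> float:
    return x[0]  # invariant representation


def phi_spur(x: Tuple[float, float]) -> float:
    return x[1]  # spurious representation


for name, phi in [("invariant", phi_inv), ("spurious", phi_spur)]:
    within = [risk(e, phi, fit_optimal_predictor(e, phi)) for e in envs]
    print(name, "within-env risk:", within, "transfer risk:", transfer_risk(envs, phi))
```

In this toy data both features fit each environment perfectly, so per-environment risk cannot tell them apart. Only `phi_inv` keeps its optimal predictor valid after transfer: its transfer risk is zero, whereas `phi_spur` incurs a large transfer risk because the sign of the spurious correlation flips between environments.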


MS COCO

Office-Home

PACS