Learning to Make Predictions on Graphs with Autoencoders

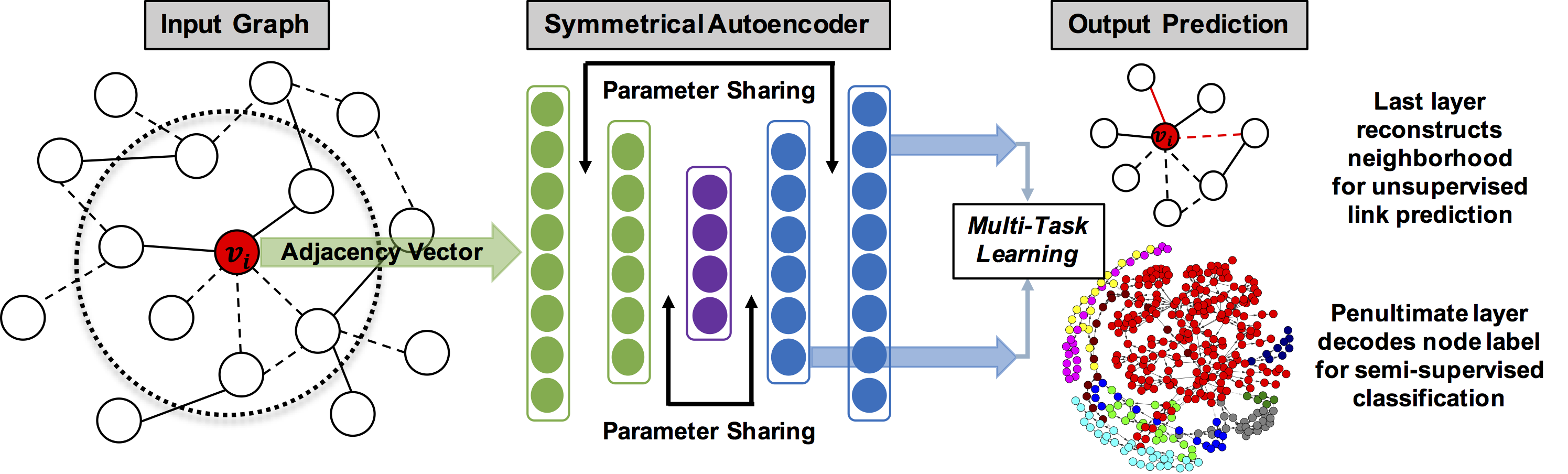

We examine two fundamental tasks associated with graph representation learning: link prediction and semi-supervised node classification. We present a novel autoencoder architecture capable of learning a joint representation of both local graph structure and available node features for the multi-task learning of link prediction and node classification. Our autoencoder architecture is efficiently trained end-to-end in a single learning stage to simultaneously perform link prediction and node classification, whereas previous related methods require multiple training steps that are difficult to optimize. We provide a comprehensive empirical evaluation of our models on nine benchmark graph-structured datasets and demonstrate significant improvement over related methods for graph representation learning. Reference code and data are available at https://github.com/vuptran/graph-representation-learning
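To make the single-stage, multi-task idea concrete, the following is a minimal sketch and not the authors' implementation (that is in the repository linked above). It assumes a one-hidden-layer autoencoder whose input is a node's adjacency row concatenated with its feature vector, a sigmoid decoder that reconstructs the adjacency row for link prediction, and a softmax head on the shared hidden code for node classification. All names (`forward`, `multitask_loss`, `known_mask`), the layer sizes, the weight initialization, and the unweighted sum of the two losses are illustrative assumptions.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def softmax(zs):
    m = max(zs)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, vec):
    # weights is a list of rows; each row has len(vec) entries
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def init_weights(rng, n_out, n_in):
    return [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]


def forward(params, adj_row, features):
    # Joint input: local graph structure (adjacency row) plus node features.
    x = list(adj_row) + list(features)
    hidden = [max(0.0, h) for h in linear(params["enc"], x)]  # ReLU encoder
    recon = [sigmoid(z) for z in linear(params["dec"], hidden)]  # link scores
    class_probs = softmax(linear(params["cls"], hidden))  # node label scores
    return recon, class_probs


def multitask_loss(recon, adj_row, known_mask, class_probs, label):
    eps = 1e-12
    # Binary cross-entropy over adjacency entries whose status is known.
    bce = [-(a * math.log(r + eps) + (1 - a) * math.log(1 - r + eps))
           for r, a, m in zip(recon, adj_row, known_mask) if m]
    link_loss = sum(bce) / max(1, len(bce))
    # Cross-entropy only for labeled nodes (semi-supervised setting).
    node_loss = -math.log(class_probs[label] + eps) if label is not None else 0.0
    return link_loss + node_loss  # assumed equal weighting of the two tasks


# Toy example: 4 nodes, 3 features, 2 hidden units, 2 classes (all made up).
rng = random.Random(0)
n_nodes, n_feat, n_hidden, n_classes = 4, 3, 2, 2
params = {
    "enc": init_weights(rng, n_hidden, n_nodes + n_feat),
    "dec": init_weights(rng, n_nodes, n_hidden),
    "cls": init_weights(rng, n_classes, n_hidden),
}
adj_row = [0, 1, 1, 0]      # entry 2 is treated as unknown via the mask below
known_mask = [1, 1, 0, 1]
features = [1.0, 0.0, 1.0]

recon, class_probs = forward(params, adj_row, features)
loss_labeled = multitask_loss(recon, adj_row, known_mask, class_probs, label=1)
loss_unlabeled = multitask_loss(recon, adj_row, known_mask, class_probs, label=None)
```

Because both heads read the same hidden code, a single gradient step on the summed loss would update the shared encoder for both tasks at once, which is the sense in which training happens in one stage. The sketch stops at the forward pass and loss; an optimizer is left out.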

Cora
