LGGNet: Learning from Local-Global-Graph Representations for Brain-Computer Interface

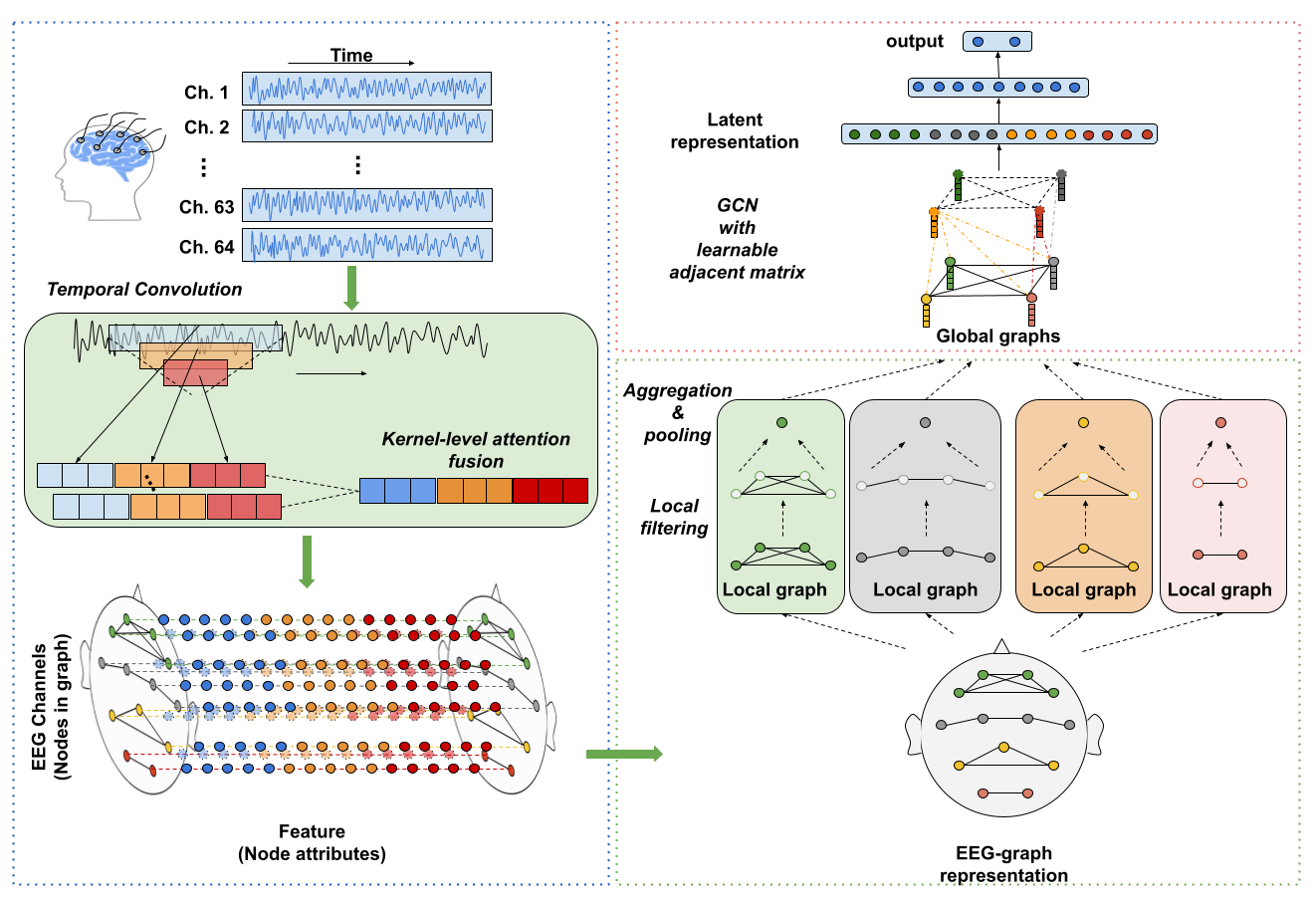

Neuropsychological studies suggest that cooperative activities among different brain functional areas drive high-level cognitive processes. To learn the brain activities within and among different functional areas of the brain, we propose LGGNet, a novel neurologically inspired graph neural network, to learn local-global-graph representations of electroencephalography (EEG) for Brain-Computer Interface (BCI). The input layer of LGGNet comprises a series of temporal convolutions with multi-scale 1D convolutional kernels and kernel-level attentive fusion. It captures the temporal dynamics of EEG, which then serve as input to the proposed local and global graph-filtering layers. Using a defined, neurophysiologically meaningful set of local and global graphs, LGGNet models the complex relations within and among functional areas of the brain. Under robust nested cross-validation settings, the proposed method is evaluated on three publicly available datasets for four types of cognitive classification tasks: attention, fatigue, emotion, and preference classification. LGGNet is compared with state-of-the-art methods, such as DeepConvNet, EEGNet, R2G-STNN, TSception, RGNN, AMCNN-DGCN, HRNN and GraphNet. The results show that LGGNet outperforms these methods, and the improvements are statistically significant (p<0.05) in most cases. They also show that bringing neuroscience prior knowledge into neural network design improves classification performance. The source code can be found at https://github.com/yi-ding-cs/LGG
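The data flow described in the abstract (multi-scale temporal filtering, kernel-level fusion, local aggregation within functional areas, then global filtering across areas) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation, which is available in the linked repository. Every function name here is hypothetical, and the fixed moving-average kernels, mean aggregation, hand-set attention scores, and row-normalized adjacency are simplifying assumptions standing in for learned components.

```python
import numpy as np

def temporal_features(eeg, kernel_sizes=(4, 8, 16)):
    """eeg: (channels, time). Returns (channels, n_kernels) log-power features.
    Assumption: moving-average kernels stand in for learned 1D kernels."""
    feats = []
    for k in kernel_sizes:
        kernel = np.ones(k) / k
        filtered = np.stack([np.convolve(ch, kernel, mode="valid") for ch in eeg])
        feats.append(np.log(np.mean(filtered ** 2, axis=1) + 1e-8))
    return np.stack(feats, axis=1)

def attentive_fusion(feats, scores):
    """Fuse per-kernel features with softmax weights over the kernel axis.
    Assumption: `scores` would be learned; here they are supplied by hand."""
    w = np.exp(scores - scores.max())
    w /= w.sum()
    return feats @ w  # shape: (channels,)

def local_graph_filter(channel_feats, regions):
    """Collapse the channels of each functional area into one node (mean)."""
    return np.array([channel_feats[idx].mean() for idx in regions])

def global_graph_filter(region_feats, adjacency):
    """One step of neighborhood averaging with self-loops: D^-1 (A + I) x."""
    a = adjacency + np.eye(len(adjacency))
    return (a / a.sum(axis=1, keepdims=True)) @ region_feats

# Toy input: 6 channels, 128 time samples, grouped into 3 hypothetical areas.
rng = np.random.default_rng(0)
eeg = rng.standard_normal((6, 128))
regions = [[0, 1], [2, 3], [4, 5]]
adjacency = np.ones((3, 3)) - np.eye(3)  # fully connected areas

feats = temporal_features(eeg)
fused = attentive_fusion(feats, scores=np.array([0.2, 0.5, 0.3]))
local = local_graph_filter(fused, regions)
global_out = global_graph_filter(local, adjacency)
```

In this toy setup, `global_out` holds one value per functional area. A classifier head would follow in a real model, and the kernels, attention scores, and adjacency weights would be trained rather than fixed.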
