LiSHT: Non-Parametric Linearly Scaled Hyperbolic Tangent Activation Function for Neural Networks

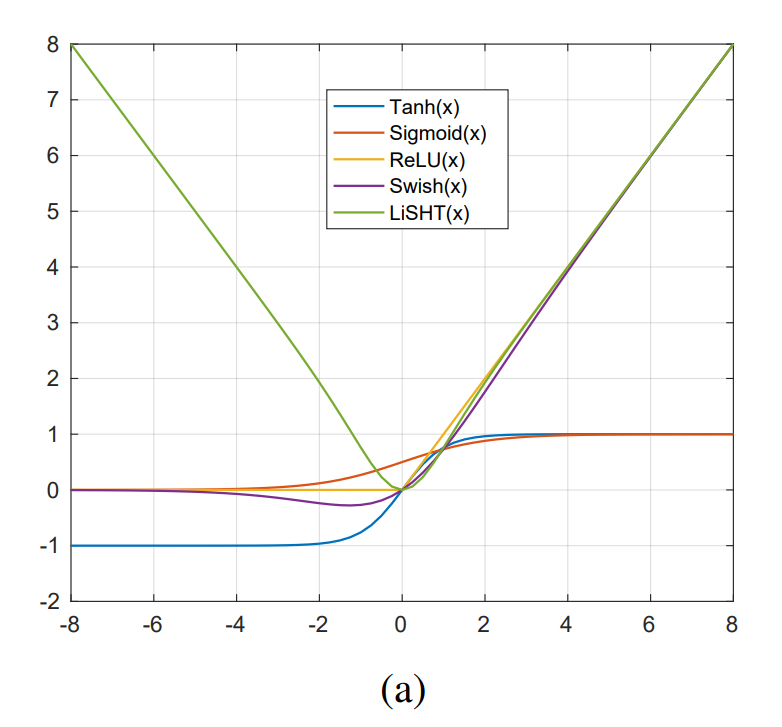

The activation function in neural network introduces the non-linearity required to deal with the complex tasks. Several activation/non-linearity functions are developed for deep learning models. However, most of the existing activation functions suffer due to the dying gradient problem and non-utilization of the large negative input values. In this paper, we propose a Linearly Scaled Hyperbolic Tangent (LiSHT) for Neural Networks (NNs) by scaling the Tanh linearly. The proposed LiSHT is non-parametric and tackles the dying gradient problem. We perform the experiments on benchmark datasets of different type, such as vector data, image data and natural language data. We observe the superior performance using Multi-layer Perceptron (MLP), Residual Network (ResNet) and Long-short term memory (LSTM) for data classification, image classification and tweets classification tasks, respectively. The accuracy on CIFAR100 dataset using ResNet model with LiSHT is improved by 9.48, 3.40, 3.16, 4.26, and 1.17\% as compared to Tanh, ReLU, PReLU, LReLU, and Swish, respectively. We also show the qualitative results using loss landscape, weight distribution and activations maps in support of the proposed activation function.

PDF Abstract

CIFAR-10

CIFAR-10

CIFAR-100

CIFAR-100

MNIST

MNIST