Meshing Point Clouds with Predicted Intrinsic-Extrinsic Ratio Guidance

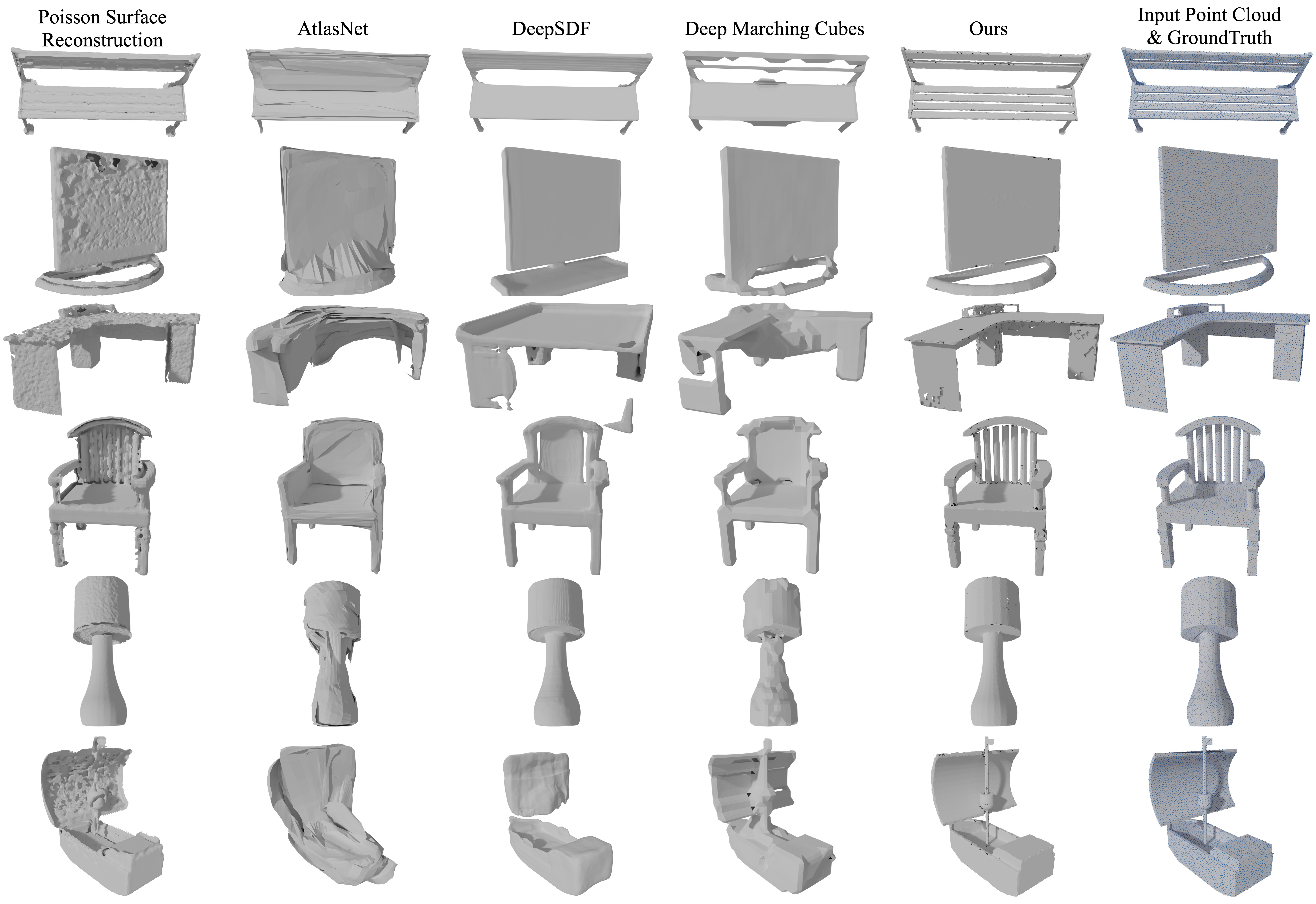

We are interested in reconstructing the mesh representation of object surfaces from point clouds. Surface reconstruction is a prerequisite for downstream applications such as rendering, collision avoidance for planning, and animation. However, the task is challenging when the input point cloud has a low resolution, which is common in real-world scenarios (e.g., data from LiDAR or Kinect sensors). Existing learning-based mesh generative methods mostly predict the surface by first building a shape embedding at the whole-object level, a design that makes it hard to generate fine-grained details and to generalize to unseen categories. Instead, we propose to leverage the input point cloud as much as possible by only adding connectivity information to the existing points. Specifically, we predict which triplets of points should form faces. Our key innovation is a surrogate of local connectivity, calculated by comparing intrinsic and extrinsic metrics. We learn to predict this surrogate using a deep point cloud network and then feed it to an efficient post-processing module for high-quality mesh generation. Experiments on synthetic and real data demonstrate that our method not only preserves details and handles ambiguous structures but also generalizes well to unseen categories. The code is available at https://github.com/Colin97/Point2Mesh.
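To make the idea of comparing intrinsic and extrinsic metrics concrete, the following is a minimal sketch, not the paper's implementation. It assumes the intrinsic distance between two points can be approximated by the shortest path along the edges of a reference mesh, and the extrinsic distance by the straight-line Euclidean distance. The function names (`geodesic_along_edges`, `intrinsic_extrinsic_ratio`) and the toy geometry are hypothetical and chosen only for illustration.

```python
import heapq
import math


def geodesic_along_edges(vertices, edges, src, dst):
    """Approximate intrinsic distance: shortest path over mesh edges (Dijkstra)."""
    adj = {i: [] for i in range(len(vertices))}
    for a, b in edges:
        w = math.dist(vertices[a], vertices[b])  # edge length
        adj[a].append((b, w))
        adj[b].append((a, w))

    best = {src: 0.0}
    heap = [(0.0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == dst:
            return d
        if d > best.get(u, math.inf):
            continue  # stale heap entry
        for v, w in adj[u]:
            nd = d + w
            if nd < best.get(v, math.inf):
                best[v] = nd
                heapq.heappush(heap, (nd, v))
    return math.inf  # dst is unreachable from src


def intrinsic_extrinsic_ratio(vertices, edges, i, j):
    """Ratio of along-surface distance to straight-line distance (assumed >= 1)."""
    extrinsic = math.dist(vertices[i], vertices[j])
    return geodesic_along_edges(vertices, edges, i, j) / extrinsic


# Toy "thin gap": a path that folds back on itself, so vertices 0 and 3
# are close in space but far apart along the surface.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.2, 0.0), (0.0, 0.2, 0.0)]
edges = [(0, 1), (1, 2), (2, 3)]

ratio_neighbors = intrinsic_extrinsic_ratio(vertices, edges, 0, 1)  # directly connected
ratio_across_gap = intrinsic_extrinsic_ratio(vertices, edges, 0, 3)  # across the gap
print(ratio_neighbors, ratio_across_gap)
```

Under these assumptions, a ratio close to 1 suggests that two points are neighbors on the surface, while a large ratio flags a pair that is spatially close but should not be connected, which is the kind of ambiguous structure the abstract refers to.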

ECCV 2020

ShapeNet
