Meta-RangeSeg: LiDAR Sequence Semantic Segmentation Using Multiple Feature Aggregation

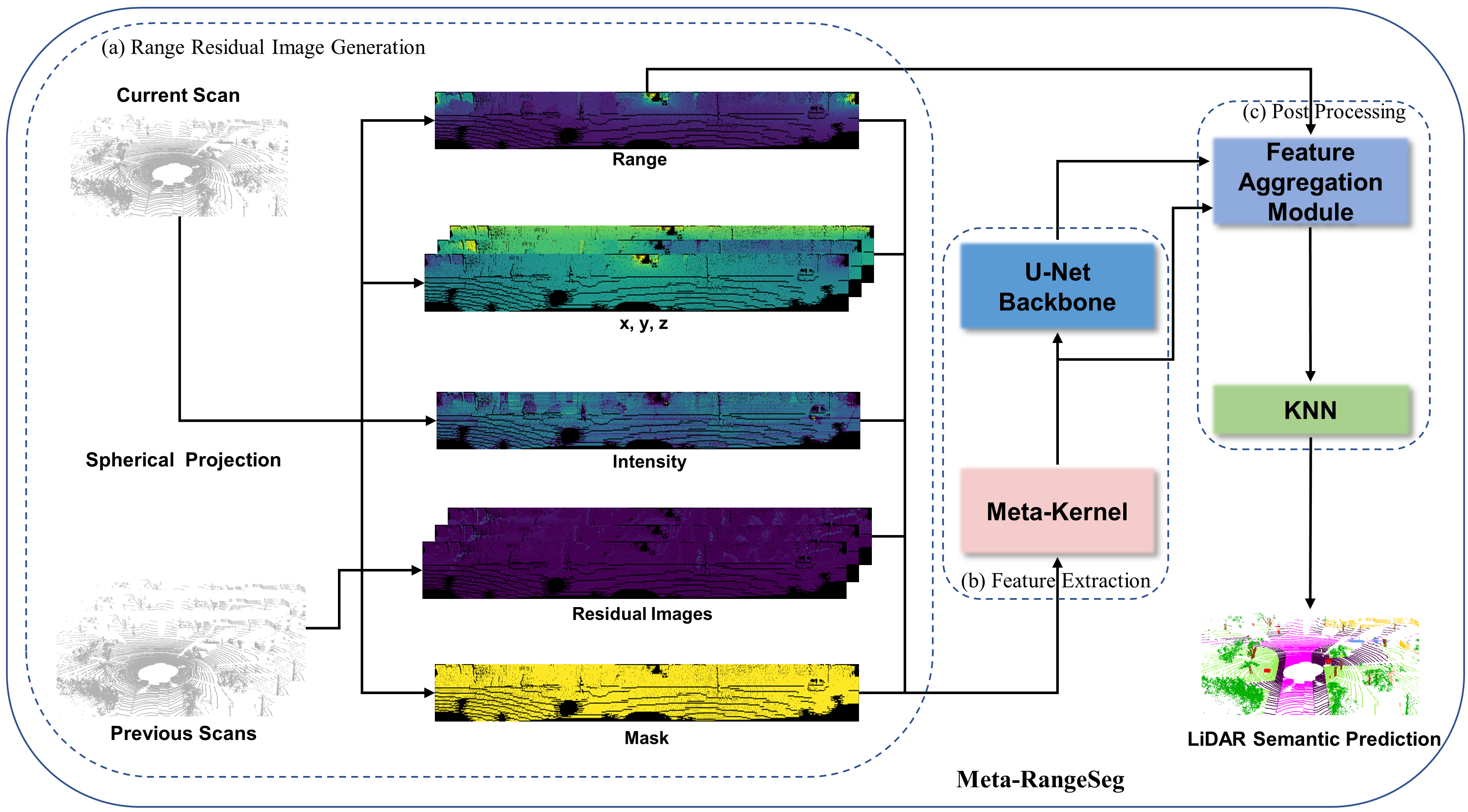

The LiDAR sensor is essential to the perception system in autonomous vehicles and intelligent robots. To fulfill the real-time requirements of real-world applications, LiDAR scans must be segmented efficiently. Most previous approaches directly project the 3D point cloud onto a 2D spherical range image so that they can use efficient 2D convolutional operations for image segmentation. Although these approaches have achieved encouraging results, neighborhood information is not well preserved in the spherical projection. Moreover, temporal information is not taken into consideration in the single-scan segmentation task. To tackle these problems, we propose a novel approach to semantic segmentation for LiDAR sequences named Meta-RangeSeg, in which a new range residual image representation is introduced to capture spatial-temporal information. Specifically, Meta-Kernel is employed to extract the meta features, which reduces the inconsistency between the 2D range image coordinates of the input and the 3D Cartesian coordinates of the output. An efficient U-Net backbone is used to obtain multi-scale features. Furthermore, a Feature Aggregation Module (FAM) strengthens the role of the range channel and aggregates features at different levels. We have conducted extensive experiments for performance evaluation on SemanticKITTI and SemanticPOSS. The promising results show that our proposed Meta-RangeSeg method is more efficient and effective than existing approaches. Our full implementation is publicly available at https://github.com/songw-zju/Meta-RangeSeg .
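The spherical projection and the range residual mentioned in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `spherical_projection` and `range_residual`, the 64 x 2048 image size, and the +3°/-25° vertical field of view are assumptions chosen for illustration, and the normalized absolute difference used for the residual is only one plausible form. The sketch also assumes the previous scan has already been transformed into the current scan's coordinate frame.

```python
import numpy as np


def spherical_projection(points, height=64, width=2048,
                         fov_up_deg=3.0, fov_down_deg=-25.0):
    """Project an (N, 3) point cloud onto a (height, width) range image.

    Image size and field of view are illustrative assumptions,
    not values taken from the abstract.
    """
    fov_up = np.radians(fov_up_deg)
    fov_down = np.radians(fov_down_deg)
    fov = fov_up - fov_down

    # Range of every point; drop points at the sensor origin.
    depth = np.linalg.norm(points, axis=1)
    valid = depth > 0
    points, depth = points[valid], depth[valid]

    yaw = np.arctan2(points[:, 1], points[:, 0])   # horizontal angle
    pitch = np.arcsin(points[:, 2] / depth)        # vertical angle

    # Map angles to pixel coordinates (column u, row v).
    u = 0.5 * (1.0 - yaw / np.pi) * width
    v = (1.0 - (pitch - fov_down) / fov) * height
    u = np.clip(np.floor(u), 0, width - 1).astype(np.int64)
    v = np.clip(np.floor(v), 0, height - 1).astype(np.int64)

    # Write far points first so that the nearest point wins per pixel.
    order = np.argsort(depth)[::-1]
    image = np.full((height, width), -1.0, dtype=np.float32)
    image[v[order], u[order]] = depth[order]
    return image


def range_residual(current, previous):
    """Normalized absolute range difference between two aligned range images.

    Assumed form for illustration; pixels that are empty (-1) in either
    image get a residual of 0.
    """
    valid = (current > 0) & (previous > 0)
    residual = np.zeros_like(current)
    residual[valid] = np.abs(current[valid] - previous[valid]) / current[valid]
    return residual


# Two points share one pixel (the nearer one is kept); one lies to the side.
points = np.array([[10.0, 0.0, 0.0], [0.0, 10.0, 0.0], [5.0, 0.0, 0.0]])
current = spherical_projection(points)
previous = current.copy()
previous[6, 1024] = 10.0  # pretend the object was farther away one scan ago
residual = range_residual(current, previous)
```

Because several 3D points can fall into one pixel and adjacent pixels can hold points that are far apart in 3D, a range image built this way loses part of the neighborhood structure, which is the limitation the abstract points to.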

SemanticKITTI

SemanticPOSS
