Deep Co-Training with Task Decomposition for Semi-Supervised Domain Adaptation

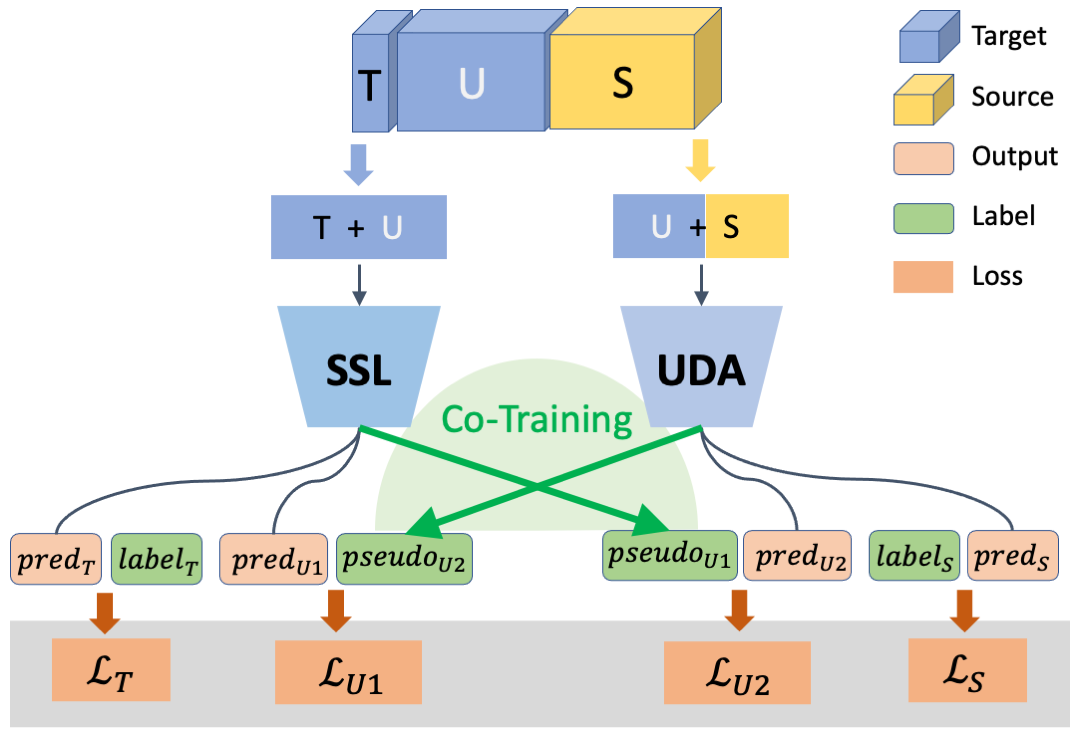

Semi-supervised domain adaptation (SSDA) aims to adapt models trained on a labeled source domain to a different but related target domain, from which unlabeled data and a small set of labeled data are provided. Current methods that treat source and target supervision without distinction overlook their inherent discrepancy, resulting in a source-dominated model that does not effectively use the target supervision. In this paper, we argue that the labeled target data needs to be distinguished for effective SSDA, and propose to explicitly decompose the SSDA task into two sub-tasks: a semi-supervised learning (SSL) task in the target domain and an unsupervised domain adaptation (UDA) task across domains. By doing so, the two sub-tasks can better leverage the corresponding supervision and thus yield very different classifiers. To integrate the strengths of the two classifiers, we apply the well-established co-training framework, in which the two classifiers exchange their high-confidence predictions to iteratively "teach each other" so that both classifiers can excel in the target domain. We call our approach Deep Co-training with Task decomposition (DeCoTa). DeCoTa requires no adversarial training and is easy to implement. Moreover, DeCoTa is well grounded in the theoretical conditions under which co-training succeeds. As a result, DeCoTa achieves state-of-the-art results on several SSDA datasets, outperforming the prior art by a notable 4% margin on DomainNet. Code is available at https://github.com/LoyoYang/DeCoTa
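The exchange of high-confidence predictions described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation (see the linked repository for that); the function names `confident_pseudo_labels` and `exchange`, the threshold value of 0.7, and the toy probabilities are all hypothetical choices made for illustration. The sketch assumes each classifier outputs a list of class probabilities per unlabeled target example, and that a prediction counts as confident when its top probability exceeds the threshold.

```python
def confident_pseudo_labels(probs, threshold):
    """Return (example_index, predicted_class) pairs for confident predictions.

    probs: list of per-example class-probability lists.
    threshold: hypothetical confidence cutoff (an assumption, not from the paper).
    """
    selected = []
    for i, p in enumerate(probs):
        conf = max(p)
        if conf > threshold:
            # Index of the most probable class serves as the pseudo-label.
            selected.append((i, p.index(conf)))
    return selected


def exchange(probs_uda, probs_ssl, threshold=0.7):
    """One co-training exchange step on unlabeled target data.

    Each classifier's confident predictions become pseudo-labels
    for training the *other* classifier.
    """
    for_ssl = confident_pseudo_labels(probs_uda, threshold)  # UDA teaches SSL
    for_uda = confident_pseudo_labels(probs_ssl, threshold)  # SSL teaches UDA
    return for_uda, for_ssl


# Toy predictions on three unlabeled target examples, two classes.
probs_uda = [[0.9, 0.1], [0.55, 0.45], [0.2, 0.8]]
probs_ssl = [[0.6, 0.4], [0.1, 0.9], [0.5, 0.5]]

for_uda, for_ssl = exchange(probs_uda, probs_ssl)
print("pseudo-labels for UDA classifier:", for_uda)
print("pseudo-labels for SSL classifier:", for_ssl)
```

In this toy run the UDA classifier is confident on examples 0 and 2 while the SSL classifier is confident only on example 1, so each receives pseudo-labels on examples where the other is more certain. In a full training loop, this step would be repeated each iteration, with both classifiers updated on the pseudo-labels they receive.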

Published in ICCV 2021.

Office-Home

DomainNet
