Minimum sharpness: Scale-invariant parameter-robustness of neural networks

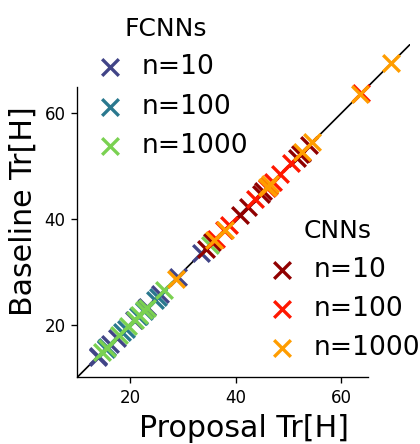

Toward achieving robust and defensive neural networks (NNs), robustness to perturbations of the weight parameters, i.e., sharpness, has attracted attention in recent years (Sun et al., 2020). However, sharpness is known to suffer from a critical issue: scale sensitivity. In this paper, we propose a novel sharpness measure, Minimum Sharpness. It is known that NNs admit a specific scale transformation that defines equivalence classes within which functional properties are completely identical, while the sharpness of the equivalent networks can change without bound. We define our sharpness through a minimization problem over the equivalent NNs, which makes it invariant to the scale transformation. We also develop an efficient and exact technique to make the sharpness tractable, which reduces the heavy computational cost associated with the Hessian. In our experiments, we observed that our sharpness correlates with the generalization of NNs and requires less computation than existing sharpness measures.
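The scale sensitivity described above can be seen in a toy case. The following is a minimal sketch, not the paper's method: it assumes a hypothetical one-hidden-unit ReLU network `f(x) = w2 * relu(w1 * x)`, a squared loss, and the trace of the loss Hessian as a stand-in for sharpness. The names `predict`, `rescale`, `hessian_trace`, and `min_trace_over_scales` are illustrative only. Rescaling `(w1, w2)` to `(alpha * w1, w2 / alpha)` with `alpha > 0` leaves the function unchanged but changes the Hessian trace, and minimizing over `alpha` gives a value that is the same for every network in the equivalence class.

```python
import math

def relu(z):
    return max(z, 0.0)

def predict(w1, w2, x):
    # Toy network (an assumption for illustration): f(x) = w2 * relu(w1 * x)
    return w2 * relu(w1 * x)

def rescale(w1, w2, alpha):
    # Scale transformation with alpha > 0; ReLU is positively homogeneous,
    # so the rescaled network computes the same function.
    return alpha * w1, w2 / alpha

def hessian_trace(w1, w2, xs):
    # For L = mean((f(x) - y)^2) / 2, away from the ReLU kink:
    #   d2L/dw1^2 = w2^2 * m,  d2L/dw2^2 = w1^2 * m,
    # where m is the mean of x^2 over all inputs, counting only active ones.
    m = sum(x * x for x in xs if w1 * x > 0) / len(xs)
    return (w1 ** 2 + w2 ** 2) * m

def min_trace_over_scales(w1, w2, xs):
    # Minimizing (alpha^2 * w1^2 + w2^2 / alpha^2) * m over alpha > 0
    # gives alpha^2 = |w2 / w1| and the minimum 2 * |w1 * w2| * m.
    alpha_star = math.sqrt(abs(w2) / abs(w1))
    return alpha_star, hessian_trace(*rescale(w1, w2, alpha_star), xs)

xs = [1.0, 2.0, -1.0, 3.0]
w1, w2 = 0.5, 4.0
v1, v2 = rescale(w1, w2, 10.0)  # an equivalent network

print("same outputs:", all(math.isclose(predict(w1, w2, x), predict(v1, v2, x)) for x in xs))
print("trace (original):", hessian_trace(w1, w2, xs))
print("trace (rescaled):", hessian_trace(v1, v2, xs))
print("minimum over scales:", min_trace_over_scales(w1, w2, xs)[1],
      min_trace_over_scales(v1, v2, xs)[1])
```

In this sketch the two traces differ although the networks are functionally identical, while the minimized value agrees for both. The closed form exists only because the toy model has a single scale parameter. The measure proposed in the paper, and its efficient computation, are defined in the full text.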
