MiniViT: Compressing Vision Transformers with Weight Multiplexing

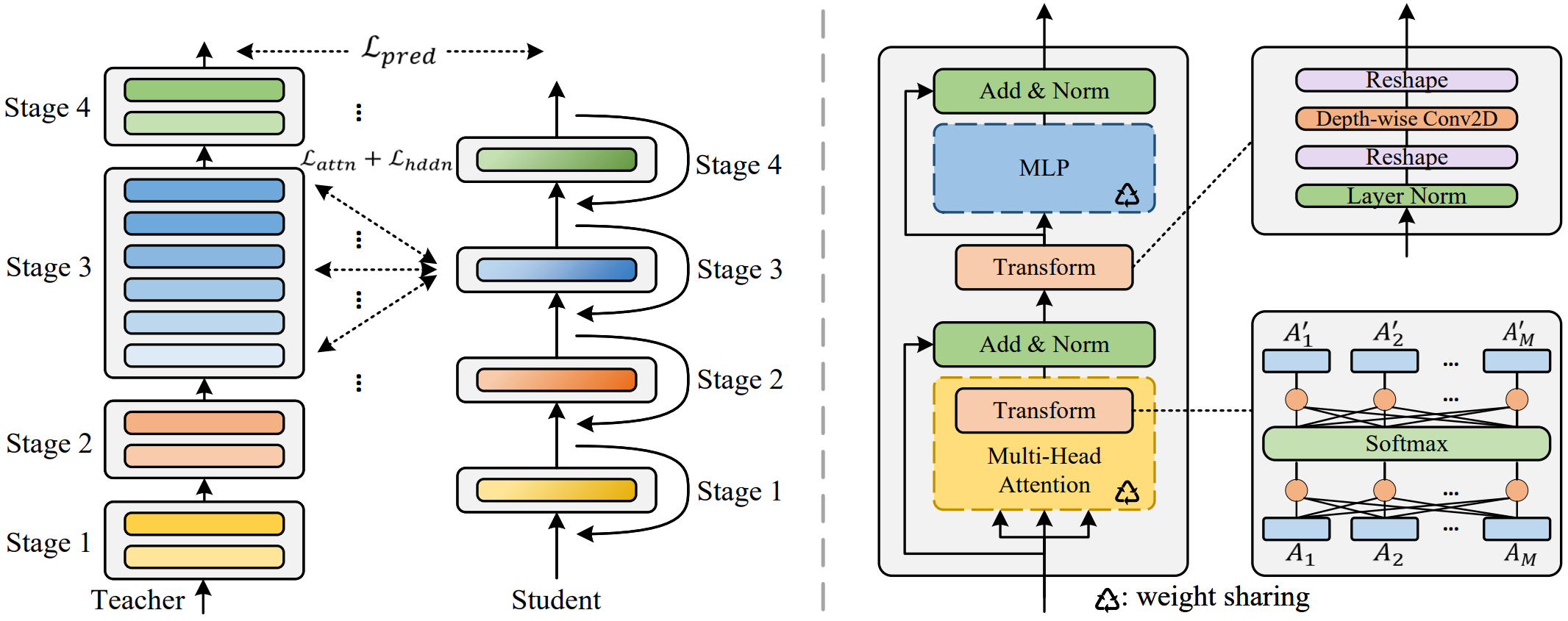

Vision Transformer (ViT) models have recently drawn much attention in computer vision due to their high model capability. However, ViT models suffer from a huge number of parameters, restricting their applicability on devices with limited memory. To alleviate this problem, we propose MiniViT, a new compression framework, which achieves parameter reduction in vision transformers while retaining the same performance. The central idea of MiniViT is to multiplex the weights of consecutive transformer blocks. More specifically, we make the weights shared across layers, while imposing a transformation on the weights to increase diversity. Weight distillation over self-attention is also applied to transfer knowledge from large-scale ViT models to weight-multiplexed compact models. Comprehensive experiments demonstrate the efficacy of MiniViT, showing that it can reduce the size of the pre-trained Swin-B transformer by 48%, while achieving an increase of 1.0% in Top-1 accuracy on ImageNet. Moreover, using a single layer of parameters, MiniViT is able to compress DeiT-B by 9.7 times, from 86M to 9M parameters, without seriously compromising the performance. Finally, we verify the transferability of MiniViT by reporting its performance on downstream benchmarks. Code and models are available here.
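To give a rough sense of why sharing weights across consecutive blocks shrinks the model, the sketch below counts the parameters of a stack of transformer blocks with and without sharing. It is an illustration under stated assumptions, not the authors' implementation: the block layout (fused QKV with bias, MLP ratio 4, two LayerNorms), the width of 768 and depth of 12, and the helper names `block_params` and `stack_params` are all assumptions made here. It counts only the blocks and ignores the patch embedding, the classification head, and the per-layer transformations that MiniViT adds on top of the shared weights, so the totals will not match the figures quoted in the abstract exactly.

```python
def block_params(dim: int, mlp_ratio: int = 4) -> int:
    """Parameter count of one transformer block (assumed standard layout)."""
    attn = 3 * dim * dim + 3 * dim      # fused QKV projection with bias
    attn += dim * dim + dim             # attention output projection
    hidden = mlp_ratio * dim
    mlp = dim * hidden + hidden         # first MLP layer
    mlp += hidden * dim + dim           # second MLP layer
    norms = 2 * (2 * dim)               # two LayerNorms (scale and shift each)
    return attn + mlp + norms


def stack_params(dim: int, depth: int, share_every: int = 1) -> int:
    """Block parameters when every `share_every` consecutive blocks reuse one weight set."""
    groups = -(-depth // share_every)   # ceiling division
    return groups * block_params(dim)


# Assumed configuration: width 768, depth 12.
dim, depth = 768, 12
full = stack_params(dim, depth)                        # no sharing
shared = stack_params(dim, depth, share_every=depth)   # one weight set for all blocks
print(f"unshared blocks: {full / 1e6:.1f}M parameters")
print(f"fully shared:    {shared / 1e6:.1f}M parameters")
```

Under these assumptions the block parameters drop by a factor equal to the depth. The remaining unshared components, plus the extra transformation parameters, are what keep the overall compression ratio below that factor.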

CVPR 2022

Datasets

CIFAR-10

ImageNet

MS COCO

CIFAR-100

Stanford Cars

Results from the Paper

Ranked #209 on Image Classification on ImageNet (using extra training data)