MobiFace: A Novel Dataset for Mobile Face Tracking in the Wild

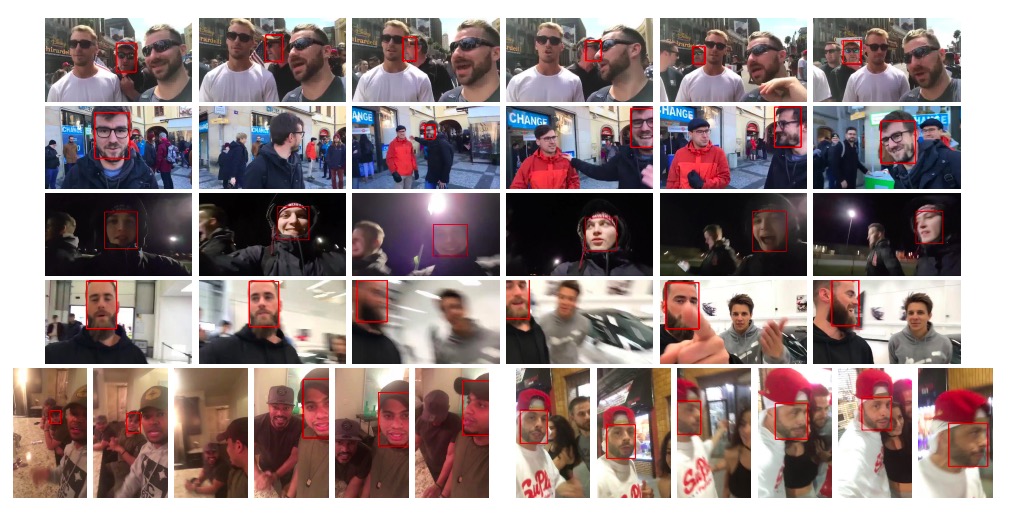

Face tracking serves as the crucial initial step in mobile applications trying to analyse target faces over time in mobile settings. However, this problem has received little attention, mainly due to the scarcity of dedicated face tracking benchmarks. In this work, we introduce MobiFace, the first dataset for single face tracking in mobile situations. It consists of 80 unedited live-streaming mobile videos captured by 70 different smartphone users in fully unconstrained environments. Over $95K$ bounding boxes are manually labelled. The videos are carefully selected to cover typical smartphone usage. The videos are also annotated with 14 attributes, including 6 newly proposed attributes and 8 commonly seen in object tracking. 36 state-of-the-art trackers, including facial landmark trackers, generic object trackers and trackers that we have fine-tuned or improved, are evaluated. The results suggest that mobile face tracking cannot be solved through existing approaches. In addition, we show that fine-tuning on the MobiFace training data significantly boosts the performance of deep learning-based trackers, suggesting that MobiFace captures the unique characteristics of mobile face tracking. Our goal is to offer the community a diverse dataset to enable the design and evaluation of mobile face trackers. The dataset, annotations and the evaluation server will be on \url{https://mobiface.github.io/}.

PDF Abstract

MobiFace

MobiFace

OTB

OTB