ModaNet: A Large-Scale Street Fashion Dataset with Polygon Annotations

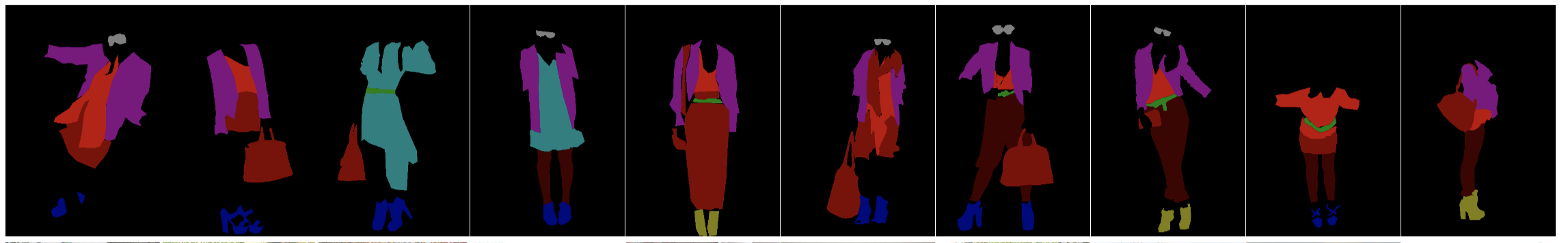

Understanding clothes from a single image has strong commercial and cultural impacts on modern societies. However, this task remains a challenging computer vision problem due to wide variations in the appearance, style, brand and layering of clothing items. We present a new database called ModaNet, a large-scale collection of images based on the Paperdoll dataset. Our dataset provides 55,176 street images, fully annotated with polygons, on top of the 1 million weakly annotated street images in Paperdoll. ModaNet aims to provide a technical benchmark for fairly evaluating progress in applying the latest data-intensive computer vision techniques to fashion understanding. The rich annotation of the dataset makes it possible to measure in detail the performance of state-of-the-art algorithms for object detection, semantic segmentation and polygon prediction on street fashion images. The polygon-based annotation dataset has been released at https://github.com/eBay/modanet, and we also host a leaderboard at EvalAI: https://evalai.cloudcv.org/featured-challenges/136/overview.

ModaNet

ImageNet

ssd

DeepFashion
