How to Incorporate Monotonicity in Deep Networks While Preserving Flexibility?

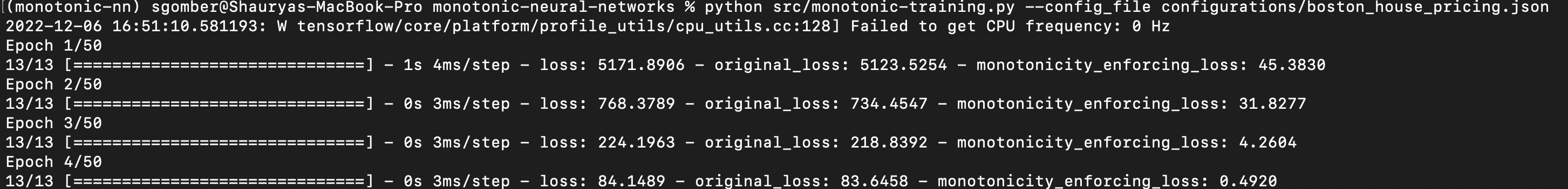

Domain knowledge is critical for improving model performance and making reliable predictions in the real world. This has led to an increased focus on specific model properties for interpretability. We focus on incorporating monotonic trends and propose a novel gradient-based point-wise loss function for enforcing partial monotonicity in deep neural networks. While recent developments have relied on structural changes to the model, our approach aims at enhancing the learning process. Our model-agnostic point-wise loss function acts as a plug-in to the standard loss and penalizes non-monotonic gradients. We demonstrate that the point-wise loss produces comparable (and sometimes better) results on both AUC and a monotonicity measure, compared with state-of-the-art deep lattice networks that guarantee monotonicity. Moreover, it is able to learn differentiated individual trends and produces smoother conditional curves, which are important for personalized decisions, while preserving the flexibility of deep networks.
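
To make the idea of a plug-in penalty on non-monotonic gradients concrete, the following is a minimal sketch and not the paper's actual loss, whose exact form the abstract does not give. It assumes a hinge-style penalty on negative partial derivatives with respect to the features that should be monotonically increasing. The names `partial_derivative`, `monotonicity_penalty`, `combined_loss`, and the `weight` parameter are hypothetical, and central finite differences stand in for the automatic differentiation a deep learning framework would normally provide.

```python
from typing import Callable, Sequence

# A model is any function from a feature vector to a scalar prediction.
Model = Callable[[Sequence[float]], float]


def partial_derivative(model: Model, x: Sequence[float], j: int,
                       eps: float = 1e-5) -> float:
    """Central finite-difference estimate of d model / d x[j] at point x.

    Assumption: used here only to keep the sketch dependency-free; a real
    training loop would obtain this gradient by automatic differentiation.
    """
    up, down = list(x), list(x)
    up[j] += eps
    down[j] -= eps
    return (model(up) - model(down)) / (2.0 * eps)


def monotonicity_penalty(model: Model, points: Sequence[Sequence[float]],
                         increasing_features: Sequence[int],
                         eps: float = 1e-5) -> float:
    """Average, over points, of the summed hinge penalties on negative slopes.

    Each (point, feature) pair contributes max(0, -df/dx_j), so the penalty
    is zero wherever the model is already non-decreasing in feature j.
    """
    total = 0.0
    for x in points:
        for j in increasing_features:
            total += max(0.0, -partial_derivative(model, x, j, eps))
    return total / max(len(points), 1)


def combined_loss(base_loss: float, penalty: float, weight: float = 1.0) -> float:
    """Standard loss plus the weighted monotonicity penalty (hypothetical weighting)."""
    return base_loss + weight * penalty
```

For example, a model that decreases in a feature declared as increasing receives a positive penalty at every evaluated point, while a model that already respects the trend receives none, so the standard loss is left unchanged in that case.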
