Multi-Scale Contrastive Siamese Networks for Self-Supervised Graph Representation Learning

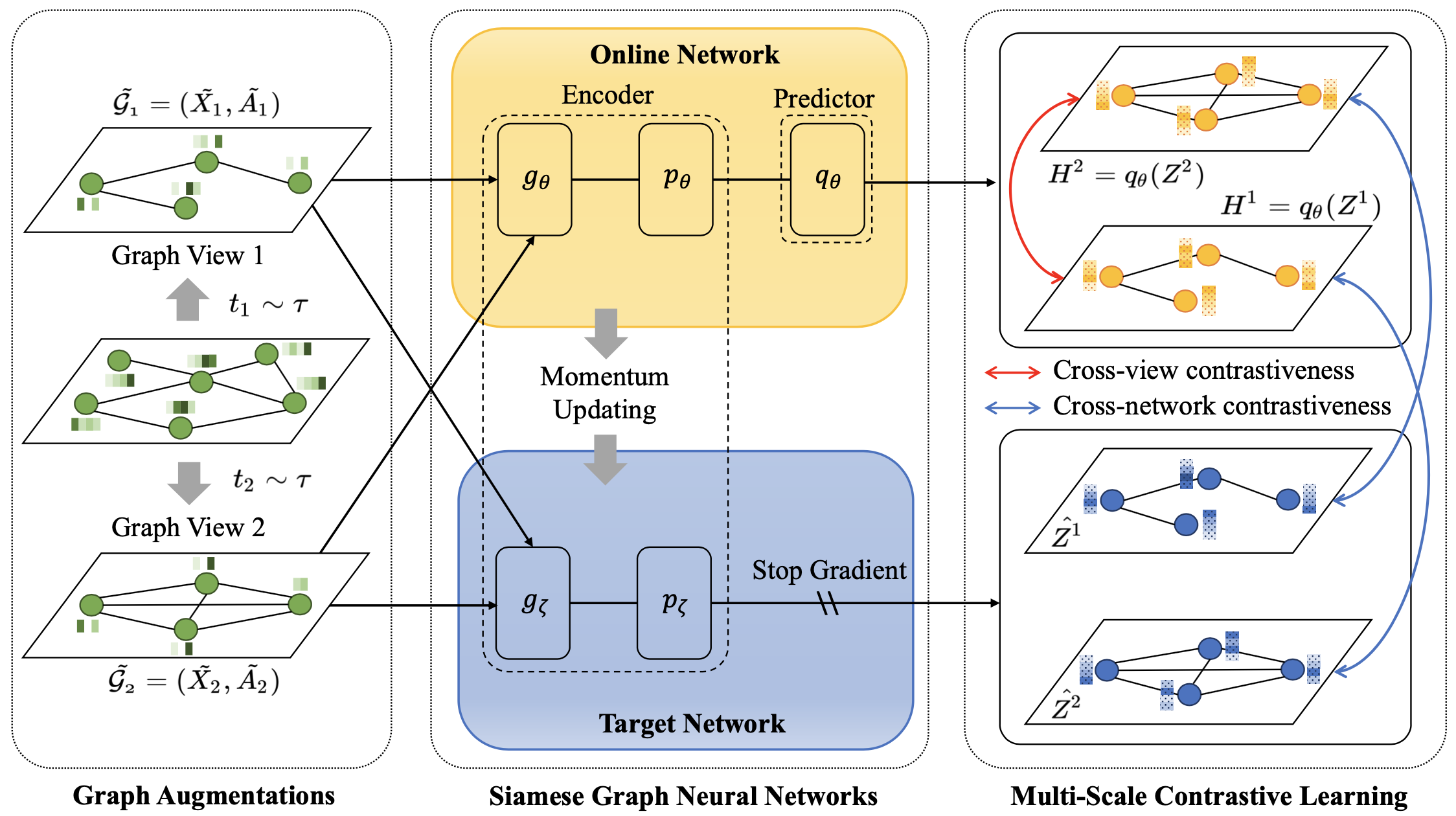

Graph representation learning plays a vital role in processing graph-structured data. However, prior arts on graph representation learning heavily rely on labeling information. To overcome this problem, inspired by the recent success of graph contrastive learning and Siamese networks in visual representation learning, we propose a novel self-supervised approach in this paper to learn node representations by enhancing Siamese self-distillation with multi-scale contrastive learning. Specifically, we first generate two augmented views from the input graph based on local and global perspectives. Then, we employ two objectives called cross-view and cross-network contrastiveness to maximize the agreement between node representations across different views and networks. To demonstrate the effectiveness of our approach, we perform empirical experiments on five real-world datasets. Our method not only achieves new state-of-the-art results but also surpasses some semi-supervised counterparts by large margins. Code is made available at https://github.com/GRAND-Lab/MERIT

PDF Abstract

Cora

Cora