Multi-Task Audio Source Separation

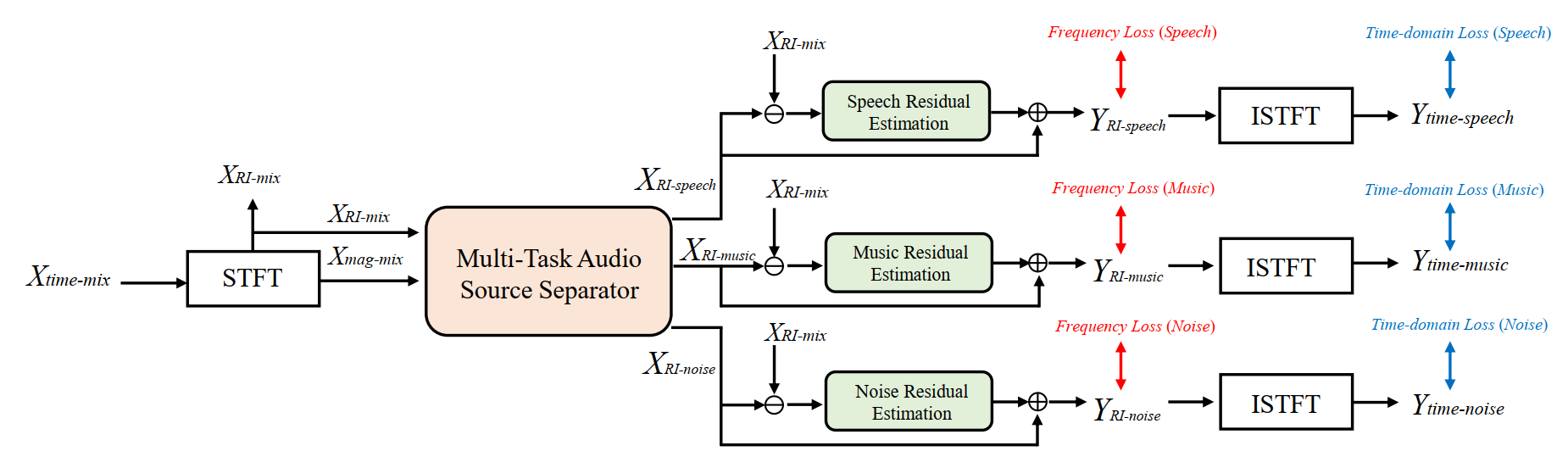

Audio source separation tasks, such as speech enhancement, speech separation, and music source separation, have achieved impressive performance in recent studies. The powerful modeling capabilities of deep neural networks make more challenging tasks feasible. This paper launches a new multi-task audio source separation (MTASS) challenge: separating the speech, music, and noise signals from a monaural mixture. First, we introduce the details of this task and generate a dataset of mixtures containing speech, music, and background noise. Then, we propose an MTASS model in the complex domain that exploits the differences in spectral characteristics among the three audio signals. The proposed model follows a two-stage pipeline: it first separates the three types of audio signals and then performs signal compensation on each of them separately. After comparing different training targets, we select the complex ratio mask as the more suitable target for MTASS. The experimental results also indicate that the residual signal compensation module helps to recover the signals further. The proposed model shows significant advantages in separation performance over several well-known separation models.
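To make the complex ratio mask concrete, the following is a minimal sketch of the ideal (oracle) mask, computed from known sources and applied to a mixture spectrogram. It is not the proposed network, which would have to estimate such masks from the mixture alone. The function names, the array shape, and the random complex arrays standing in for STFTs are all assumptions made for illustration.

```python
import numpy as np


def complex_ratio_mask(mixture_spec, source_spec, eps=1e-12):
    """Ideal complex ratio mask M such that M * mixture ~= source (hypothetical helper)."""
    y_r, y_i = mixture_spec.real, mixture_spec.imag
    s_r, s_i = source_spec.real, source_spec.imag
    # Complex division S / Y written out in real and imaginary parts;
    # eps guards against division by zero in silent bins.
    denom = y_r ** 2 + y_i ** 2 + eps
    m_r = (y_r * s_r + y_i * s_i) / denom
    m_i = (y_r * s_i - y_i * s_r) / denom
    return m_r + 1j * m_i


def apply_mask(mask, mixture_spec):
    """Element-wise complex multiplication of the mask with the mixture spectrogram."""
    return mask * mixture_spec


# Random complex arrays stand in for the STFTs of the three sources
# (assumed shape: frequency bins x time frames).
rng = np.random.default_rng(0)
shape = (64, 20)
sources = {
    name: rng.standard_normal(shape) + 1j * rng.standard_normal(shape)
    for name in ("speech", "music", "noise")
}

# The monaural mixture is the sum of the three sources.
mixture = sum(sources.values())

# One mask per output track, all applied to the same mixture.
masks = {name: complex_ratio_mask(mixture, spec) for name, spec in sources.items()}
estimates = {name: apply_mask(masks[name], mixture) for name in sources}
```

Because the mask is complex-valued, it corrects both the magnitude and the phase of each time-frequency bin, which a real-valued magnitude mask cannot do. In this oracle setting the three masks also sum to approximately one in every bin, since the sources sum to the mixture.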


AISHELL-1
