Multi-Task Graph Autoencoders

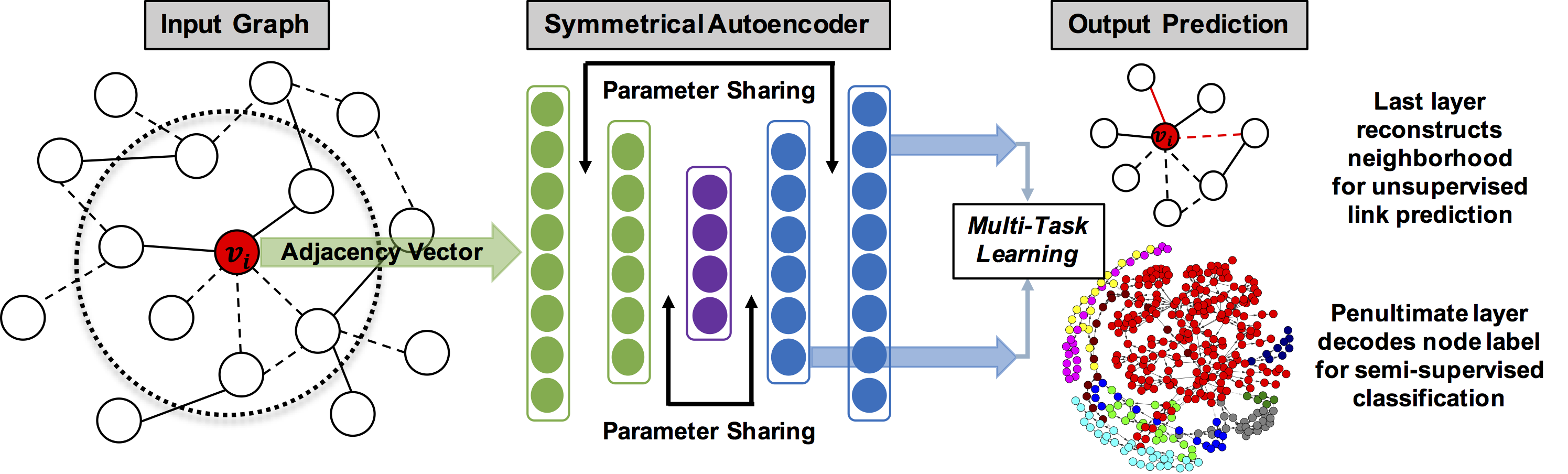

We examine two fundamental tasks associated with graph representation learning: link prediction and node classification. We present a new autoencoder architecture capable of learning a joint representation of local graph structure and available node features for the simultaneous multi-task learning of unsupervised link prediction and semi-supervised node classification. Our simple, yet effective and versatile model is efficiently trained end-to-end in a single stage, whereas previous related deep graph embedding methods require multiple training steps that are difficult to optimize. We provide an empirical evaluation of our model on five benchmark relational, graph-structured datasets and demonstrate significant improvement over three strong baselines for graph representation learning. Reference code and data are available at https://github.com/vuptran/graph-representation-learning
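The multi-task idea described above can be illustrated with a minimal sketch: one shared encoder feeds two output heads, and the two losses are summed so that a single training stage could optimize both. This is not the authors' reference implementation (see the linked repository for that). The function name `multitask_forward`, the parameter names `W_enc`, `W_link`, `W_cls`, the layer sizes, and the toy graph are all assumptions chosen for illustration, and only the forward pass with random, untrained weights is shown.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(x):
    z = x - x.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def multitask_forward(adj, feats, labels, label_mask, params):
    """Hypothetical shared encoder with a link head and a classification head."""
    # Each node's input is its adjacency row concatenated with its features.
    x = np.concatenate([adj, feats], axis=1)
    h = np.maximum(0.0, x @ params["W_enc"])   # shared hidden representation (ReLU)
    adj_hat = sigmoid(h @ params["W_link"])    # link head: reconstructed adjacency rows
    probs = softmax(h @ params["W_cls"])       # class head: per-node class probabilities

    eps = 1e-9
    # Unsupervised link loss: binary cross-entropy over all adjacency entries.
    link_loss = -np.mean(adj * np.log(adj_hat + eps)
                         + (1.0 - adj) * np.log(1.0 - adj_hat + eps))
    # Semi-supervised class loss: cross-entropy over labeled nodes only.
    picked = probs[np.arange(len(labels)), labels]
    cls_loss = -np.sum(label_mask * np.log(picked + eps)) / max(label_mask.sum(), 1.0)
    return link_loss + cls_loss, adj_hat, probs

# Toy graph (assumed data): 4 nodes, 3 features, 2 classes, 2 labeled nodes.
rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
feats = rng.normal(size=(4, 3))
labels = np.array([0, 0, 1, 1])                # entries for unlabeled nodes are ignored
label_mask = np.array([1.0, 0.0, 0.0, 1.0])    # 1.0 marks a labeled node
params = {
    "W_enc": rng.normal(scale=0.1, size=(7, 8)),   # (nodes + features) -> hidden
    "W_link": rng.normal(scale=0.1, size=(8, 4)),  # hidden -> adjacency row
    "W_cls": rng.normal(scale=0.1, size=(8, 2)),   # hidden -> classes
}
loss, adj_hat, probs = multitask_forward(adj, feats, labels, label_mask, params)
print(f"joint loss: {loss:.4f}")
```

Summing the two losses is the simplest way to combine the tasks; a weighting between them, or a different encoder, would be an equally valid variation of this sketch.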


Cora
