Multilevel Wavelet Decomposition Network for Interpretable Time Series Analysis

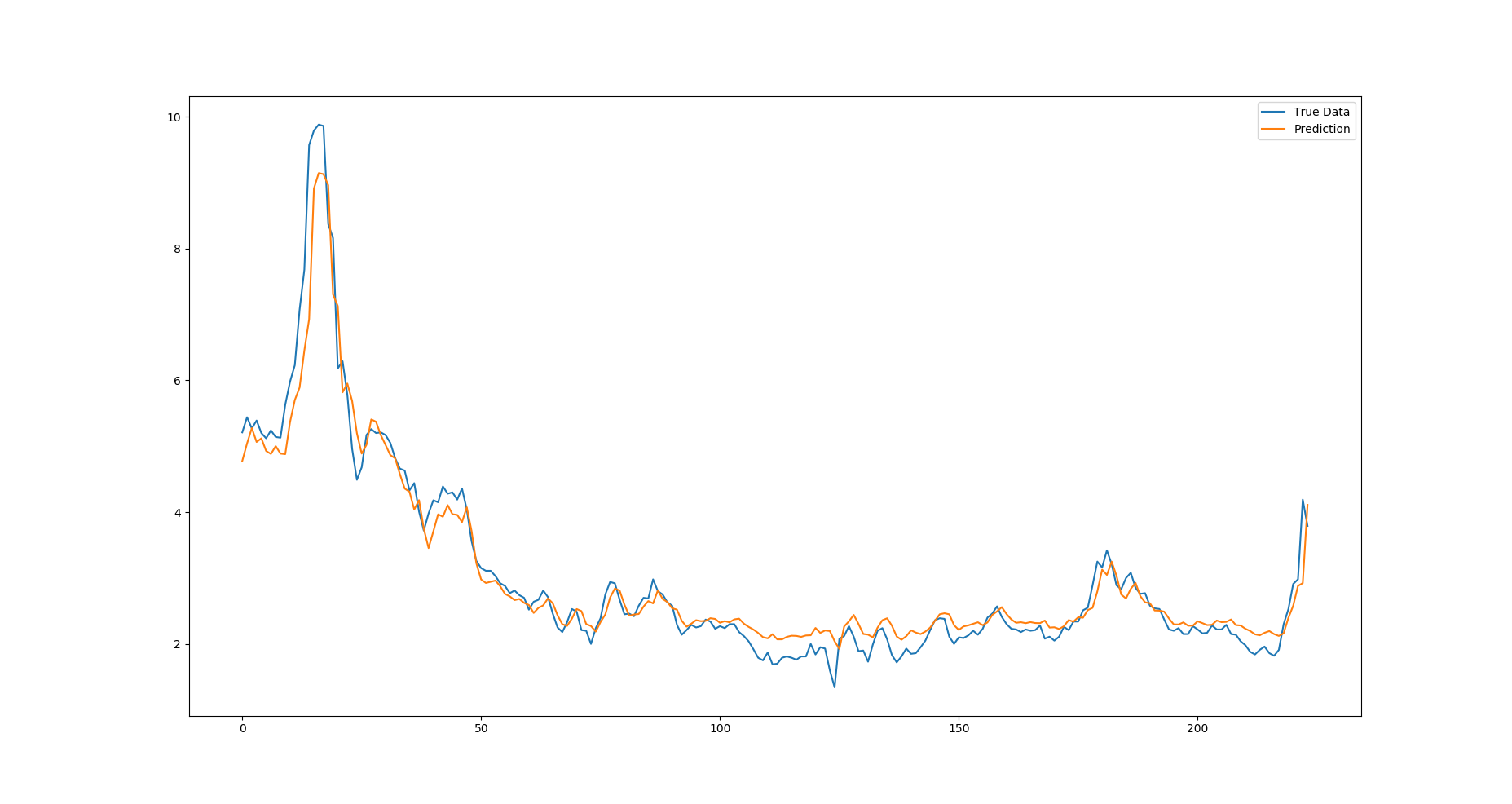

Recent years have witnessed an unprecedented rise in time series data from almost all kinds of academic and industrial fields. Various types of deep neural network models have been introduced to time series analysis, but the important frequency information still lacks effective modeling. In light of this, we propose a wavelet-based neural network structure called the multilevel Wavelet Decomposition Network (mWDN) for building frequency-aware deep learning models for time series analysis. mWDN preserves the advantage of multilevel discrete wavelet decomposition in frequency learning while enabling the fine-tuning of all parameters under a deep neural network framework. Based on mWDN, we further propose two deep learning models called Residual Classification Flow (RCF) and multi-frequency Long Short-Term Memory (mLSTM) for time series classification and forecasting, respectively. The two models take all or some of the mWDN-decomposed sub-series in different frequencies as input, and rely on the back-propagation algorithm to learn all the parameters globally, which enables seamless embedding of wavelet-based frequency analysis into deep learning frameworks. Extensive experiments on 40 UCR datasets and a real-world user volume dataset demonstrate the excellent performance of our time series models based on mWDN. In particular, we propose an importance analysis method for mWDN-based models, which successfully identifies the time-series elements and mWDN layers that are crucially important to time series analysis. This indicates the interpretability advantage of mWDN, and can be viewed as an in-depth exploration of interpretable deep learning.
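
To make the idea of multilevel discrete wavelet decomposition concrete, the following is a minimal sketch of the classical, fixed-filter version that mWDN builds on. It is not the mWDN model itself: the abstract states that mWDN fine-tunes its parameters by back propagation, whereas here the coefficients are fixed. The choice of the Haar wavelet, the function names `haar_step` and `multilevel_haar`, and the sample series are all assumptions made for illustration.

```python
import math


def haar_step(x):
    """One level of Haar decomposition (illustrative helper, not from the paper).

    Returns (low, high): the low-frequency approximation and the
    high-frequency detail, each half the length of x.
    """
    if len(x) % 2 != 0:
        raise ValueError("series length must be even at every level")
    s = 1.0 / math.sqrt(2.0)  # orthonormal Haar scaling factor
    low = [(x[i] + x[i + 1]) * s for i in range(0, len(x), 2)]
    high = [(x[i] - x[i + 1]) * s for i in range(0, len(x), 2)]
    return low, high


def multilevel_haar(x, levels):
    """Decompose x into [detail_1, ..., detail_levels, approx_levels].

    Each level splits the previous approximation again, so later
    entries describe progressively lower frequency bands.
    """
    subseries = []
    approx = list(x)
    for _ in range(levels):
        approx, detail = haar_step(approx)
        subseries.append(detail)
    subseries.append(approx)
    return subseries


# Hypothetical sample series of length 8, decomposed over two levels.
series = [4.0, 6.0, 10.0, 12.0, 8.0, 6.0, 5.0, 5.0]
parts = multilevel_haar(series, levels=2)
```

In this sketch, `parts` holds one sub-series per frequency band. A downstream classifier or forecaster could take all or some of these sub-series as input, which is the role the abstract assigns to RCF and mLSTM.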
