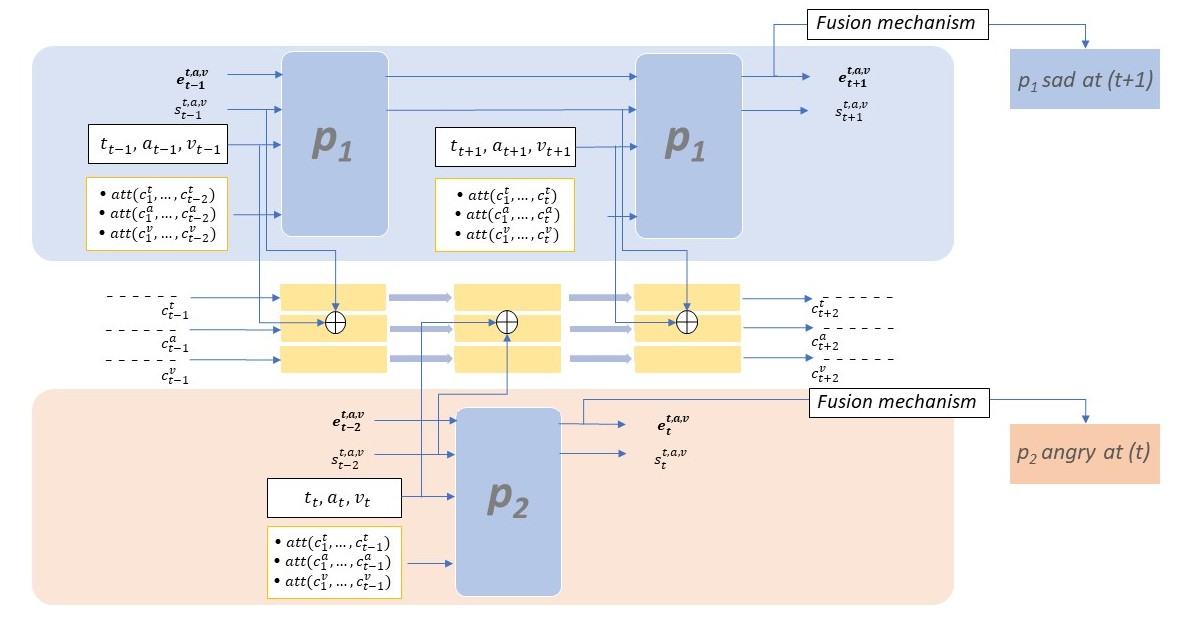

Multilogue-Net: A Context Aware RNN for Multi-modal Emotion Detection and Sentiment Analysis in Conversation

Sentiment analysis and emotion detection in conversation are key to several real-world applications, and the growing number of available modalities can support a better understanding of the underlying emotions. Multi-modal emotion detection and sentiment analysis can be particularly useful, because applications can work with whichever subset of modalities the available data provides. Current multi-modal systems fail to leverage and capture three things: the context of the conversation across all modalities, the dependency between the emotional states of the listener(s) and the speaker, and the relevance of and relationship between the available modalities. In this paper, we propose an end-to-end RNN architecture that attempts to address all of these drawbacks. At the time of writing, our proposed model outperforms the state of the art on a benchmark dataset across a variety of accuracy and regression metrics.
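The abstract does not give Multilogue-Net's update equations, so the following is not the paper's model. It is a toy sketch, assuming plain Python lists as vectors, of the three ideas the abstract names: a global context state updated at every utterance, separate per-party states in which listeners are also updated (more weakly) so that they depend on what the speaker said, and an attention-style softmax over whichever modalities are present. All names (`fuse_modalities`, `rnn_step`, `run_dialogue`), the scalar `relevance` scores and the `listener_mix=0.3` factor are hypothetical; a real model would use learned GRU cells and weight matrices instead of this fixed `tanh` update.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scalar scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_modalities(features: Dict[str, Vector], relevance: Dict[str, float]) -> Vector:
    """Attention-weighted sum over whichever modalities are present.

    `relevance` holds one hypothetical scalar score per modality. Only the
    modalities that actually appear in `features` enter the softmax, so an
    utterance with missing audio or video is still handled.
    """
    names = sorted(features)
    weights = softmax([relevance[name] for name in names])
    dim = len(features[names[0]])
    return [sum(w * features[name][i] for w, name in zip(weights, names)) for i in range(dim)]


def rnn_step(state: Vector, inp: Vector, context: Vector, mix: float) -> Vector:
    """Toy recurrent update h' = tanh(h + mix * (x + c)), standing in for a GRU cell."""
    return [math.tanh(h + mix * (x + c)) for h, x, c in zip(state, inp, context)]


def run_dialogue(
    utterances: List[Tuple[str, Dict[str, Vector]]],
    parties: List[str],
    dim: int,
    relevance: Dict[str, float],
    listener_mix: float = 0.3,
) -> Dict[str, Vector]:
    """Track one global context state plus one emotional state per party."""
    zeros = [0.0] * dim
    context = list(zeros)
    states = {party: list(zeros) for party in parties}
    for speaker, features in utterances:
        fused = fuse_modalities(features, relevance)
        # The global conversation context is updated from every utterance.
        context = rnn_step(context, fused, zeros, 1.0)
        for party in parties:
            # The speaker is updated fully; listeners are updated more weakly,
            # so their states still depend on what the speaker said.
            mix = 1.0 if party == speaker else listener_mix
            states[party] = rnn_step(states[party], fused, context, mix)
    return states


# Toy usage: the second utterance has no audio features at all.
dialogue = [
    ("A", {"text": [0.9, -0.2], "audio": [0.4, 0.1]}),
    ("B", {"text": [-0.5, 0.3]}),
]
final_states = run_dialogue(dialogue, ["A", "B"], dim=2, relevance={"text": 1.0, "audio": 0.2})
print(final_states)
```

Because the softmax runs only over the modalities present in each utterance, the same code handles text-only utterances and text-plus-audio utterances, which mirrors the abstract's point about working with subsets of the available modalities.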

arXiv preprint 2020

Results from the Paper

Ranked #8 on Multimodal Sentiment Analysis on CMU-MOSEI (using extra training data)

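The abstract reports "accuracy and regression metrics" without naming them. On CMU-MOSEI, where sentiment labels are real-valued scores in [-3, 3], papers commonly report mean absolute error (MAE), Pearson correlation, binary accuracy (Acc-2) and 7-class accuracy (Acc-7). The sketch below computes these under one common set of conventions, which is an assumption and not the paper's evaluation script: scores of 0 or above count as positive for Acc-2, and Acc-7 classes come from clipping to [-3, 3] and rounding. Some papers instead exclude neutral (zero) labels from Acc-2. The function names and the prediction/label values are illustrative only, not results from the paper.

```python
import math
from typing import Sequence


def mae(preds: Sequence[float], labels: Sequence[float]) -> float:
    """Mean absolute error between predicted and gold sentiment scores."""
    return sum(abs(p - y) for p, y in zip(preds, labels)) / len(labels)


def pearson_r(preds: Sequence[float], labels: Sequence[float]) -> float:
    """Pearson correlation coefficient between predictions and labels."""
    n = len(labels)
    mean_p = sum(preds) / n
    mean_y = sum(labels) / n
    cov = sum((p - mean_p) * (y - mean_y) for p, y in zip(preds, labels))
    std_p = math.sqrt(sum((p - mean_p) ** 2 for p in preds))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in labels))
    return cov / (std_p * std_y)


def binary_accuracy(preds: Sequence[float], labels: Sequence[float]) -> float:
    """Acc-2 under the assumed convention: score >= 0 is positive, < 0 is negative."""
    hits = sum((p >= 0) == (y >= 0) for p, y in zip(preds, labels))
    return hits / len(labels)


def to_seven_class(score: float) -> int:
    """Clip to [-3, 3] and round to the nearest integer (Python rounds halves to even)."""
    return int(round(min(3.0, max(-3.0, score))))


def seven_class_accuracy(preds: Sequence[float], labels: Sequence[float]) -> float:
    """Acc-7: fraction of items whose clipped, rounded prediction matches the label's class."""
    hits = sum(to_seven_class(p) == to_seven_class(y) for p, y in zip(preds, labels))
    return hits / len(labels)


# Toy values for illustration only.
preds = [2.5, -1.0, 0.4, -2.2]
labels = [3.0, -1.5, -0.2, -2.0]
print(
    f"MAE={mae(preds, labels):.3f}  r={pearson_r(preds, labels):.3f}  "
    f"Acc-2={binary_accuracy(preds, labels):.2f}  Acc-7={seven_class_accuracy(preds, labels):.2f}"
)
```

MAE and Pearson correlation evaluate the raw regression output, while Acc-2 and Acc-7 discretize it first, which is why a single model can be ranked on both kinds of metric.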

Datasets: CMU-MOSEI