Neural Canonical Transformation with Symplectic Flows

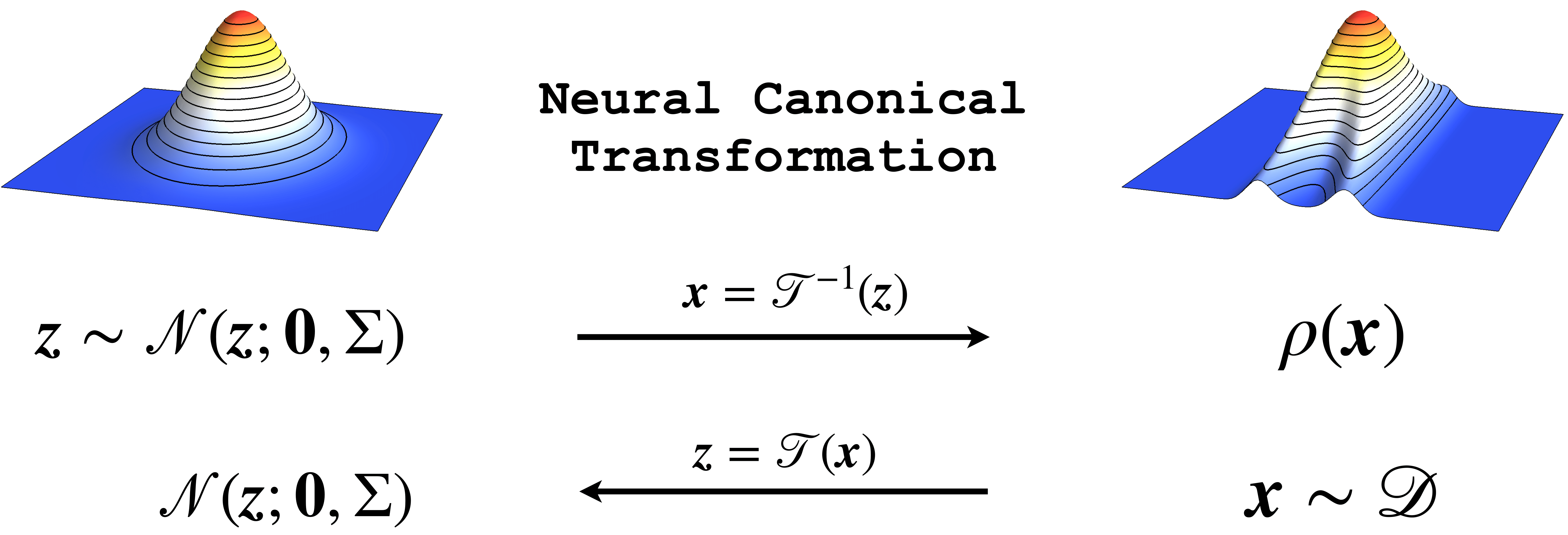

Canonical transformations play a fundamental role in simplifying and solving classical Hamiltonian systems. We construct flexible and powerful canonical transformations as generative models using symplectic neural networks. The model transforms physical variables towards a latent representation with an independent harmonic oscillator Hamiltonian. Correspondingly, the phase space density of the physical system flows towards a factorized Gaussian distribution in the latent space. Since the canonical transformation preserves the Hamiltonian evolution, the model captures nonlinear collective modes in the learned latent representation. We present an efficient implementation of symplectic neural coordinate transformations and two ways to train the model. The variational free energy calculation is based on the analytical form of the physical Hamiltonian, while the phase space density estimation only requires samples in the coordinate space for separable Hamiltonians. We demonstrate appealing features of neural canonical transformations using toy problems, including a two-dimensional ring potential and a harmonic chain. Finally, we apply the approach to real-world problems such as identifying slow collective modes in alanine dipeptide and conceptual compression of the MNIST dataset.
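
To make the notion of a symplectic coordinate transformation concrete, the following is a minimal sketch in plain Python. It is not the architecture of the paper, which the abstract does not specify. It assumes a common construction: alternating shear maps `p -> p - grad V(q)` and `q -> q + grad T(p)`, where `V` and `T` are scalar functions. All names (`grad_potential`, `forward`, `inverse`, `symplectic_defect`), the log-cosh form of the scalar functions, and the parameter values are hypothetical choices for illustration. Because each shear has a symmetric Hessian block in its Jacobian, the composed map `M` should satisfy the symplectic condition `M^T J M = J`, which the sketch checks numerically with finite differences.

```python
import math

def grad_potential(x, weights, biases, amps):
    """Gradient of the scalar V(x) = sum_k amps[k] * log cosh(weights[k] . x + biases[k])."""
    g = [0.0] * len(x)
    for w, b, a in zip(weights, biases, amps):
        t = a * math.tanh(sum(wi * xi for wi, xi in zip(w, x)) + b)
        for i, wi in enumerate(w):
            g[i] += t * wi
    return g

def forward(q, p, layers):
    """Apply alternating shears: p -= grad V(q), then q += grad T(p)."""
    q, p = list(q), list(p)
    for v_params, t_params in layers:
        p = [pi - gi for pi, gi in zip(p, grad_potential(q, *v_params))]
        q = [qi + gi for qi, gi in zip(q, grad_potential(p, *t_params))]
    return q, p

def inverse(q, p, layers):
    """Undo forward() by applying the opposite shears in reverse order."""
    q, p = list(q), list(p)
    for v_params, t_params in reversed(layers):
        q = [qi - gi for qi, gi in zip(q, grad_potential(p, *t_params))]
        p = [pi + gi for pi, gi in zip(p, grad_potential(q, *v_params))]
    return q, p

def jacobian(z, layers, n, eps=1e-6):
    """Central-difference Jacobian of the map z = (q, p) -> (Q, P)."""
    cols = []
    for j in range(2 * n):
        zp, zm = list(z), list(z)
        zp[j] += eps
        zm[j] -= eps
        fq, fp = forward(zp[:n], zp[n:], layers)
        bq, bp = forward(zm[:n], zm[n:], layers)
        cols.append([(a - b) / (2 * eps) for a, b in zip(fq + fp, bq + bp)])
    # cols[j][i] holds dF_i/dz_j, so transpose to get M[i][j].
    return [[cols[j][i] for j in range(2 * n)] for i in range(2 * n)]

def symplectic_defect(M, n):
    """Largest entry of |M^T J M - J| with J = [[0, I], [-I, 0]]."""
    size = 2 * n
    J = [[0.0] * size for _ in range(size)]
    for i in range(n):
        J[i][n + i] = 1.0
        J[n + i][i] = -1.0
    JM = [[sum(J[i][k] * M[k][j] for k in range(size)) for j in range(size)]
          for i in range(size)]
    MtJM = [[sum(M[k][i] * JM[k][j] for k in range(size)) for j in range(size)]
            for i in range(size)]
    return max(abs(MtJM[i][j] - J[i][j]) for i in range(size) for j in range(size))

# Hypothetical fixed parameters (weights, biases, amplitudes) for two layers
# acting on two degrees of freedom; a trained model would learn these.
v_params = ([[0.7, -0.3], [0.2, 0.9]], [0.1, -0.4], [0.5, -0.8])
t_params = ([[-0.6, 0.4], [0.3, 0.5]], [0.0, 0.2], [0.3, 0.6])
layers = [(v_params, t_params), (t_params, v_params)]

q0, p0 = [0.3, -1.2], [0.8, 0.5]
Q, P = forward(q0, p0, layers)
M = jacobian(q0 + p0, layers, n=2)
print("latent variables:", Q, P)
print("symplectic defect:", symplectic_defect(M, 2))
print("round trip:", inverse(Q, P, layers))
```

In this sketch the defect is limited only by finite-difference error, and the inverse is available in closed form because each shear changes one half of the variables using only the other half. A symplectic map also has unit Jacobian determinant, which is what allows a phase space density to be pushed to the latent space without a volume-correction term.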
