Neural Graph Matching for Pre-training Graph Neural Networks

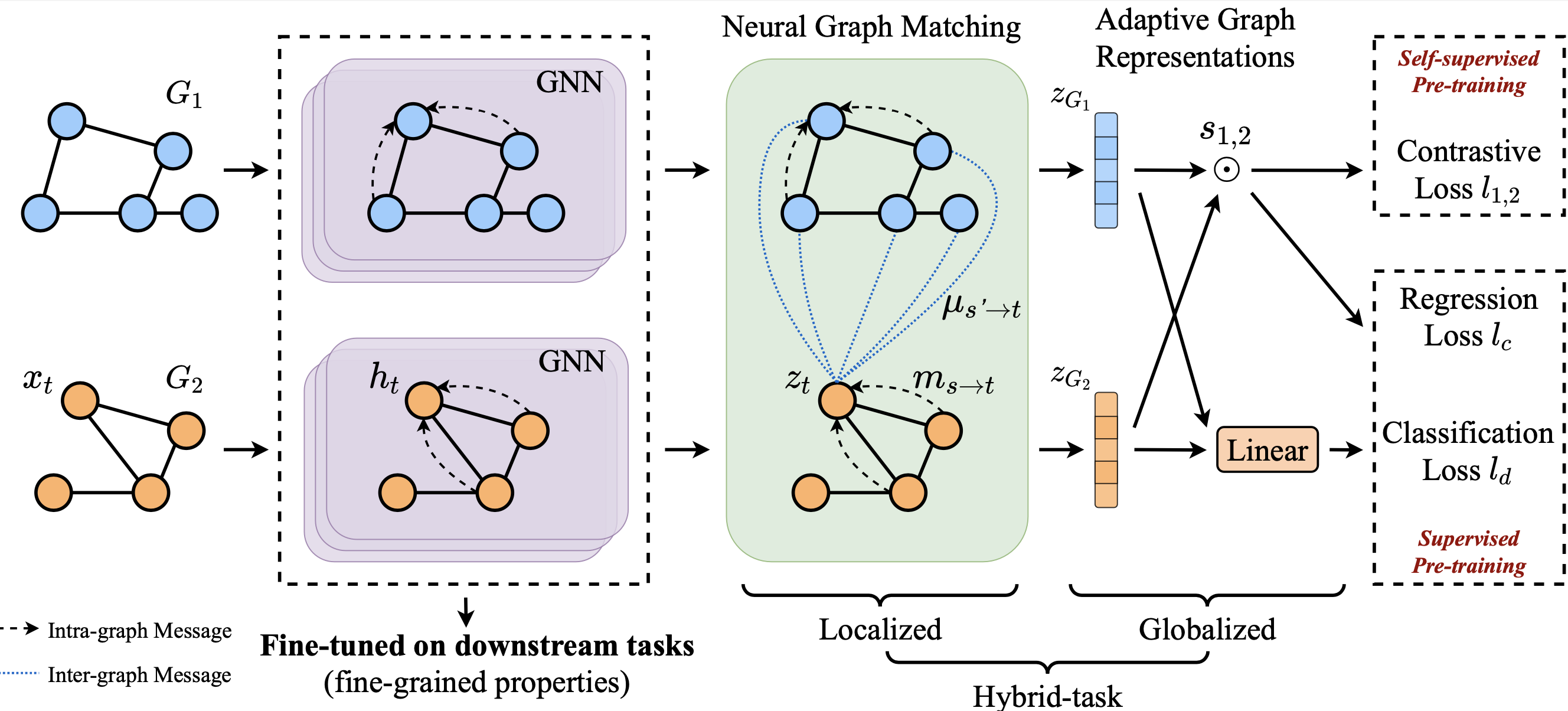

Recently, graph neural networks (GNNs) have shown powerful capacity for modeling structural data. However, when adapted to downstream tasks, they usually require abundant task-specific labeled data, which can be extremely scarce in practice. A promising solution to data scarcity is to pre-train a transferable and expressive GNN model on large amounts of unlabeled graphs or coarse-grained labeled graphs. The pre-trained GNN is then fine-tuned on downstream datasets with task-specific fine-grained labels. In this paper, we present a novel Graph Matching based GNN Pre-Training framework, called GMPT. Focusing on a pair of graphs, we propose to learn structural correspondences between them via neural graph matching, which consists of both intra-graph message passing and inter-graph message passing. In this way, we can learn adaptive representations for a given graph when it is paired with different graphs, and both node- and graph-level characteristics are naturally considered in a single pre-training task. The proposed method can be applied to both fully self-supervised pre-training and coarse-grained supervised pre-training. We further propose an approximate contrastive training strategy to significantly reduce time and memory consumption. Extensive experiments on multi-domain, out-of-distribution benchmarks demonstrate the effectiveness of our approach. The code is available at: https://github.com/RUCAIBox/GMPT.
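
To make the idea of combining intra-graph and inter-graph message passing concrete, the following is a minimal NumPy sketch, not the GMPT implementation. It assumes mean-neighbor aggregation within each graph, dot-product attention across the two graphs, and no learned weights; the function names (`intra_graph_step`, `inter_graph_step`, `match_score`) are hypothetical and chosen only for illustration.

```python
import numpy as np


def softmax(x, axis):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def intra_graph_step(h, adj):
    # Intra-graph message passing (assumed form): each node averages
    # the features of itself and its neighbors.
    a = adj + np.eye(adj.shape[0])
    return (a @ h) / a.sum(axis=1, keepdims=True)


def inter_graph_step(h_a, h_b):
    # Inter-graph message passing (assumed form): each node attends over
    # the nodes of the other graph and receives the difference between
    # its own features and its soft match as a cross-graph message.
    scores = h_a @ h_b.T                     # shape (n_a, n_b)
    attn_ab = softmax(scores, axis=1)        # rows: nodes of A over nodes of B
    attn_ba = softmax(scores.T, axis=1)      # rows: nodes of B over nodes of A
    m_a = h_a - attn_ab @ h_b
    m_b = h_b - attn_ba @ h_a
    return h_a + m_a, h_b + m_b, attn_ab


def match_score(h_a, adj_a, h_b, adj_b, layers=2):
    # Alternate the two kinds of message passing, then compare mean-pooled
    # graph representations with cosine similarity.
    for _ in range(layers):
        h_a, h_b = intra_graph_step(h_a, adj_a), intra_graph_step(h_b, adj_b)
        h_a, h_b, _ = inter_graph_step(h_a, h_b)
    g_a, g_b = h_a.mean(axis=0), h_b.mean(axis=0)
    denom = np.linalg.norm(g_a) * np.linalg.norm(g_b) + 1e-12
    return float(g_a @ g_b / denom)
```

Because the node representations of one graph depend on the graph it is paired with, the same graph yields different representations against different partners, which is the "adaptive representation" property described above. A pairwise score of this kind could serve as the similarity inside a contrastive objective, although the exact loss used by GMPT is not specified here.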
