Novel View Synthesis on Unpaired Data by Conditional Deformable Variational Auto-Encoder

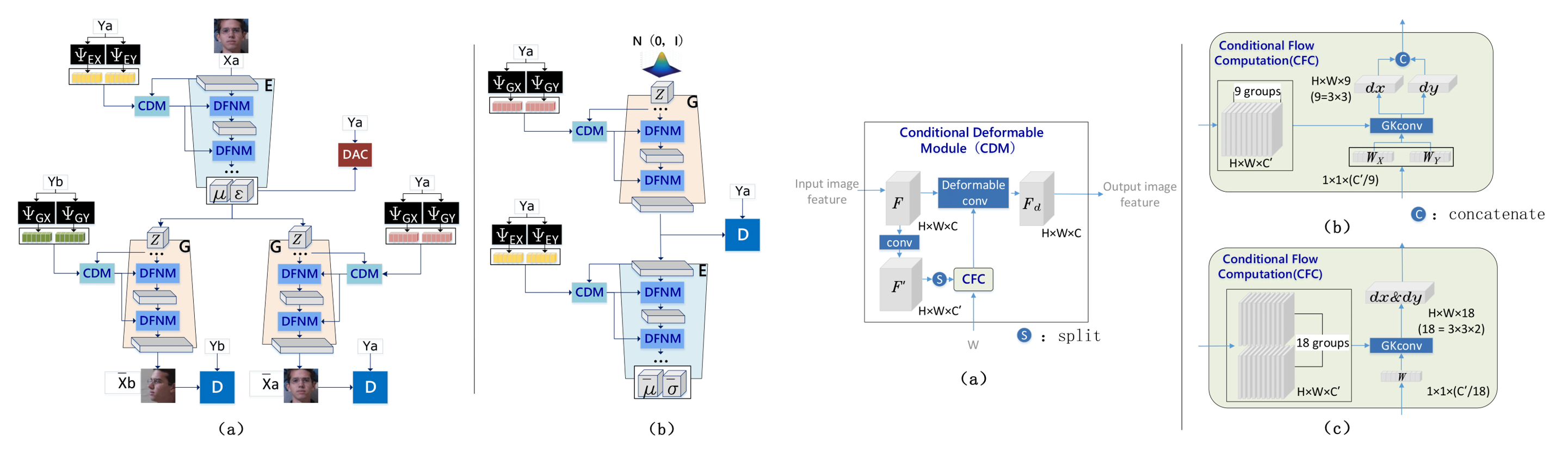

Novel view synthesis often requires paired data from both the source and target views. This paper proposes a view translation model under the cVAE-GAN framework that does not require paired data. We design a conditional deformable module (CDM), which uses the view condition vectors as filters to convolve the feature maps of the main branch in the VAE. It generates several pairs of displacement maps, similar to 2D optical flows, to deform the features. The results are fed into the deformed feature based normalization module (DFNM), which scales and offsets the main branch feature, taking its deformed version as the input from the side branch. Taking advantage of the CDM and DFNM, the encoder outputs a view-irrelevant posterior, while the decoder takes the code drawn from it to synthesize the reconstructed and the view-translated images. To further ensure the disentanglement between the views and other factors, we add adversarial training on the code. The results and ablation studies on the MultiPIE and 3D chair datasets validate the effectiveness of the cVAE framework and the designed modules.
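The CDM and DFNM data flow described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. It assumes the view-conditioned filters act as 1x1 convolutions, produces a single pair of displacement maps rather than several, warps with bilinear sampling, and uses an instance-norm style normalization with linear maps for the scale and offset. All function names (`displacement_from_view`, `warp_bilinear`, `dfnm`) and weight shapes are hypothetical.

```python
import numpy as np


def displacement_from_view(feat, view_filters):
    """CDM step 1 (assumed form): use view-derived filters as 1x1 conv kernels.

    feat: (C, H, W) main-branch features.
    view_filters: (2, C) filters derived from the view condition (assumption).
    Returns a (2, H, W) pair of displacement maps (dx, dy).
    """
    return np.einsum("kc,chw->khw", view_filters, feat)


def warp_bilinear(feat, disp):
    """CDM step 2: deform features with the displacement maps, like a 2D flow."""
    _, H, W = feat.shape
    ys, xs = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    # Sampling positions, clamped to the feature-map border.
    x = np.clip(xs + disp[0], 0, W - 1)
    y = np.clip(ys + disp[1], 0, H - 1)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    x1, y1 = np.minimum(x0 + 1, W - 1), np.minimum(y0 + 1, H - 1)
    wx, wy = x - x0, y - y0
    # Bilinear interpolation between the four neighbouring positions.
    top = feat[:, y0, x0] * (1 - wx) + feat[:, y0, x1] * wx
    bottom = feat[:, y1, x0] * (1 - wx) + feat[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy


def dfnm(main, deformed, w_gamma, w_beta, eps=1e-5):
    """DFNM (assumed form): normalize the main feature per channel, then
    scale and offset it with maps predicted from the deformed feature."""
    mean = main.mean(axis=(1, 2), keepdims=True)
    std = main.std(axis=(1, 2), keepdims=True)
    normed = (main - mean) / (std + eps)
    # Hypothetical 1x1 linear maps from the side branch to scale and offset.
    gamma = np.einsum("oc,chw->ohw", w_gamma, deformed)
    beta = np.einsum("oc,chw->ohw", w_beta, deformed)
    return normed * gamma + beta


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feat = rng.normal(size=(8, 16, 16))
    view_filters = 0.1 * rng.normal(size=(2, 8))  # stands in for a view code
    disp = displacement_from_view(feat, view_filters)
    deformed = warp_bilinear(feat, disp)
    out = dfnm(feat, deformed, rng.normal(size=(8, 8)), rng.normal(size=(8, 8)))
    print(disp.shape, deformed.shape, out.shape)
```

In this sketch a zero displacement leaves the features unchanged, so the view condition alone determines how far the side-branch features move before they modulate the main branch.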

ECCV 2020

Multi-PIE

3D Chairs