On Calibrating Semantic Segmentation Models: Analyses and An Algorithm

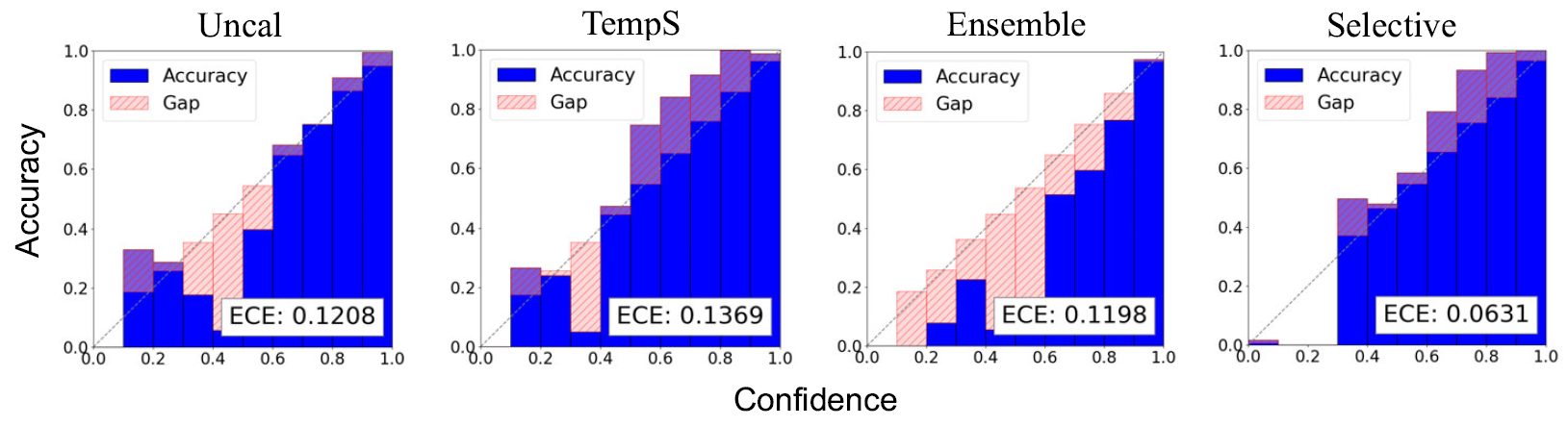

We study the problem of semantic segmentation calibration. Many solutions have been proposed to address confidence miscalibration in image classification, but research on confidence calibration for semantic segmentation remains limited. We provide a systematic study of the calibration of semantic segmentation models and propose a simple yet effective approach. First, we find that model capacity, crop size, multi-scale testing, and prediction correctness all affect calibration. Among these factors, prediction correctness, especially misprediction, is the most important contributor to miscalibration caused by over-confidence. Next, we propose a simple, unifying, and effective approach, namely selective scaling, which separates correct and incorrect predictions for scaling and focuses more on smoothing the logits of mispredictions. Then, we study popular existing calibration methods and compare them with selective scaling on semantic segmentation calibration. We conduct extensive experiments on a variety of benchmarks for both in-domain and domain-shift calibration and show that selective scaling consistently outperforms other methods.
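The selective-scaling idea can be illustrated with a minimal NumPy sketch. It assumes per-pixel logits flattened to shape (N, C), a boolean mask marking the pixels treated as mispredictions, and two temperatures (`t_correct`, `t_wrong`) whose values are hypothetical, not the paper's settings. A standard binned expected calibration error (ECE) is included to show the effect. How the paper obtains the mask in practice is not described here, so the sketch uses an oracle mask derived from the labels purely for illustration.

```python
import numpy as np


def softmax(logits, temperature=1.0):
    """Softmax over the class axis after dividing logits by a temperature.

    `temperature` may be a scalar or an array broadcastable to `logits`
    (e.g. shape (N, 1) for one temperature per pixel).
    """
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def selective_scaling(logits, flagged_wrong, t_correct=1.0, t_wrong=4.0):
    """Per-pixel temperature (illustrative sketch, hypothetical defaults):
    pixels flagged as mispredictions get the larger temperature `t_wrong`,
    which smooths their logits; all other pixels use `t_correct`.

    logits: (N, C) array, N = number of pixels (H * W flattened), C = classes.
    flagged_wrong: (N,) boolean mask of pixels treated as mispredictions.
    """
    temps = np.where(flagged_wrong, t_wrong, t_correct)[:, None]  # shape (N, 1)
    return softmax(logits, temps)


def expected_calibration_error(probs, labels, n_bins=15):
    """Pixel-wise ECE: bin pixels by confidence, then sum |accuracy - confidence|
    over bins, weighted by the fraction of pixels falling in each bin."""
    conf = probs.max(axis=1)
    correct = (probs.argmax(axis=1) == labels).astype(float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    ece = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (conf > lo) & (conf <= hi)
        if in_bin.any():
            ece += in_bin.mean() * abs(correct[in_bin].mean() - conf[in_bin].mean())
    return ece


# Toy example: 4 pixels, 3 classes, all predicted as class 0 with the same logits.
logits = np.array([[4.0, 0.0, 0.0]] * 4)
labels = np.array([0, 0, 1, 2])  # the last two pixels are mispredictions

# Oracle mask from the labels, for illustration only; at test time such a mask
# would have to be predicted (not shown here).
flagged = logits.argmax(axis=1) != labels

ece_before = expected_calibration_error(softmax(logits), labels)
ece_after = expected_calibration_error(selective_scaling(logits, flagged), labels)
print(f"ECE before: {ece_before:.4f}, after selective scaling: {ece_after:.4f}")
# prints: ECE before: 0.4647, after selective scaling: 0.3057
```

Setting `t_wrong` equal to `t_correct` reduces the sketch to ordinary temperature scaling with a single global temperature, a common calibration baseline. The difference in this sketch is that only the flagged pixels are smoothed, so the correct predictions keep their confidence while the over-confident mispredictions are pulled toward lower confidence.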

CVPR 2023

Datasets: Cityscapes, ADE20K, DAVIS, SYNTHIA, BDD100K, DAVIS 2016