On the stability analysis of deep neural network representations of an optimal state-feedback

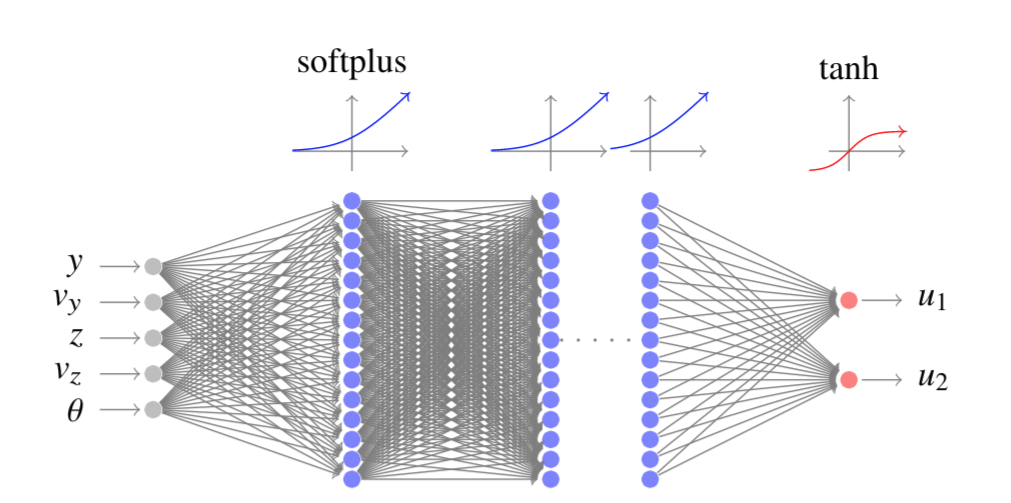

Recent work has shown how the optimal state-feedback, obtained as the solution to the Hamilton-Jacobi-Bellman equations, can be approximated for several nonlinear, deterministic systems by deep neural networks. When imitation (supervised) learning is used to train the neural network on optimal state-action pairs, for instance as derived by applying Pontryagin's theory of optimal processes, the resulting model is referred to here as the guidance and control network. In this work, we analyze the stability of nonlinear and deterministic systems controlled by such networks. We then propose a method utilising differential algebraic techniques and high-order Taylor maps to gain information on the stability of the neurocontrolled state trajectories. We exemplify the proposed methods on the two-dimensional dynamics of a quadcopter controlled to reach the origin, and we study how different architectures of the guidance and control network affect the stability of the target equilibrium point and the stability margins to time delay. Moreover, we show how to study the robustness of a nominal trajectory to its initial conditions, using a Taylor representation of the neighbouring neurocontrolled trajectories.
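To make the idea of checking the stability of a neurocontrolled equilibrium concrete, the following is a minimal sketch, not the method of the paper. It assumes a toy double integrator in place of the quadcopter dynamics and a hypothetical one-hidden-layer tanh network with hand-picked weights in place of a trained guidance and control network. It also uses a finite-difference Jacobian, not the differential algebraic techniques and high-order Taylor maps of the abstract. The names `policy`, `closed_loop` and `jacobian` are illustrative only.

```python
import numpy as np

# Hypothetical stand-in for a trained guidance and control network:
# one hidden tanh layer with hand-picked weights (assumption, not from the paper).
W1 = np.eye(2)
b1 = np.zeros(2)
W2 = np.array([[-2.0, -3.0]])
b2 = np.zeros(1)


def policy(x):
    """State-feedback u(x) given by the small network."""
    return W2 @ np.tanh(W1 @ x + b1) + b2


def closed_loop(x):
    """Toy double integrator (assumption): position' = velocity, velocity' = u(x)."""
    u = policy(x)
    return np.array([x[1], u[0]])


def jacobian(f, x, eps=1e-6):
    """Central finite-difference Jacobian of f at x."""
    n = x.size
    J = np.zeros((n, n))
    for j in range(n):
        dx = np.zeros(n)
        dx[j] = eps
        J[:, j] = (f(x + dx) - f(x - dx)) / (2.0 * eps)
    return J


# The origin is an equilibrium of the closed loop: closed_loop(0) = 0.
x_eq = np.zeros(2)
A = jacobian(closed_loop, x_eq)

# The equilibrium is locally asymptotically stable if every eigenvalue
# of the linearised closed-loop dynamics has a negative real part.
eigvals = np.linalg.eigvals(A)
is_stable = bool(np.all(eigvals.real < 0))
```

For this toy setup the linearisation can be checked by hand: since the derivative of tanh at zero is one, the feedback reduces to u ≈ -2·x₀ - 3·x₁ near the origin, so the characteristic polynomial is λ² + 3λ + 2 and both eigenvalues are real and negative.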
