On the Unreasonable Effectiveness of Centroids in Image Retrieval

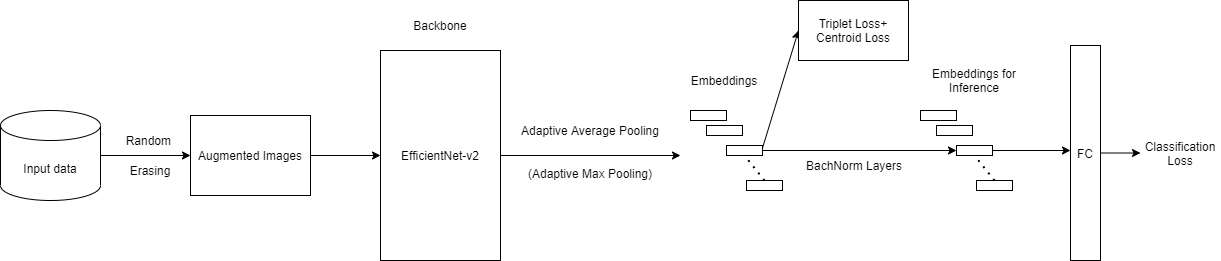

The image retrieval task consists of finding images similar to a query image within a set of gallery (database) images. Such systems are used in various applications, e.g. person re-identification (ReID) or visual product search. Despite the active development of retrieval models, it remains a challenging task, mainly because intra-class variance is large (caused by changes in view angle, lighting, background clutter or occlusion) while inter-class variance may be relatively low. A large portion of current research focuses on creating more robust features and modifying objective functions, usually based on Triplet Loss. Some works experiment with using a centroid/proxy representation of a class to alleviate the computing-speed and hard-sample-mining problems associated with Triplet Loss. However, these approaches are used only for training and are discarded during the retrieval stage. In this paper we propose to use the mean centroid representation both during training and retrieval. Such an aggregated representation is more robust to outliers and yields more stable features. As each class is represented by a single embedding, the class centroid, both retrieval time and storage requirements are reduced significantly. Aggregating multiple embeddings significantly reduces the search space by lowering the number of candidate target vectors, which makes the method especially suitable for production deployments. Comprehensive experiments conducted on two ReID and two Fashion Retrieval datasets demonstrate the effectiveness of our method, which outperforms the current state of the art. We propose centroid training and retrieval as a viable method for both Fashion Retrieval and ReID applications.
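To make the retrieval side of the idea concrete, here is a minimal NumPy sketch of centroid-based retrieval, assuming embeddings have already been extracted by some trained model. The function names `build_centroids` and `retrieve` are hypothetical, and ranking by plain Euclidean distance is an assumption for illustration, not necessarily the distance used in the paper.

```python
import numpy as np

def build_centroids(embeddings: np.ndarray, labels: np.ndarray):
    """Collapse all gallery embeddings of each class into their mean (the class centroid)."""
    classes = np.unique(labels)  # sorted class ids
    centroids = np.stack([embeddings[labels == c].mean(axis=0) for c in classes])
    return centroids, classes

def retrieve(query: np.ndarray, centroids: np.ndarray, classes: np.ndarray, k: int = 5):
    """Return the k classes whose centroids are nearest to the query.

    Assumption: Euclidean distance; a real system might normalize embeddings
    or use cosine similarity instead.
    """
    dists = np.linalg.norm(centroids - query, axis=1)
    order = np.argsort(dists)[:k]
    return classes[order], dists[order]

# Toy gallery: six 2-D embeddings belonging to three classes.
gallery = np.array([[0.0, 0.0], [0.0, 2.0],
                    [10.0, 10.0], [10.0, 12.0],
                    [-10.0, 0.0], [-10.0, 2.0]])
gallery_labels = np.array([0, 0, 1, 1, 2, 2])

centroids, classes = build_centroids(gallery, gallery_labels)
print(centroids.shape)  # (3, 2): three vectors to search instead of six

top_classes, top_dists = retrieve(np.array([1.0, 1.0]), centroids, classes, k=2)
print(top_classes)      # [0 2]
```

The search space shrinks from the number of gallery images to the number of classes, which is the source of the retrieval-time and storage savings the abstract describes; the saving grows with the number of images per class.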


| Task | Dataset | Model | Metric | Value (%) | Global Rank |
|---|---|---|---|---|---|
| Image Retrieval | DeepFashion - Consumer-to-shop | CTL Model (ResNet50-IBN-A, 320x320) | mAP | 49.2 | #1 |
| | | | Rank-1 | 37.3 | #2 |
| | | | Rank-10 | 71.2 | #1 |
| | | | Rank-20 | 77.7 | #1 |
| | | | Rank-50 | 85.0 | #1 |
| Image Retrieval | DeepFashion - Consumer-to-shop | CTL Model (ResNet50, 256x128) | mAP | 40.4 | #3 |
| | | | Rank-1 | 29.4 | #3 |
| | | | Rank-10 | 61.3 | #3 |
| | | | Rank-20 | 68.9 | #3 |
| | | | Rank-50 | 77.4 | #3 |
| Person Re-Identification | DukeMTMC-reID | CTL Model (ResNet50, 256x128) | Rank-1 | 95.6 | #1 |
| | | | Rank-5 | 96.2 | #2 |
| | | | Rank-10 | 97.9 | #1 |
| | | | mAP | 96.1 | #2 |
| Image Retrieval | Exact Street2Shop | CTL Model (ResNet50-IBN-A, 320x320) | mAP | 59.8 | #1 |
| | | | Rank-1 | 53.7 | #1 |
| | | | Rank-10 | 70.9 | #1 |
| | | | Rank-20 | 75.0 | #1 |
| | | | Rank-50 | 79.2 | #1 |
| Image Retrieval | Exact Street2Shop | CTL Model (ResNet50, 256x128) | mAP | 49.8 | #2 |
| | | | Rank-1 | 43.2 | #3 |
| | | | Rank-10 | 61.9 | #3 |
| | | | Rank-20 | 66.0 | #3 |
| | | | Rank-50 | 72.1 | #2 |
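
The table reports mAP and Rank-k scores. The following is a minimal NumPy sketch of the usual definitions of these metrics, computed from a query-by-gallery distance matrix: Rank-k is the fraction of queries with at least one correct match among the k nearest gallery items, and mAP is the mean over queries of average precision. The function name `rank_k_and_map` is hypothetical and this is not the paper's evaluation code; in particular, the standard Market-1501 and DukeMTMC-reID protocols also discard gallery images of the same identity captured by the same camera as the query, which this sketch omits.

```python
import numpy as np

def rank_k_and_map(dist: np.ndarray, q_labels: np.ndarray, g_labels: np.ndarray, ks=(1, 10)):
    """Compute Rank-k accuracy and mAP from a (num_queries, num_gallery) distance matrix."""
    order = np.argsort(dist, axis=1)                # gallery indices, nearest first
    matches = g_labels[order] == q_labels[:, None]  # True where the ranked item is correct
    matches = matches[matches.any(axis=1)]          # skip queries with no correct item (AP undefined)

    # Rank-k: share of queries with a correct item somewhere in the top k.
    rank_k = {k: float(matches[:, :k].any(axis=1).mean()) for k in ks}

    # Average precision: mean of precision@i taken at every position i holding a correct item.
    cum_hits = np.cumsum(matches, axis=1)
    positions = np.arange(1, matches.shape[1] + 1)
    precision_at_hits = (cum_hits / positions) * matches
    ap = precision_at_hits.sum(axis=1) / matches.sum(axis=1)
    return rank_k, float(ap.mean())

# Two queries (labels 0 and 1) against a three-item gallery (labels 0, 1, 0).
dist = np.array([[0.5, 0.1, 0.9],
                 [0.2, 0.3, 0.1]])
rank_k, mean_ap = rank_k_and_map(dist, np.array([0, 1]), np.array([0, 1, 0]), ks=(1, 2, 3))
print(rank_k)   # {1: 0.0, 2: 0.5, 3: 1.0}
print(mean_ap)  # approximately 0.458 (= 11/24)
```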

Market-1501

DeepFashion

DukeMTMC-reID