Online Multiple Pedestrians Tracking using Deep Temporal Appearance Matching Association

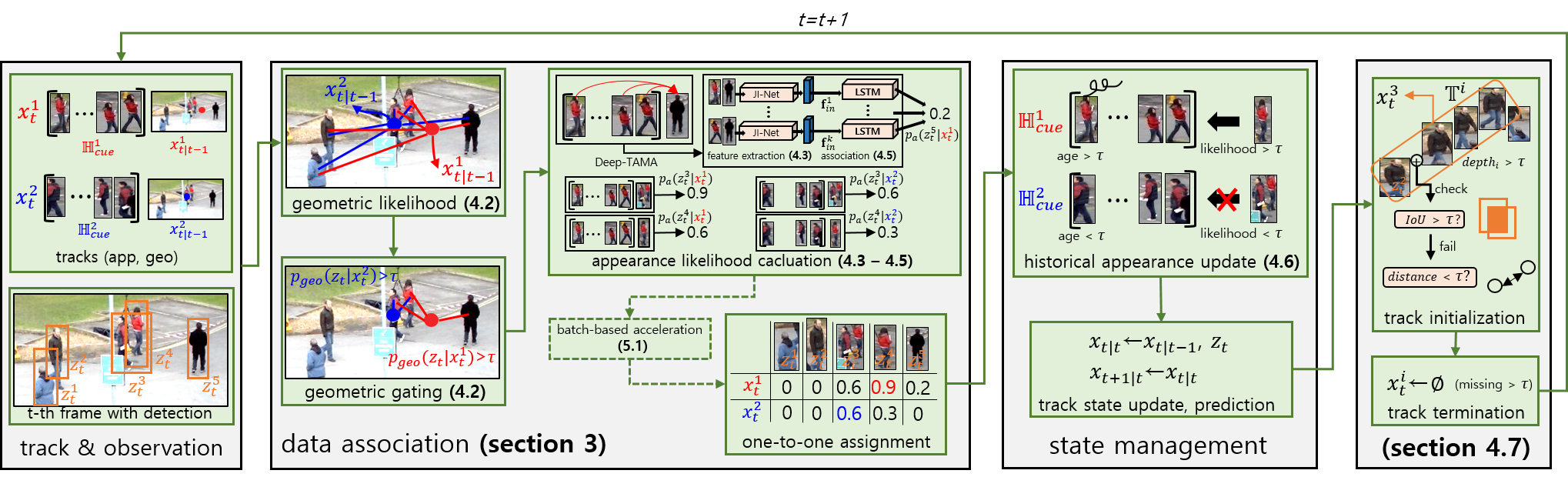

In online multi-target tracking, modeling the appearance and geometric similarities between pedestrians in visual scenes is of great importance. The appearance model carries inherently higher-dimensional information than the geometric model, which makes it harder to handle. However, owing to the recent success of deep-learning-based methods, handling high-dimensional appearance information has become feasible. Among the many deep neural networks, the Siamese network with triplet loss has been widely adopted as an effective appearance feature extractor. Since the Siamese network extracts the features of each input independently, one can update and maintain target-specific features. However, it is not suitable for multi-target settings that require comparison with other inputs. To address this issue, we propose a novel track appearance model based on the joint-inference network. The proposed method enables a comparison of two inputs to be used for adaptive appearance modeling, and it contributes to the disambiguation of target-observation matching and to the consolidation of identity consistency. Diverse experimental results support the effectiveness of our method. Our work was recognized as the 3rd-best tracker in BMTT MOTChallenge 2019, held at CVPR 2019. The code is available at https://github.com/yyc9268/Deep-TAMA.
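
For readers unfamiliar with the triplet loss mentioned above, the following is a minimal sketch of the standard margin-based formulation, not the authors' training code. The function names, the toy 2-D embeddings, and the margin value of 0.2 are all assumptions made for illustration; some formulations use squared distances instead.

```python
import math

def euclidean(u, v):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Standard margin-based triplet loss (hypothetical helper).

    The loss is zero once the negative is farther from the anchor than
    the positive by at least `margin`; otherwise it is the shortfall.
    """
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)

# Toy embeddings (assumed values): same-identity pair vs. a different identity.
anchor = [0.0, 0.0]
positive = [0.0, 1.0]
easy_negative = [3.0, 4.0]   # far from the anchor, so no penalty
hard_negative = [0.0, 0.5]   # closer than the positive, so penalized

easy = triplet_loss(anchor, positive, easy_negative)
hard = triplet_loss(anchor, positive, hard_negative)
```

Note that each embedding here is computed independently of the others, which is the property the abstract attributes to Siamese feature extractors; a joint-inference network would instead take both inputs together and score the pair directly.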

MOT17

MOTChallenge

MOT16
