Parameterized Hypercomplex Graph Neural Networks for Graph Classification

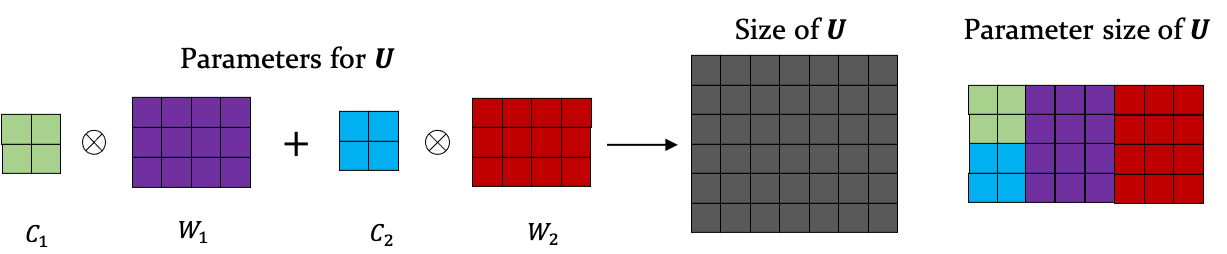

Despite recent advances in representation learning in hypercomplex (HC) space, this subject remains largely unexplored in the context of graphs. Motivated by the complex and quaternion algebras, which have been found in several contexts to enable effective representation learning that inherently incorporates a weight-sharing mechanism, we develop graph neural networks that leverage the properties of hypercomplex feature transformation. In particular, in our proposed class of models, the multiplication rule specifying the algebra itself is inferred from the data during training. Given a fixed model architecture, we present empirical evidence that our proposed model has a regularization effect, alleviating the risk of overfitting. We also show that, for fixed model capacity, our proposed method outperforms its corresponding real-valued GNN, providing additional confirmation of the enhanced expressivity of HC embeddings. Finally, we test our proposed hypercomplex GNN on several Open Graph Benchmark datasets and show that our models reach state-of-the-art performance with a much smaller memory footprint, using 70% fewer parameters. Our implementations are available at https://github.com/bayer-science-for-a-better-life/phc-gnn.
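To make "the multiplication rule is inferred from the data" concrete, the following is a minimal sketch assuming the parameterized hypercomplex multiplication (PHM) formulation, in which a weight matrix is built as a sum of Kronecker products, $W = \sum_{i=1}^{n} A_i \otimes S_i$. Each $A_i \in \mathbb{R}^{n \times n}$ is a learnable matrix that plays the role of the algebra's multiplication rule, and each $S_i$ is a learnable block of size $(d_\text{out}/n) \times (d_\text{in}/n)$. All function names (`kron`, `phm_weight`, `make_phm`, `param_counts`) are hypothetical illustrations rather than the repository's API. The sketch omits training, bias terms, and the graph message-passing layers; it shows only how one PHM weight matrix is assembled and why its parameter count scales roughly as $1/n$.

```python
import random


def kron(a, b):
    """Kronecker product of two matrices stored as lists of lists."""
    rows_a, cols_a = len(a), len(a[0])
    rows_b, cols_b = len(b), len(b[0])
    out = [[0.0] * (cols_a * cols_b) for _ in range(rows_a * rows_b)]
    for i in range(rows_a):
        for j in range(cols_a):
            for p in range(rows_b):
                for q in range(cols_b):
                    out[i * rows_b + p][j * cols_b + q] = a[i][j] * b[p][q]
    return out


def phm_weight(rule_mats, block_mats):
    """Assemble W = sum_i A_i (x) S_i from rule matrices A_i and blocks S_i."""
    w = None
    for a, s in zip(rule_mats, block_mats):
        term = kron(a, s)
        if w is None:
            w = term
        else:
            # Element-wise sum of the accumulated matrix and the new term.
            w = [[x + y for x, y in zip(r1, r2)] for r1, r2 in zip(w, term)]
    return w


def phm_linear(x, w):
    """Apply the assembled weight to a feature vector: y = W x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def random_matrix(rows, cols, rng):
    """Small Gaussian initialization (an arbitrary choice for this sketch)."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def make_phm(n, d_in, d_out, seed=0):
    """Create n rule matrices (n x n) and n blocks (d_out/n x d_in/n)."""
    if d_in % n or d_out % n:
        raise ValueError("d_in and d_out must be divisible by n")
    rng = random.Random(seed)
    rule = [random_matrix(n, n, rng) for _ in range(n)]
    blocks = [random_matrix(d_out // n, d_in // n, rng) for _ in range(n)]
    return rule, blocks


def param_counts(n, d_in, d_out):
    """Return (real-valued weight count, PHM weight count), ignoring biases."""
    real = d_in * d_out
    # n rule matrices of n*n entries, plus n blocks of (d_in*d_out)/n^2 entries.
    phm = n ** 3 + (d_in * d_out) // n
    return real, phm


if __name__ == "__main__":
    rule, blocks = make_phm(n=4, d_in=8, d_out=8)
    w = phm_weight(rule, blocks)
    print(len(w), len(w[0]))
    print(param_counts(4, 512, 512))
```

For a hypothetical 512 × 512 layer with $n = 4$, `param_counts` gives 262,144 real-valued weights versus 65,600 PHM weights, roughly 75% fewer. This is plain arithmetic for an illustrative layer size, not the configuration reported in the paper. With $n = 1$ and $A_1 = [1]$, the construction reduces to an ordinary real-valued linear layer, which is the natural baseline for the "corresponding real-valued GNN" comparison.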


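| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Benchmark |
|---|---|---|---|---|---|---|
| Graph Property Prediction | ogbg-molhiv | PHC-GNN | Test ROC-AUC | 0.7934 ± 0.0116 | #23 | OGB |
| Graph Property Prediction | ogbg-molhiv | PHC-GNN | Validation ROC-AUC | 0.8217 ± 0.0089 | #29 | OGB |
| Graph Property Prediction | ogbg-molhiv | PHC-GNN | Number of params | 110909 | #35 | OGB |
| Graph Property Prediction | ogbg-molhiv | PHC-GNN | Ext. data | No | #1 | OGB |
| Graph Property Prediction | ogbg-molpcba | PHC-GNN | Test AP | 0.2947 ± 0.0026 | #15 | OGB |
| Graph Property Prediction | ogbg-molpcba | PHC-GNN | Validation AP | 0.3068 ± 0.0025 | #11 | OGB |
| Graph Property Prediction | ogbg-molpcba | PHC-GNN | Number of params | 1690328 | #28 | OGB |
| Graph Property Prediction | ogbg-molpcba | PHC-GNN | Ext. data | No | #1 | OGB |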