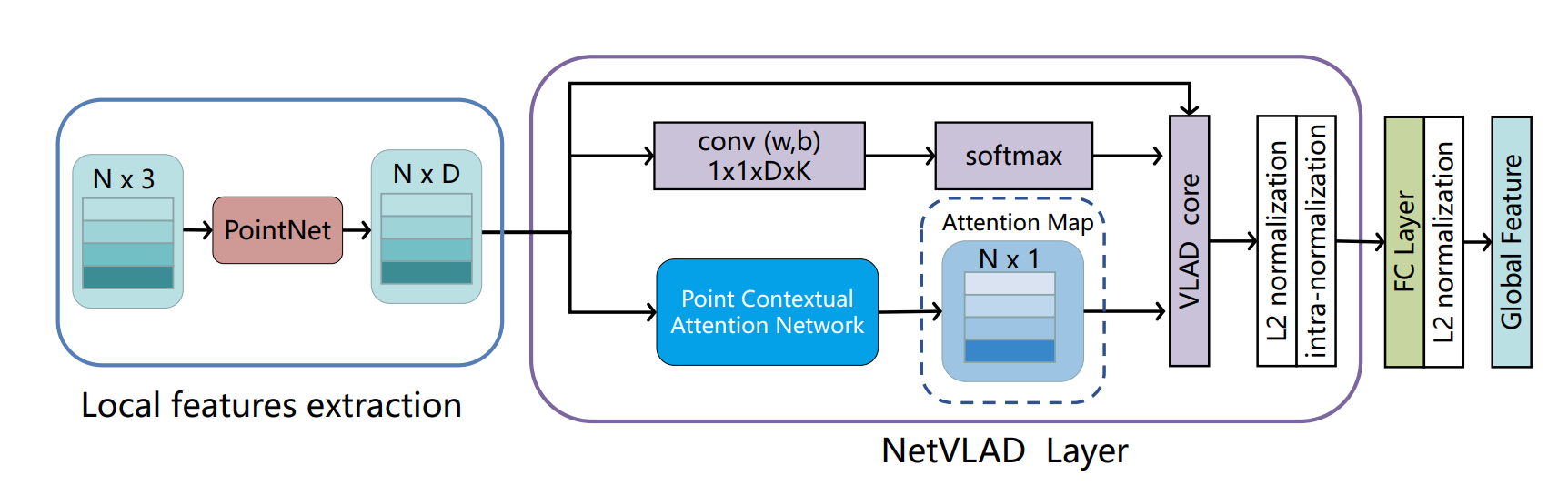

PCAN: 3D Attention Map Learning Using Contextual Information for Point Cloud Based Retrieval

Point cloud based retrieval for place recognition is an emerging problem in the vision field. The main challenge is finding an efficient way to encode local features into a discriminative global descriptor. In this paper, we propose a Point Contextual Attention Network (PCAN), which predicts the significance of each local point feature based on point context. Our network makes it possible to pay more attention to task-relevant features when aggregating local features. Experiments on various benchmark datasets show that the proposed network outperforms current state-of-the-art approaches.
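To make the aggregation idea concrete, the following is a minimal sketch of attention-weighted pooling of local point features into a single global descriptor. It is not the PCAN architecture: the function name `attention_weighted_descriptor` is hypothetical, the per-point attention scores are passed in directly rather than predicted by a network from point context, and both the softmax normalization and the plain weighted sum are simplifying assumptions made here for illustration.

```python
import numpy as np


def attention_weighted_descriptor(local_features, attention_scores):
    """Pool N local features of shape (N, D) into one global descriptor of shape (D,).

    attention_scores has shape (N,): one unnormalized significance score per point.
    In a real network these would be predicted from point context; here they are
    given as input (an assumption made for illustration).
    """
    features = np.asarray(local_features, dtype=float)
    scores = np.asarray(attention_scores, dtype=float)

    # Softmax over points (assumed normalization); subtract the max for stability.
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()

    # Weighted sum: points with larger weights contribute more to the descriptor.
    descriptor = weights @ features

    # L2-normalize so descriptors can be compared by Euclidean or cosine distance.
    norm = np.linalg.norm(descriptor)
    return descriptor / norm if norm > 0 else descriptor
```

With equal scores every point contributes equally. As one score grows relative to the others, the descriptor approaches the direction of that point's feature, which is the sense in which attention lets task-relevant features dominate the aggregate.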

CVPR 2019

Datasets

Results from the Paper

Ranked #9 on 3D Place Recognition on Oxford RobotCar Dataset (AR@1% metric)

Oxford RobotCar Dataset
