Phrase-Based Affordance Detection via Cyclic Bilateral Interaction

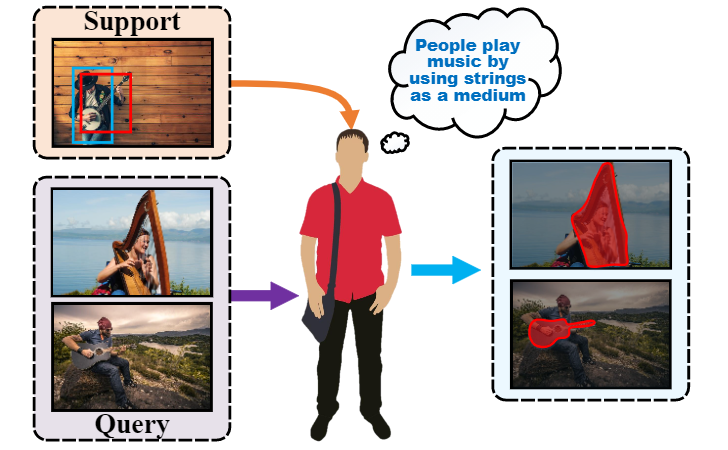

Affordance detection, which refers to perceiving objects with potential action possibilities in images, is a challenging task since the possible affordance depends on the person's purpose in real-world application scenarios. The existing works mainly extract the inherent human-object dependencies from image/video to accommodate affordance properties that change dynamically. In this paper, we explore to perceive affordance from a vision-language perspective and consider the challenging phrase-based affordance detection problem,i.e., given a set of phrases describing the action purposes, all the object regions in a scene with the same affordance should be detected. To this end, we propose a cyclic bilateral consistency enhancement network (CBCE-Net) to align language and vision features progressively. Specifically, the presented CBCE-Net consists of a mutual guided vision-language module that updates the common features of vision and language in a progressive manner, and a cyclic interaction module (CIM) that facilitates the perception of possible interaction with objects in a cyclic manner. In addition, we extend the public Purpose-driven Affordance Dataset (PAD) by annotating affordance categories with short phrases. The contrastive experimental results demonstrate the superiority of our method over nine typical methods from four relevant fields in terms of both objective metrics and visual quality. The related code and dataset will be released at \url{https://github.com/lulsheng/CBCE-Net}.

PDF Abstract

PAD

PAD