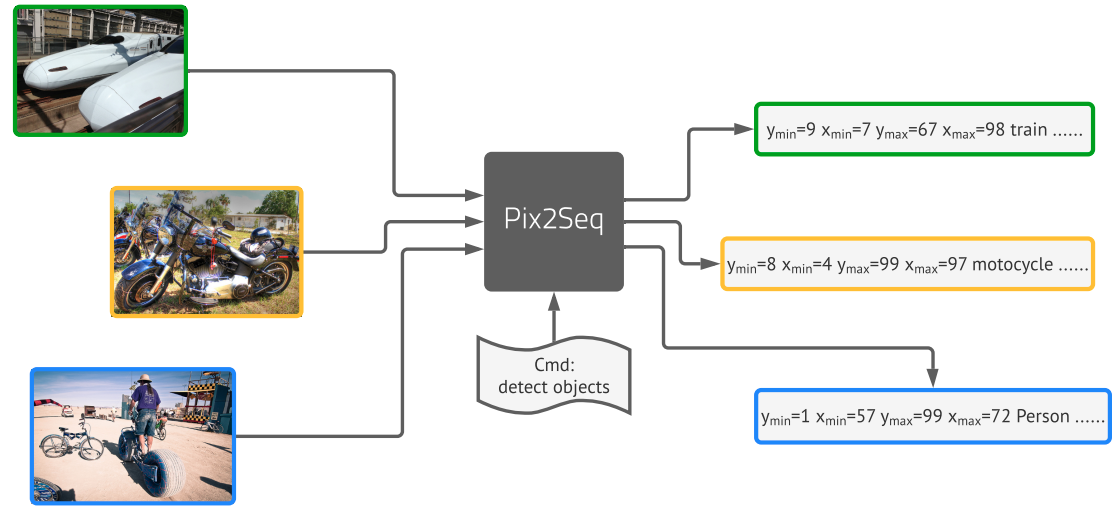

Pix2seq: A Language Modeling Framework for Object Detection

We present Pix2Seq, a simple and generic framework for object detection. Unlike existing approaches that explicitly integrate prior knowledge about the task, we cast object detection as a language modeling task conditioned on the observed pixel inputs. Object descriptions (e.g., bounding boxes and class labels) are expressed as sequences of discrete tokens, and we train a neural network to perceive the image and generate the desired sequence. Our approach is based mainly on the intuition that if a neural network knows about where and what the objects are, we just need to teach it how to read them out. Beyond the use of task-specific data augmentations, our approach makes minimal assumptions about the task, yet it achieves competitive results on the challenging COCO dataset, compared to highly specialized and well optimized detection algorithms.
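The abstract says that bounding boxes and class labels are expressed as sequences of discrete tokens. The sketch below shows one way to do that serialization under stated assumptions. It is not the authors' implementation. The following choices are hypothetical and for illustration only:

- the bin count (`NUM_BINS`),
- the coordinate order `(ymin, xmin, ymax, xmax)`,
- placing class tokens after the coordinate vocabulary (`CLASS_OFFSET`),
- the helper names.

Each box coordinate is quantized into an integer bin, and each object contributes five tokens.

```python
from typing import List, Sequence, Tuple

# Hypothetical settings for illustration only; not taken from the paper.
NUM_BINS = 1000          # number of discrete coordinate bins (assumption)
CLASS_OFFSET = NUM_BINS  # class tokens are placed after the coordinate tokens (assumption)

Box = Tuple[float, float, float, float]  # (ymin, xmin, ymax, xmax) in pixels (assumed order)


def quantize(coord: float, size: float, num_bins: int = NUM_BINS) -> int:
    """Map a pixel coordinate in [0, size] to an integer bin in [0, num_bins - 1]."""
    frac = min(max(coord / size, 0.0), 1.0)  # normalize and clip to [0, 1]
    return int(round(frac * (num_bins - 1)))


def dequantize(token: int, size: float, num_bins: int = NUM_BINS) -> float:
    """Map a coordinate bin back to an approximate pixel coordinate."""
    return token / (num_bins - 1) * size


def objects_to_sequence(boxes: Sequence[Box], labels: Sequence[int],
                        height: float, width: float) -> List[int]:
    """Serialize objects as [y0, x0, y1, x1, class] token groups, one group per object."""
    seq: List[int] = []
    for (ymin, xmin, ymax, xmax), label in zip(boxes, labels):
        seq += [
            quantize(ymin, height), quantize(xmin, width),
            quantize(ymax, height), quantize(xmax, width),
            CLASS_OFFSET + label,  # class label shifted into its own token range
        ]
    return seq


def sequence_to_objects(seq: Sequence[int], height: float,
                        width: float) -> List[Tuple[Box, int]]:
    """Read objects back out of a token sequence, ignoring any incomplete trailing group."""
    objects: List[Tuple[Box, int]] = []
    for i in range(0, len(seq) - len(seq) % 5, 5):
        y0, x0, y1, x1, cls = seq[i:i + 5]
        box = (dequantize(y0, height), dequantize(x0, width),
               dequantize(y1, height), dequantize(x1, width))
        objects.append((box, cls - CLASS_OFFSET))
    return objects


if __name__ == "__main__":
    tokens = objects_to_sequence([(10, 20, 110, 220)], [3], height=480, width=640)
    print(tokens)  # [21, 31, 229, 343, 1003]
    print(sequence_to_objects(tokens, height=480, width=640))
```

With this scheme, decoding loses at most about one bin width of precision per coordinate, roughly `size / (NUM_BINS - 1)` pixels. A finer quantization lowers that error but enlarges the token vocabulary.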

ICLR 2022

Datasets

MS COCO

Objects365

Results from the Paper

Ranked #77 on Object Detection on COCO minival (using extra training data)