Pose Guided Person Image Generation

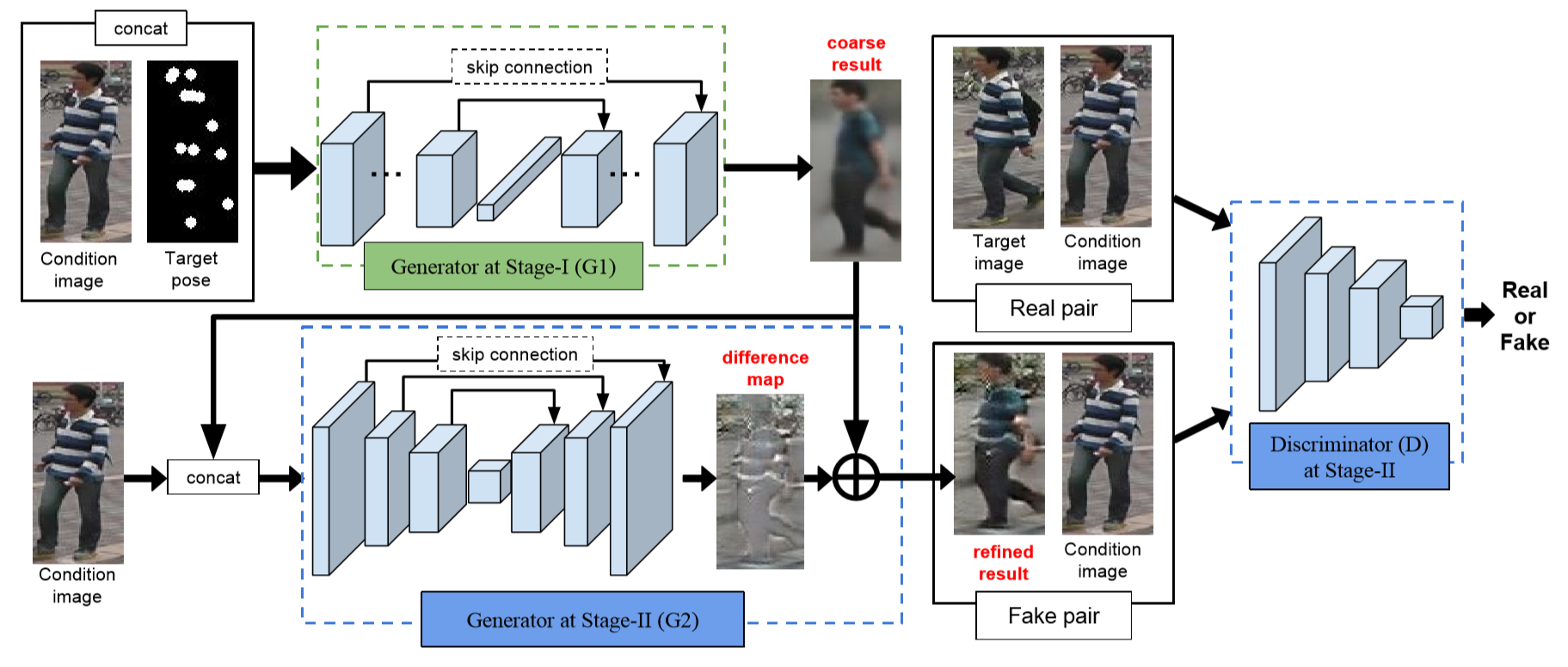

This paper proposes the novel Pose Guided Person Generation Network (PG$^2$), which synthesizes person images in arbitrary poses from an image of that person and a novel pose. Our generation framework PG$^2$ uses the pose information explicitly and consists of two key stages: pose integration and image refinement. In the first stage, the condition image and the target pose are fed into a U-Net-like network to generate an initial but coarse image of the person in the target pose. The second stage then refines this initial, blurry result by training a U-Net-like generator adversarially. Extensive experimental results on both 128$\times$64 re-identification images and 256$\times$256 fashion photos show that our model generates high-quality person images with convincing details.
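The two-stage data flow described above can be sketched in a few lines of NumPy. This is only an illustration of how the inputs might be wired together, not the authors' implementation: the keypoint count, the heatmap encoding of the pose, the choice to feed the condition image into the second stage, and the `placeholder_net` stand-in (which simply returns the first three channels instead of running a U-Net) are all assumptions made for this example.

```python
import numpy as np

NUM_KEYPOINTS = 18  # assumption: the abstract does not state the keypoint count


def pose_to_heatmaps(keypoints, height, width, radius=2):
    """Encode (row, col) keypoints as binary disks, one channel per keypoint."""
    maps = np.zeros((len(keypoints), height, width), dtype=np.float32)
    rows, cols = np.mgrid[0:height, 0:width]
    for k, (r, c) in enumerate(keypoints):
        maps[k][(rows - r) ** 2 + (cols - c) ** 2 <= radius ** 2] = 1.0
    return maps


def stage1_coarse(condition_image, pose_maps, net):
    """Stage 1 (pose integration): condition image + target pose -> coarse image."""
    x = np.concatenate([condition_image, pose_maps], axis=0)
    return net(x)


def stage2_refine(condition_image, coarse_image, net):
    """Stage 2 (image refinement): assumed here to see condition + coarse image."""
    x = np.concatenate([condition_image, coarse_image], axis=0)
    return net(x)


def placeholder_net(x):
    """Hypothetical stand-in for a U-Net-like network: keeps the first 3 channels."""
    return x[:3].copy()


# Example at the 128x64 re-identification resolution mentioned in the abstract.
H, W = 128, 64
rng = np.random.default_rng(0)
condition = rng.random((3, H, W), dtype=np.float32)
keypoints = [
    (int(r), int(c))
    for r, c in zip(rng.integers(0, H, NUM_KEYPOINTS), rng.integers(0, W, NUM_KEYPOINTS))
]

pose_maps = pose_to_heatmaps(keypoints, H, W)
coarse = stage1_coarse(condition, pose_maps, placeholder_net)
refined = stage2_refine(condition, coarse, placeholder_net)

print(pose_maps.shape, coarse.shape, refined.shape)
```

In a real system, each `placeholder_net` call would be replaced by a trained U-Net-like model, and the second one would be trained adversarially against a discriminator; neither the networks nor the training loop is shown here.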

NeurIPS 2017

Market-1501

DeepFashion

In-Shop
