Positional Normalization

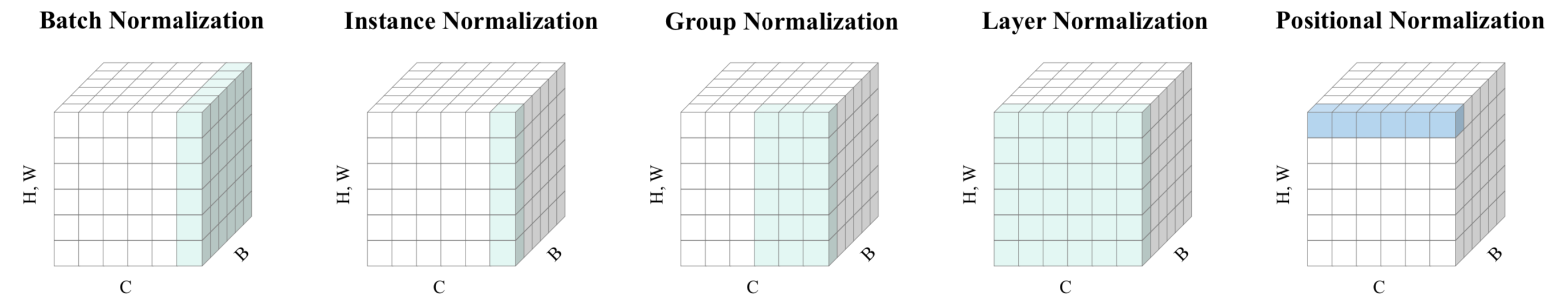

A popular method to reduce the training time of deep neural networks is to normalize activations at each layer. Although various normalization schemes have been proposed, they all follow a common theme: normalize across spatial dimensions and discard the extracted statistics. In this paper, we propose an alternative normalization method that noticeably departs from this convention and normalizes exclusively across channels. We argue that the channel dimension is naturally appealing because it allows us to extract the first and second moments of the features at a particular image position. These moments capture structural information about the input image and the extracted features, which opens a new avenue along which a network can benefit from feature normalization: instead of disregarding the normalization constants, we propose to re-inject them into later layers to preserve or transfer structural information in generative networks. Code is available at https://github.com/Boyiliee/PONO.
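To make the idea concrete, here is a minimal sketch of normalizing across channels at each spatial position and then re-injecting the extracted moments into a later feature map. It is an illustration under stated assumptions, not the authors' implementation: the function names `positional_normalize` and `reinject_moments`, the `eps` constant, the use of the population variance (dividing by the channel count), and the scale-then-shift form of the re-injection are all choices made for this example. Plain nested lists of shape `[C][H][W]` are used to keep it dependency-free; a real network would use a tensor library.

```python
import math


def positional_normalize(x, eps=1e-5):
    """Normalize a [C][H][W] feature map across channels at each position.

    Returns (normalized, mean, std), where mean and std have shape [H][W].
    Assumption: population variance (divide by C) plus eps for stability.
    """
    C, H, W = len(x), len(x[0]), len(x[0][0])
    # First moment: mean over the channel axis for every (i, j) position.
    mean = [[sum(x[c][i][j] for c in range(C)) / C for j in range(W)]
            for i in range(H)]
    # Second moment: standard deviation over the channel axis.
    std = [[math.sqrt(sum((x[c][i][j] - mean[i][j]) ** 2
                          for c in range(C)) / C + eps)
            for j in range(W)]
           for i in range(H)]
    normalized = [[[(x[c][i][j] - mean[i][j]) / std[i][j]
                    for j in range(W)]
                   for i in range(H)]
                  for c in range(C)]
    return normalized, mean, std


def reinject_moments(y, mean, std):
    """Scale and shift a later [C'][H][W] feature map with earlier moments.

    Hypothetical helper: the same (mean, std) pair is applied to every
    channel of y, so y must share the spatial size of the moments.
    """
    return [[[y[c][i][j] * std[i][j] + mean[i][j]
              for j in range(len(y[c][i]))]
             for i in range(len(y[c]))]
            for c in range(len(y))]


# Two channels, one row, two columns.
features = [[[1.0, 2.0]], [[3.0, 2.0]]]
normalized, mean, std = positional_normalize(features)
restored = reinject_moments(normalized, mean, std)
```

In this sketch, applying `reinject_moments` directly to the normalized map recovers the original features up to floating-point error. In a generative network the moments would instead be applied to features produced by later layers, which is how the positional structure is carried forward.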

NeurIPS 2019

ImageNet

Cityscapes
