Predicting Prosodic Prominence from Text with Pre-trained Contextualized Word Representations

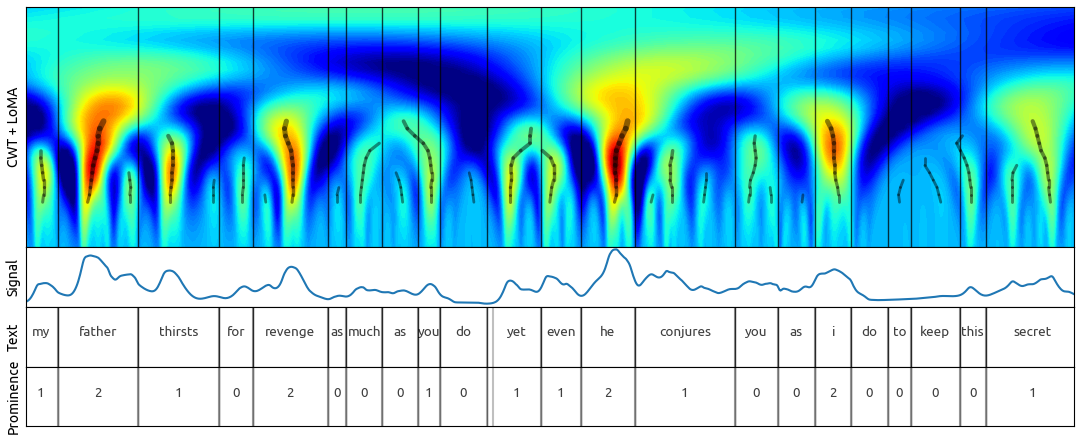

In this paper, we introduce a new natural language processing dataset and benchmark for predicting prosodic prominence from written text. To our knowledge, this is the largest publicly available dataset with prosodic labels. We describe the dataset construction and the resulting benchmark dataset in detail, and we train a number of different models, ranging from feature-based classifiers to neural network systems, to predict discretized prosodic prominence. We show that pre-trained contextualized word representations from BERT outperform the other models even when trained on less than 10% of the training data. Finally, we discuss the dataset in light of the results and point to future research and plans for further improving both the dataset and the methods for predicting prosodic prominence from text. The dataset and the code for the models are publicly available.
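Framed this way, the task is word-level classification: each word carries a discrete prominence label derived from a continuous acoustic score. The sketch below illustrates that framing under stated assumptions. The three classes (0 = non-prominent, 1 = somewhat prominent, 2 = prominent), the cut points `LOW_CUT`/`HIGH_CUT`, the helper names, and the toy data are all hypothetical and do not reproduce the dataset's actual labeling procedure. The baseline is a plain majority-label lookup per word type, chosen for brevity; it is neither the paper's feature-based classifier nor its BERT model.

```python
from collections import Counter, defaultdict

# Hypothetical cut points for discretizing a continuous prominence score;
# the thresholds actually used to build the dataset are not given here.
LOW_CUT, HIGH_CUT = 0.5, 1.5


def discretize(score: float) -> int:
    """Map a continuous prominence score to an assumed 3-way label:
    0 (non-prominent), 1 (somewhat prominent), or 2 (prominent)."""
    if score < LOW_CUT:
        return 0
    if score < HIGH_CUT:
        return 1
    return 2


def train_word_baseline(sentences):
    """Learn the most frequent label for each lower-cased word type,
    plus the overall most frequent label as a fallback for unseen words."""
    per_word = defaultdict(Counter)
    overall = Counter()
    for sent in sentences:
        for word, label in sent:
            per_word[word.lower()][label] += 1
            overall[label] += 1
    table = {w: c.most_common(1)[0][0] for w, c in per_word.items()}
    default = overall.most_common(1)[0][0]
    return table, default


def predict(model, words):
    """Predict one label per word; unseen words get the fallback label."""
    table, default = model
    return [table.get(w.lower(), default) for w in words]


def accuracy(model, sentences):
    """Token-level accuracy over (word, gold_label) sentences."""
    correct = total = 0
    for sent in sentences:
        words = [w for w, _ in sent]
        gold = [label for _, label in sent]
        for pred, ref in zip(predict(model, words), gold):
            correct += int(pred == ref)
            total += 1
    return correct / total


# Toy (word, continuous score) data, invented purely for illustration.
raw = [
    [("the", 0.1), ("cat", 1.8), ("sat", 0.9)],
    [("a", 0.3), ("dog", 2.0), ("ran", 1.1)],
    [("the", 0.2), ("dog", 1.7), ("slept", 0.4)],
]
train = [[(w, discretize(s)) for w, s in sent] for sent in raw]
model = train_word_baseline(train)
print(predict(model, ["The", "dog", "barked"]))  # "barked" is unseen
print(accuracy(model, train))
```

A contextual model such as BERT replaces the lookup table with a classifier over context-dependent token representations, which is what lets it assign different labels to the same word in different sentences; the lookup baseline cannot do that.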

Venue: WS (NoDaLiDa) 2019

Helsinki Prosody Corpus

LibriSpeech