Progression Modelling for Online and Early Gesture Detection

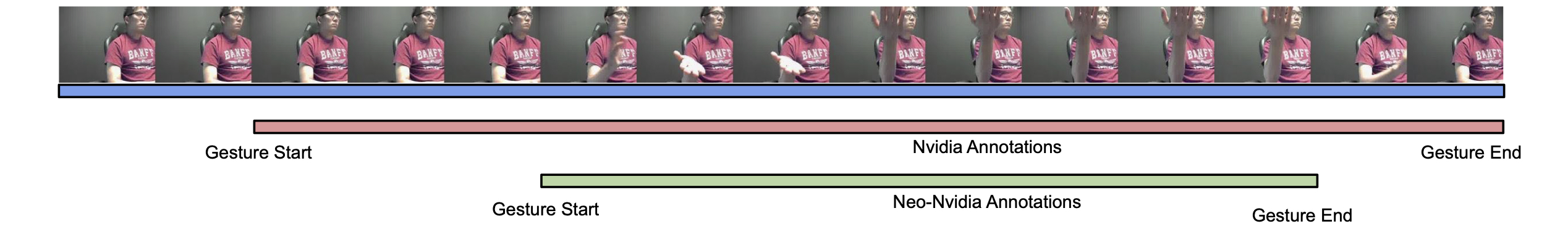

Online and early detection of gestures is crucial for building touchless gesture-based interfaces. To provide a real-time user experience, these interfaces should operate on a stream of video frames rather than the complete video, and should detect a gesture before it completes. This requires recognizing the progression of the gesture across its stages so that appropriate responses can be triggered when the desired execution stage is reached. We propose a simple yet effective multi-task learning framework that models the progression of the gesture along with frame-level recognition. The proposed framework recognizes gestures at an early stage with high precision. In the offline configuration on the NVIDIA gesture dataset, it achieves a state-of-the-art recognition accuracy of 87.8%, close to the human accuracy of 88.4%, and advances the previous state of the art by more than 4%. We also introduce tightly segmented annotations for the NVIDIA gesture dataset and set up a strong baseline for gesture localization on this dataset. Finally, we evaluate our framework on the Montalbano dataset and report competitive results.
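
To make the idea of triggering a response at a desired execution stage concrete, the sketch below shows one way a streaming consumer might combine per-frame class probabilities with a per-frame progression estimate. It is an illustration only, not the paper's implementation: the function name `first_trigger`, the two thresholds, the convention that class 0 is "no gesture", and the assumption that progression is a scalar in [0, 1] are all hypothetical choices made for this example.

```python
from typing import Iterable, Optional, Sequence, Tuple


def first_trigger(
    frames: Iterable[Tuple[Sequence[float], float]],
    progress_threshold: float = 0.5,    # hypothetical: fire once half the gesture is seen
    confidence_threshold: float = 0.8,  # hypothetical: minimum class probability
    background_class: int = 0,          # assumption: class 0 means "no gesture"
) -> Optional[Tuple[int, int]]:
    """Return (frame_index, class_id) for the first frame that fires, or None.

    Each element of `frames` is (class_probs, progress), where `progress`
    is an assumed scalar estimate of how far the gesture has advanced.
    """
    for index, (class_probs, progress) in enumerate(frames):
        # Most likely class for this frame.
        class_id = max(range(len(class_probs)), key=class_probs.__getitem__)
        if class_id == background_class:
            continue
        # Fire only when both the recognition and the progression are far enough along.
        if class_probs[class_id] >= confidence_threshold and progress >= progress_threshold:
            return index, class_id
    return None


# Toy stream: probabilities over [background, gesture A, gesture B] plus progression.
stream = [
    ([0.90, 0.05, 0.05], 0.0),
    ([0.30, 0.60, 0.10], 0.2),
    ([0.10, 0.85, 0.05], 0.4),
    ([0.05, 0.90, 0.05], 0.6),
    ([0.05, 0.92, 0.03], 0.9),
]
print(first_trigger(stream))  # (3, 1)
```

Lowering `progress_threshold` makes the trigger fire earlier in the gesture, at the cost of acting on less evidence; in this toy stream a threshold of 0.3 would fire one frame sooner.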
