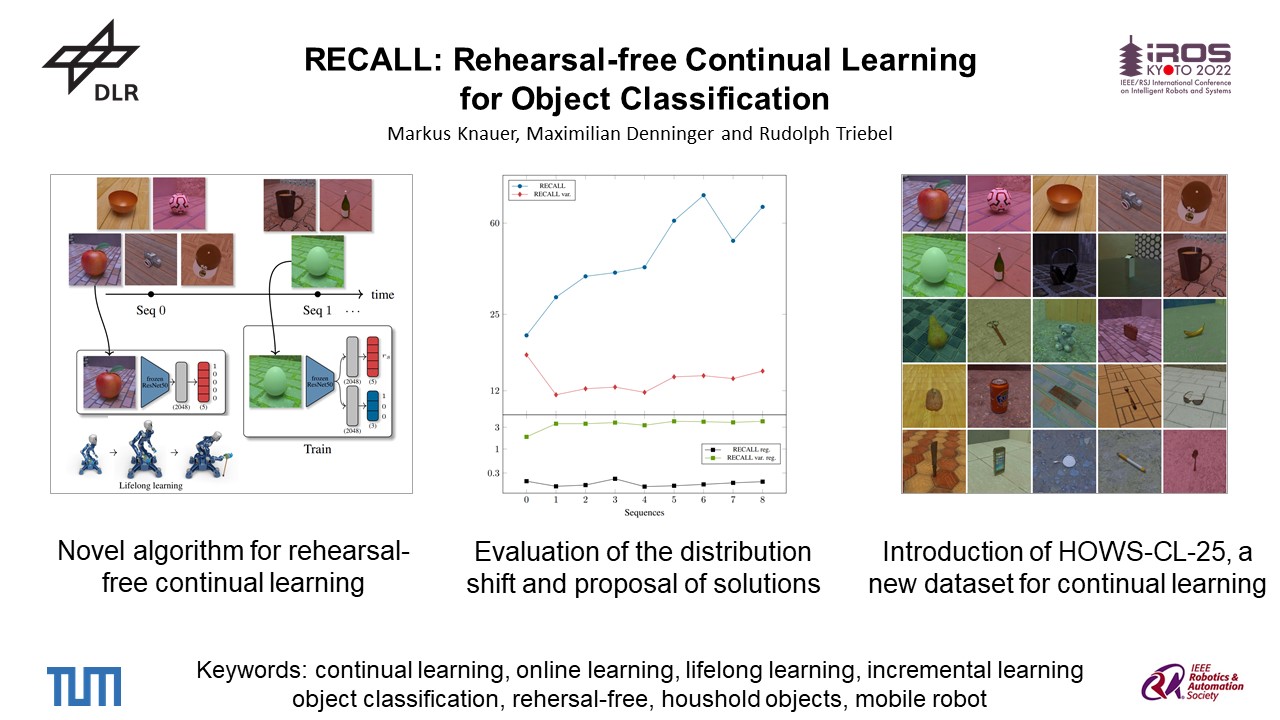

RECALL: Rehearsal-free Continual Learning for Object Classification

Convolutional neural networks achieve remarkable results in classification but struggle to learn new categories on the fly. We present a novel rehearsal-free approach in which a deep neural network continually learns new, unseen object categories without saving any data from prior sequences. Our approach is called RECALL because the network recalls old categories by calculating their logits before training on new ones. These logits are then used during training to keep the outputs for the old categories from changing. For each new sequence, a new head is added to accommodate the new categories. To mitigate forgetting, we present a regularization strategy in which we replace the classification with a regression. Moreover, for the known categories, we propose a Mahalanobis loss that includes the variances to account for the changing densities between known and unknown categories. Finally, we present a novel dataset for continual learning that is especially suited for object recognition on a mobile robot (HOWS-CL-25); it comprises 150,795 synthetic images of 25 household object categories. RECALL outperforms the current state of the art on CORe50 and iCIFAR-100 and reaches the best performance on HOWS-CL-25.
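The recall step and the variance-weighted regression described above can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation: the abstract gives no exact formulation, so the helper names (`linear_head`, `recall_logits`, `per_category_variance`, `variance_weighted_loss`), the toy linear head, and the diagonal-covariance form of the Mahalanobis distance are all assumptions made for illustration.

```python
from statistics import pvariance


def linear_head(weights, bias):
    """Hypothetical toy head: maps a feature vector to one logit per category."""
    def head(x):
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(weights, bias)]
    return head


def recall_logits(old_head, inputs):
    """Record the old head's logits on the new sequence BEFORE training on it."""
    return [old_head(x) for x in inputs]


def per_category_variance(logits):
    """Variance of the recalled logits, computed separately for each old category."""
    return [pvariance(column) for column in zip(*logits)]


def variance_weighted_loss(predicted, recalled, variances, eps=1e-6):
    """Regression loss on old-category logits, each squared error divided by the
    category variance (a squared Mahalanobis distance under the assumption of a
    diagonal covariance). eps guards against division by zero."""
    total, count = 0.0, 0
    for pred_row, target_row in zip(predicted, recalled):
        for p, t, v in zip(pred_row, target_row, variances):
            total += (p - t) ** 2 / (v + eps)
            count += 1
    return total / count


# Toy data: two old categories, two samples from the new sequence.
old_head = linear_head([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
new_inputs = [[1.0, 2.0], [3.0, 2.0]]

recalled = recall_logits(old_head, new_inputs)
variances = per_category_variance(recalled)

# No drift: the network still reproduces the recalled logits.
loss_unchanged = variance_weighted_loss(recalled, recalled, variances)

# Drift of 0.5 on the first old category after some hypothetical training.
drifted = [[row[0] + 0.5, row[1]] for row in recalled]
loss_drifted = variance_weighted_loss(drifted, recalled, variances)
```

In this sketch the loss is zero as long as the old-category logits are reproduced exactly and grows as they drift, which is the behaviour the regularization is meant to penalize. Because no stored images from earlier sequences are needed to compute the recalled logits, the scheme stays rehearsal-free.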

HOWS

ImageNet

CIFAR-100

CORe50