RegionCLIP: Region-based Language-Image Pretraining

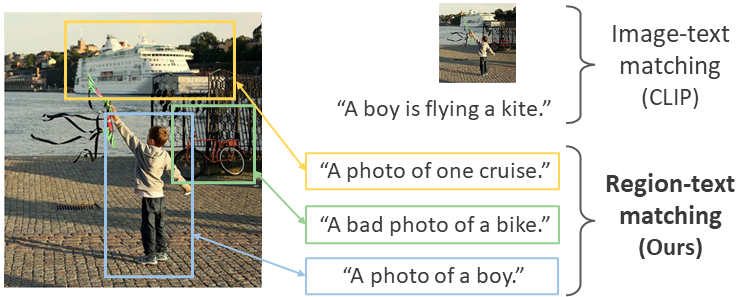

Contrastive language-image pretraining (CLIP) using image-text pairs has achieved impressive results on image classification in both zero-shot and transfer learning settings. However, we show that directly applying such models to recognize image regions for object detection leads to poor performance due to a domain shift: CLIP was trained to match an image as a whole to a text description, without capturing the fine-grained alignment between image regions and text spans. To mitigate this issue, we propose a new method called RegionCLIP that significantly extends CLIP to learn region-level visual representations, thus enabling fine-grained alignment between image regions and textual concepts. Our method leverages a CLIP model to match image regions with template captions and then pretrains our model to align these region-text pairs in the feature space. When transferring our pretrained model to open-vocabulary object detection tasks, our method significantly outperforms the state of the art by 3.8 AP50 and 2.2 AP for novel categories on the COCO and LVIS datasets, respectively. Moreover, the learned region representations support zero-shot inference for object detection, showing promising results on both COCO and LVIS datasets. Our code is available at https://github.com/microsoft/RegionCLIP.
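The two steps described in the abstract (matching regions to template captions, then aligning the resulting region-text pairs) can be illustrated with a minimal sketch. This is not the authors' implementation: the prompt wording, the function names `prompt`, `match_regions`, and `alignment_loss`, the temperature value, and the toy 2-D vectors standing in for encoder outputs are all assumptions made for illustration, and the loss shown is only a plain softmax cross-entropy over cosine similarities.

```python
import math

def prompt(concept):
    # Hypothetical caption template; the exact wording is an assumption.
    return f"a photo of a {concept}"

def cosine(u, v):
    # Cosine similarity between two equal-length vectors.
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def match_regions(region_feats, text_feats):
    # For each region, pick the index of the most similar caption embedding.
    return [
        max(range(len(text_feats)), key=lambda j: cosine(r, text_feats[j]))
        for r in region_feats
    ]

def alignment_loss(region_feats, text_feats, labels, temperature=0.1):
    # Mean softmax cross-entropy over region-to-text similarities.
    # The temperature value is an assumption, not taken from the paper.
    total = 0.0
    for r, y in zip(region_feats, labels):
        logits = [cosine(r, t) / temperature for t in text_feats]
        m = max(logits)  # subtract the max for numerical stability
        log_z = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_z - logits[y]
    return total / len(region_feats)

# Toy data: two concepts and two regions, with 2-D stand-in embeddings.
concepts = ["dog", "kite"]
captions = [prompt(c) for c in concepts]
text_feats = [[1.0, 0.0], [0.0, 1.0]]        # stand-in text embeddings
teacher_regions = [[0.9, 0.1], [0.2, 0.8]]   # stand-in CLIP region features

# Step 1: the existing CLIP model assigns each region its best caption.
pseudo_labels = match_regions(teacher_regions, text_feats)

# Step 2: the model being pretrained is scored against those pairs.
student_regions = [[0.6, 0.4], [0.5, 0.5]]   # stand-in student features
loss = alignment_loss(student_regions, text_feats, pseudo_labels)
```

In this sketch, `match_regions` doubles as the zero-shot classifier: at inference time the same nearest-caption lookup would be applied to the pretrained model's region features. Minimizing `loss` pulls each student region feature toward the caption chosen for it in step 1.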

CVPR 2022

Results from the Paper

Ranked #11 on Open Vocabulary Object Detection on MSCOCO (using extra training data)

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Open Vocabulary Object Detection | LVIS v1.0 | Region-CLIP (RN50x4-C4) | AP novel-LVIS base training | 22.0 | #13 |
| Open Vocabulary Object Detection | LVIS v1.0 | Region-CLIP (RN50-C4) | AP novel-LVIS base training | 17.1 | #20 |
| Open Vocabulary Object Detection | MSCOCO | Region-CLIP (RN50x4-C4) | AP 0.5 | 39.3 | #11 |
| Open Vocabulary Object Detection | MSCOCO | Region-CLIP (RN50-C4) | AP 0.5 | 31.4 | #18 |

ImageNet

MS COCO

LVIS
