Reinforcement Learning via Fenchel-Rockafellar Duality

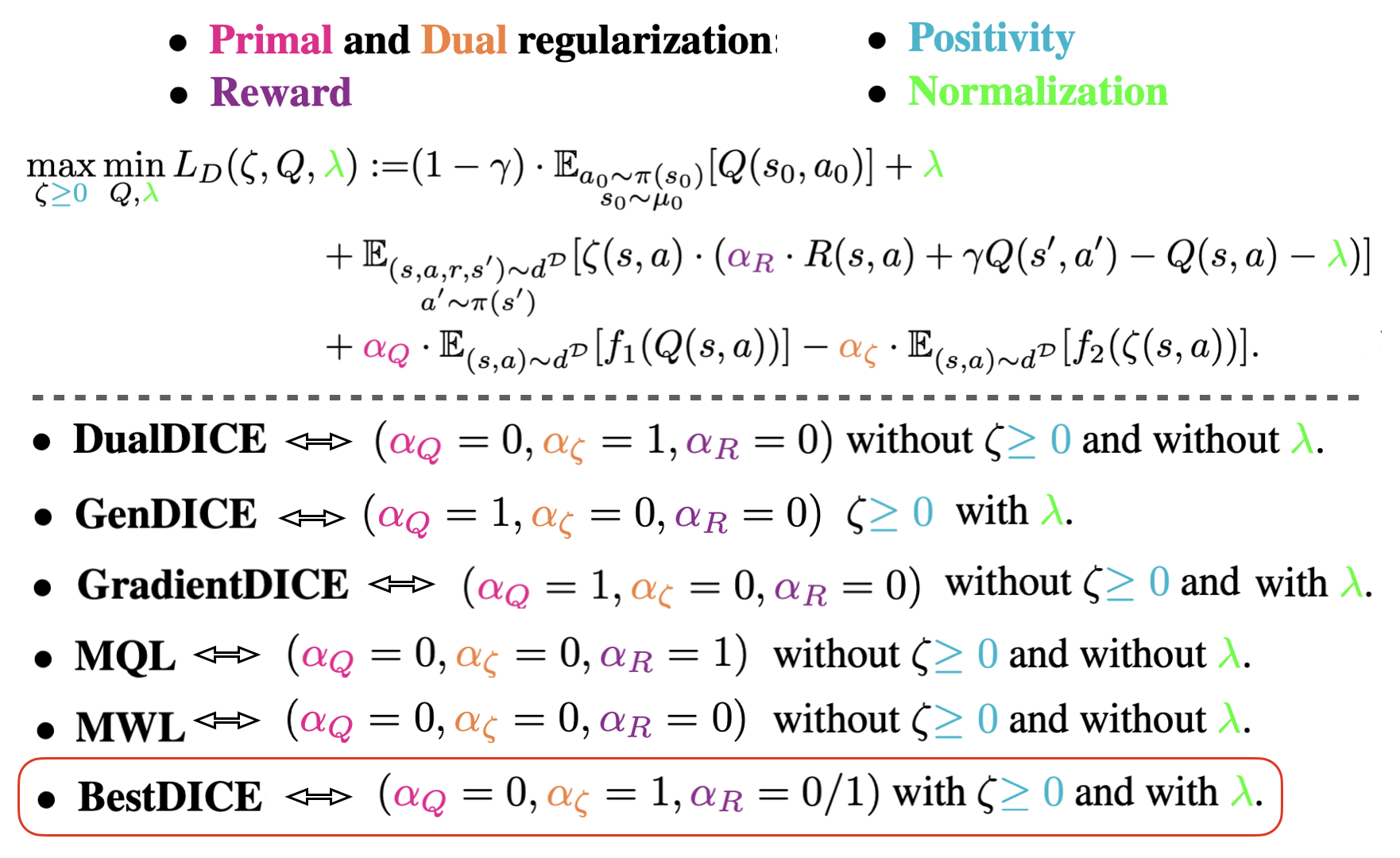

We review basic concepts of convex duality, focusing on the very general and supremely useful Fenchel-Rockafellar duality. We summarize how this duality may be applied to a variety of reinforcement learning (RL) settings, including policy evaluation or optimization, online or offline learning, and discounted or undiscounted rewards. The derivations yield a number of intriguing results, including the ability to perform policy evaluation and on-policy policy gradient with behavior-agnostic offline data, as well as methods to learn a policy via maximum-likelihood optimization. Although many of these results have appeared previously in various forms, we provide a unified treatment of and perspective on them, which we hope will enable researchers to better use and apply the tools of convex duality to make further progress in RL.
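
For orientation, the duality named above can be stated in its standard textbook form. This is a sketch under the usual assumptions, not necessarily the notation the paper uses: the symbols $f$, $g$, $A$, $x$, and $y$ are chosen here for illustration, $f$ and $g$ are taken to be proper, convex, lower semicontinuous functions, and $A$ is a linear operator with adjoint $A^{\top}$.

```latex
% Convex (Fenchel) conjugate of f
f^{*}(y) = \sup_{x} \; \langle x, y \rangle - f(x)

% Primal problem
\min_{x} \; f(x) + g(Ax)

% Fenchel-Rockafellar dual problem
\max_{y} \; -f^{*}(-A^{\top} y) - g^{*}(y)
```

Under mild regularity conditions (a suitable constraint qualification), the primal and dual optimal values coincide, so a problem posed in the primal variable $x$ can instead be solved in the dual variable $y$.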
