Rethinking the Evaluation of Unbiased Scene Graph Generation

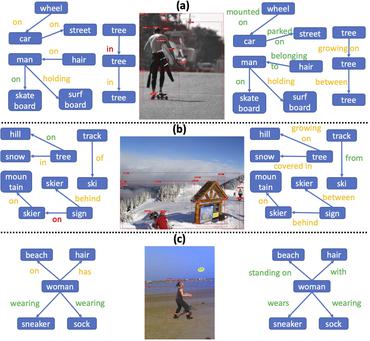

Current Scene Graph Generation (SGG) methods tend to predict frequent predicate categories and fail to recognize rare ones because the distribution of predicates is severely imbalanced. To improve the robustness of SGG models across predicate categories, recent research has focused on unbiased SGG and adopted mean Recall@K (mR@K) as the main evaluation metric. However, we discovered two overlooked issues with this de facto standard metric, which make current unbiased SGG evaluation vulnerable and unfair: 1) mR@K neglects the correlations among predicates and unintentionally breaks category independence when it ranks all triplet predictions together regardless of their predicate categories. 2) mR@K neglects the compositional diversity of different predicates and assigns excessively high weights to samples from some overly simple categories that have only a limited number of composable relation triplet types. In addition, we investigate the under-explored correlation between objects and predicates, which can serve as a simple but strong baseline for unbiased SGG. In this paper, we refine mR@K and propose two complementary evaluation metrics for unbiased SGG: Independent Mean Recall (IMR) and weighted IMR (wIMR). These two metrics are designed by considering category independence and the diversity of composable relation triplets, respectively. We compare the proposed metrics with the de facto standard metrics through extensive experiments and discuss how to evaluate unbiased SGG in a more trustworthy way.
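For readers unfamiliar with the baseline metric, the following is a minimal sketch of how mR@K is commonly computed. It is not the authors' code, and it does not implement IMR or wIMR, whose definitions are not given in the abstract. The function name `mean_recall_at_k`, the input layout, and the simplification that a triplet matches on labels alone (no bounding-box overlap check) are assumptions made for illustration. Note that the top-K cut is taken over one ranking shared by all predicate categories, which is the behaviour the abstract's first issue refers to.

```python
from collections import defaultdict


def mean_recall_at_k(images, k):
    """Illustrative mR@K.

    images: list of (ranked_predictions, ground_truth) pairs, where both are
    lists of (subject, predicate, object) label tuples and ranked_predictions
    is sorted by descending confidence. Box matching is omitted (assumption).
    Returns (mean recall over predicate categories, per-category recall dict).
    """
    per_predicate_recalls = defaultdict(list)
    for ranked, ground_truth in images:
        # One shared top-K across all predicate categories.
        top_k = set(ranked[:k])
        hits = defaultdict(int)
        totals = defaultdict(int)
        for triplet in ground_truth:
            predicate = triplet[1]
            totals[predicate] += 1
            if triplet in top_k:
                hits[predicate] += 1
        # Per-image recall for each predicate that occurs in this image.
        for predicate, total in totals.items():
            per_predicate_recalls[predicate].append(hits[predicate] / total)

    if not per_predicate_recalls:
        return 0.0, {}
    # Average over images per predicate, then over predicates.
    per_predicate = {
        p: sum(values) / len(values) for p, values in per_predicate_recalls.items()
    }
    return sum(per_predicate.values()) / len(per_predicate), per_predicate


# Toy example with made-up labels: a frequent predicate ("on") crowds a
# rarer one ("wearing") out of the shared top-2.
example = [(
    [("man", "on", "horse"), ("man", "riding", "horse"), ("man", "wearing", "hat")],
    [("man", "riding", "horse"), ("man", "wearing", "hat")],
)]
score, by_predicate = mean_recall_at_k(example, k=2)
```

In this toy case the prediction for "wearing" is correct but falls outside the shared top-2, so its recall is zero even though nothing about that category was mispredicted.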

Visual Genome